Sorour Mohajerani

Illumination-Invariant Image from 4-Channel Images: The Effect of Near-Infrared Data in Shadow Removal

May 04, 2020

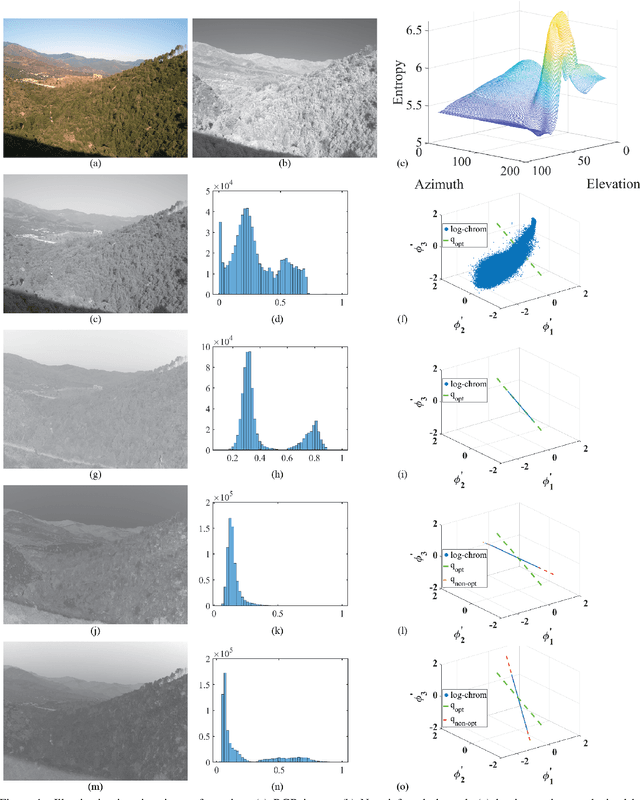

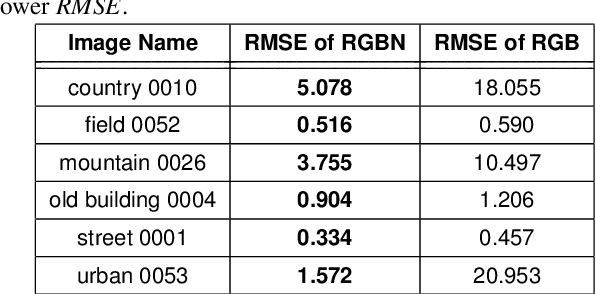

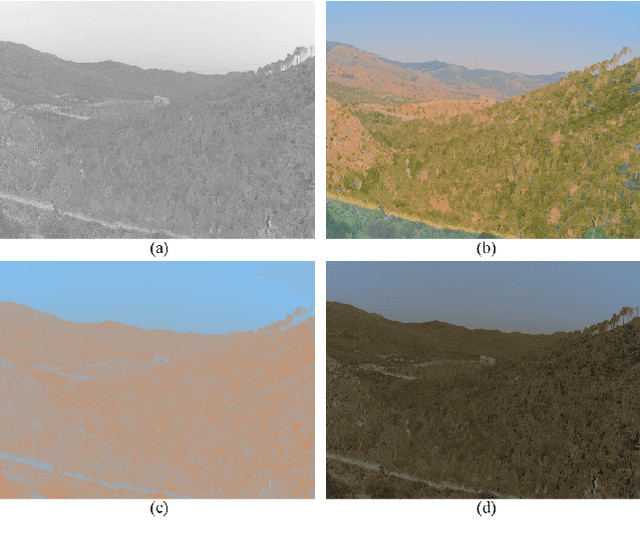

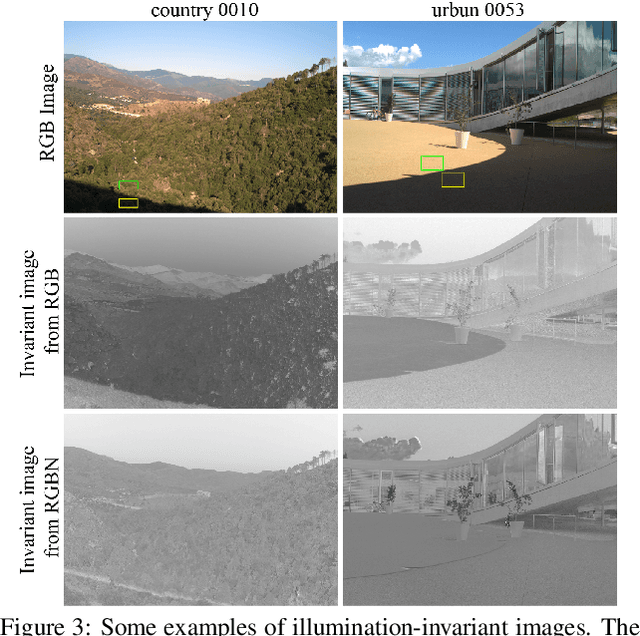

Abstract: Removing the effect of illumination variation in images has proved beneficial in many computer vision applications, such as object recognition and semantic segmentation. Although generating illumination-invariant images has been studied before, it has not been investigated on real 4-channel (4D) data. In this study, we examine the quality of illumination-invariant images generated from red, green, blue, and near-infrared (RGBN) data. Our experiments show that the near-infrared channel contributes substantially to removing the effect of illumination. Our numerical and visual results show that the illumination-invariant image obtained from RGBN data is superior to that obtained from RGB data alone.
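
One well-known recipe for an illumination-invariant image is the log-chromaticity projection. The sketch below is a minimal version for N channels and is not necessarily the pipeline used in this paper. It assumes narrowband sensors and Planckian light under Wien's approximation, so each channel's log response is log R_k = log I + log s_k - c2 / (T * lambda_k). Dividing by the geometric mean of the channels cancels the intensity I, and projecting out the direction along which the color temperature T moves the result cancels the lighting. The wavelengths, surface values, and helper names are hypothetical.

```python
import math

# Hypothetical peak wavelengths (nm) of narrowband R, G, B and NIR sensors.
WAVELENGTHS_NM = [610.0, 540.0, 450.0, 850.0]
C2 = 1.4388e7  # second radiation constant, in nm * K


def render(surface, intensity, temperature, wavelengths=WAVELENGTHS_NM):
    """Synthetic pixel under Wien-approximated Planckian light (simplified model).

    The lambda^-5 term and sensor gains are folded into the per-channel surface values.
    """
    return [intensity * s * math.exp(-C2 / (temperature * lam))
            for s, lam in zip(surface, wavelengths)]


def log_chromaticity(pixel):
    """Log of each channel divided by the geometric mean of all channels (removes intensity)."""
    logs = [math.log(v) for v in pixel]
    mean_log = sum(logs) / len(logs)
    return [v - mean_log for v in logs]


def illumination_direction(wavelengths):
    """Unit vector along which changing color temperature moves the log-chromaticity."""
    terms = [-C2 / lam for lam in wavelengths]
    mean_term = sum(terms) / len(terms)
    d = [t - mean_term for t in terms]
    norm = math.sqrt(sum(v * v for v in d))
    return [v / norm for v in d]


def invariant(pixel, direction):
    """Project out the lighting direction; the result no longer depends on intensity or T."""
    chi = log_chromaticity(pixel)
    dot = sum(c * e for c, e in zip(chi, direction))
    return [c - dot * e for c, e in zip(chi, direction)]


e = illumination_direction(WAVELENGTHS_NM)
surface = [0.4, 0.3, 0.2, 0.6]  # hypothetical reflectance x sensitivity per channel
sunlit = invariant(render(surface, 1.0, 6500.0), e)
shadow = invariant(render(surface, 0.3, 10000.0), e)  # dimmer, bluer light
print(max(abs(a - b) for a, b in zip(sunlit, shadow)))  # close to zero: same surface
```

Log-chromaticity vectors of N channels lie in an (N-1)-dimensional plane, and removing one lighting direction leaves N-2 dimensions. An RGB invariant therefore keeps one degree of freedom, while RGBN keeps two, which is one way to see how an extra channel can help. In practice the lighting direction is usually estimated from data rather than computed from known wavelengths.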

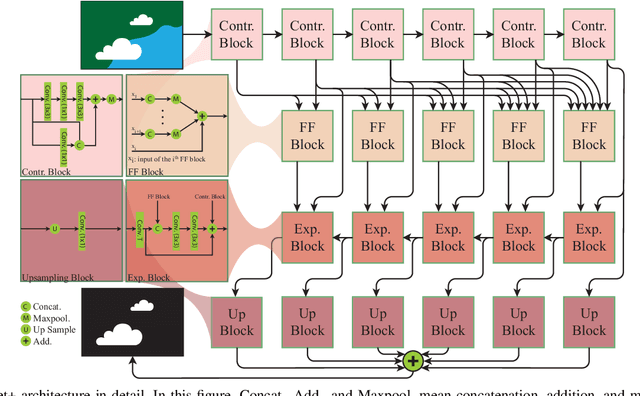

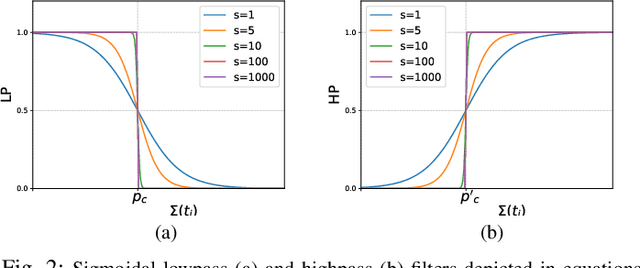

Cloud-Net+: A Cloud Segmentation CNN for Landsat 8 Remote Sensing Imagery Optimized with Filtered Jaccard Loss Function

Jan 23, 2020

Abstract: Cloud segmentation is one of the fundamental steps in optical remote sensing image analysis. Current methods for identifying cloud regions in aerial or satellite images are not accurate enough, especially in the presence of snow and haze. This paper presents a deep-learning-based framework for cloud detection in Landsat 8 imagery. The proposed method uses a convolutional neural network with multiple blocks (Cloud-Net+), trained with a novel loss function (Filtered Jaccard loss). The proposed loss function is more sensitive to the absence of cloud pixels in an image and penalizes or rewards the predicted mask more accurately. The combination of Cloud-Net+ and the Filtered Jaccard loss delivers superior results on four public cloud detection datasets. Our experiments on the Pascal VOC dataset, one of the most common public datasets in computer vision, show that the proposed network and loss function could also be used in other segmentation tasks for more accurate performance and evaluation.
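
As its name suggests, the Filtered Jaccard loss is a variant of the Jaccard (intersection-over-union) loss. The abstract does not give the filtering rule, so the sketch below shows only the standard soft Jaccard loss on flat lists of pixel values, as a baseline. It also illustrates why images with no cloud pixels are awkward for the plain loss. With an empty ground truth, the loss is 0 for an all-clear prediction but jumps to nearly 1 as soon as any pixel is predicted as cloud. The function name and `eps` value are hypothetical.

```python
def soft_jaccard_loss(target, pred, eps=1e-7):
    """Standard soft Jaccard loss: 1 - sum(t*p) / (sum(t) + sum(p) - sum(t*p)).

    target: flat ground-truth mask (1 = cloud, 0 = clear)
    pred:   flat predicted cloud probabilities in [0, 1]
    eps:    small constant (hypothetical value) that avoids 0/0 on empty masks
    """
    if len(target) != len(pred):
        raise ValueError("target and pred must have the same length")
    inter = sum(t * p for t, p in zip(target, pred))
    union = sum(target) + sum(pred) - inter
    return 1.0 - (inter + eps) / (union + eps)


print(soft_jaccard_loss([1, 1, 0, 0], [1.0, 0.0, 0.0, 0.0]))  # about 0.5
# Cloud-free ground truth: perfect prediction vs. one weak false positive.
print(soft_jaccard_loss([0, 0, 0, 0], [0.0, 0.0, 0.0, 0.0]))   # 0.0
print(soft_jaccard_loss([0, 0, 0, 0], [0.01, 0.0, 0.0, 0.0]))  # close to 1
```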

Cloud-Net: An end-to-end Cloud Detection Algorithm for Landsat 8 Imagery

Jan 29, 2019

Abstract: Cloud detection in satellite images is an important first step in many remote sensing applications. The problem is more challenging when only a limited number of spectral bands is available. To address it, this paper proposes a deep-learning-based algorithm consisting of a Fully Convolutional Network (FCN) trained on multiple patches of Landsat 8 images. This network, called Cloud-Net, captures global and local cloud features in an image through its convolutional blocks. Since the proposed method is an end-to-end solution, no complicated pre-processing step is required. Our experimental results show that the proposed method outperforms the state-of-the-art method on a benchmark dataset by 8.7% in Jaccard Index.
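
Training on patches means cutting each large Landsat 8 scene into smaller tiles, typically so that batches fit in memory. The abstract does not state the patch size or tiling scheme. The sketch below assumes a regular grid with a hypothetical patch size and shifts the last row and column of tiles inward so every pixel is covered. Each spectral band of a scene would be cut at the same corners so the channels stay aligned. The helper names are hypothetical.

```python
def tile_starts(length, patch):
    """Start offsets along one axis; the final tile is shifted back to end at the border."""
    if length < patch:
        raise ValueError("scene is smaller than one patch; pad it first")
    starts = list(range(0, length - patch + 1, patch))
    if starts[-1] + patch < length:
        starts.append(length - patch)  # last tile overlaps its neighbour
    return starts


def extract_patches(band, patch):
    """Cut a 2-D band (list of rows) into patch x patch tiles; returns [((row, col), tile)]."""
    height, width = len(band), len(band[0])
    return [((r, c), [row[c:c + patch] for row in band[r:r + patch]])
            for r in tile_starts(height, patch)
            for c in tile_starts(width, patch)]


# Toy 10 x 10 band with a hypothetical 4-pixel patch size.
band = [[r * 10 + c for c in range(10)] for r in range(10)]
patches = extract_patches(band, 4)
print(len(patches))  # 9 tiles, with corners at offsets 0, 4 and 6 on each axis
```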

Cloud Detection Algorithm for Remote Sensing Images Using Fully Convolutional Neural Networks

Oct 13, 2018

Abstract: This paper presents a deep-learning-based framework for accurate cloud detection in remote sensing images. The framework uses a Fully Convolutional Neural Network (FCN) capable of pixel-level labeling of cloud regions in a Landsat 8 image. In addition, a gradient-based approach is proposed to identify and exclude regions of snow/ice in the ground truths of the training set. We show that a hybrid of the two methods (threshold-based and deep-learning-based) improves the performance of cloud identification without the need to manually correct automatically generated ground truths. On average, the Jaccard index and recall are improved by 4.36% and 3.62%, respectively.
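
The Jaccard index and recall are standard pixel-level metrics computed from true positives (TP), false positives (FP), and false negatives (FN): Jaccard = TP / (TP + FP + FN) and recall = TP / (TP + FN). The sketch below computes both on flat binary masks. The function name is hypothetical, and returning 1.0 when a denominator is zero is an assumed convention, not something stated in the abstract.

```python
def mask_metrics(target, pred):
    """Jaccard index and recall for flat binary masks (1 = cloud, 0 = clear)."""
    if len(target) != len(pred):
        raise ValueError("masks must have the same length")
    tp = sum(1 for t, p in zip(target, pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(target, pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(target, pred) if t == 1 and p == 0)
    # Assumed convention: an empty denominator (e.g. no cloud in either mask) scores 1.0.
    jaccard = tp / (tp + fp + fn) if tp + fp + fn else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return jaccard, recall


truth = [1, 1, 1, 0, 0, 0]
guess = [1, 1, 0, 1, 0, 0]
print(mask_metrics(truth, guess))  # TP=2, FP=1, FN=1 -> Jaccard 0.5, recall 2/3
```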

CPNet: A Context Preserver Convolutional Neural Network for Detecting Shadows in Single RGB Images

Oct 13, 2018

Abstract: Automatic detection of shadow regions in an image is difficult because of the lack of prior information about the illumination source and the dynamics of scene objects. To address this problem, this paper proposes a deep-learning-based segmentation method that identifies shadow regions at the pixel level in a single RGB image. We use a novel Convolutional Neural Network (CNN) architecture to identify and extract shadow features in an end-to-end manner. This network preserves learned contexts during training and observes the entire image to detect global and local shadow patterns simultaneously. The proposed method is evaluated on two publicly available datasets, SBU and UCF, and improves the state-of-the-art Balanced Error Rate (BER) on them by 22% and 14%, respectively.
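
The Balanced Error Rate commonly used for shadow detection averages the accuracy on shadow and non-shadow pixels, so a detector cannot score well just by labelling the usually larger non-shadow class. It is defined as BER = (1 - (TP/N_p + TN/N_n) / 2) x 100, where N_p and N_n are the numbers of shadow and non-shadow pixels. The sketch below computes it for one pair of flat binary masks. Benchmark numbers are typically accumulated over a whole test set, and the function name is hypothetical.

```python
def balanced_error_rate(target, pred):
    """BER in percent for flat binary masks (1 = shadow, 0 = non-shadow); lower is better."""
    if len(target) != len(pred):
        raise ValueError("masks must have the same length")
    tp = sum(1 for t, p in zip(target, pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(target, pred) if t == 0 and p == 0)
    n_pos = sum(1 for t in target if t == 1)
    n_neg = len(target) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("BER needs both shadow and non-shadow pixels")
    return 100.0 * (1.0 - 0.5 * (tp / n_pos + tn / n_neg))


print(balanced_error_rate([1, 1, 0, 0, 0, 0], [1, 0, 0, 0, 0, 1]))  # 37.5
# Predicting "no shadow" everywhere is 99% accurate here but still scores BER = 50.
truth = [1] + [0] * 99
print(balanced_error_rate(truth, [0] * 100))  # 50.0
```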
