Sonya Mahajan

Interpretable (not just posthoc-explainable) heterogeneous survivor bias-corrected treatment effects for assignment of postdischarge interventions to prevent readmissions

Apr 19, 2023

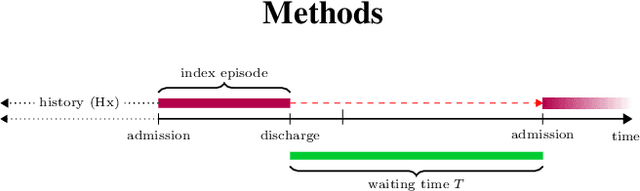

Abstract: We used survival analysis to quantify the impact of postdischarge evaluation and management (E/M) services in preventing hospital readmission or death. Our approach avoids a specific pitfall of applying machine learning to this problem: an inflated estimate of the effect of interventions due to survivor bias, where the magnitude of the inflation may depend on heterogeneous confounders in the population. This bias arises simply because, in order to receive an intervention after discharge, a person must not have been readmitted in the intervening period. After deriving an expression for this phantom effect, we controlled for this and other biases within an inherently interpretable Bayesian survival framework. We identified case management services as the most impactful for reducing readmissions overall, particularly for patients discharged to long-term care facilities, with high resource utilization in the quarter preceding admission.
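The phantom effect described in this abstract can be illustrated with a small simulation. The sketch below is not the authors' derivation or model. It assumes, purely for illustration, that the time to readmission and the time to a scheduled postdischarge visit are independent exponential draws, and that the visit has no true effect at all. The function name `simulate_phantom_effect` and all parameter values are hypothetical.

```python
import random


def simulate_phantom_effect(n=50_000, seed=0, horizon=30.0,
                            mean_days_to_event=60.0, mean_days_to_visit=14.0):
    """Naive 30-day event rates by visit status when the visit does nothing.

    Assumption (illustrative only): event and visit times are independent
    exponential variables, so the visit cannot change the event time.
    """
    rng = random.Random(seed)
    treated_n = treated_events = 0
    untreated_n = untreated_events = 0
    for _ in range(n):
        t_event = rng.expovariate(1.0 / mean_days_to_event)
        t_visit = rng.expovariate(1.0 / mean_days_to_visit)
        # A patient can only receive the visit if not yet readmitted.
        received_visit = t_visit < t_event
        event_in_window = t_event <= horizon
        if received_visit:
            treated_n += 1
            treated_events += event_in_window
        else:
            untreated_n += 1
            untreated_events += event_in_window
    return treated_events / treated_n, untreated_events / untreated_n


if __name__ == "__main__":
    treated_rate, untreated_rate = simulate_phantom_effect()
    print(f"visit received:     {treated_rate:.3f}")
    print(f"visit not received: {untreated_rate:.3f}")
```

Under these assumptions the naive comparison shows a lower event rate among patients who received the visit, even though the visit has zero effect by construction. The gap comes entirely from the requirement that a patient remain event-free long enough to be visited.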

Interpretable (not just posthoc-explainable) medical claims modeling for discharge placement to prevent avoidable all-cause readmissions or death

Aug 28, 2022

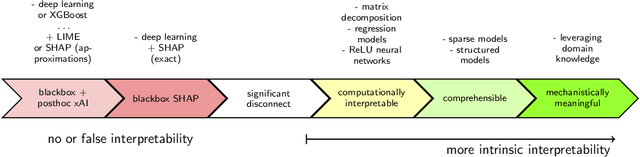

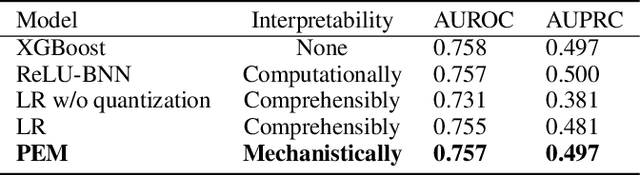

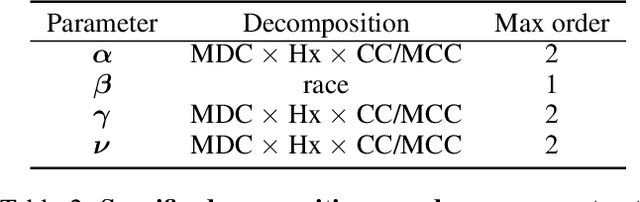

Abstract: This manuscript addresses the simultaneous problems of predicting all-cause inpatient readmission or death after discharge, and quantifying the impact of discharge placement in preventing these adverse events. To this end, we developed an inherently interpretable multilevel Bayesian modeling framework inspired by the piecewise linearity of ReLU-activated deep neural networks. In a survival model, we explicitly adjust for confounding in quantifying local average treatment effects for discharge placement interventions. We trained the model on a 5% sample of Medicare beneficiaries from 2008 and 2011, and then tested the model on 2012 claims. Evaluated on classification accuracy for 30-day all-cause unplanned readmissions (defined using official CMS methodology) or death, the model performed comparably to XGBoost, logistic regression (after feature engineering), and a Bayesian deep neural network trained on the same data. Tested on the 30-day classification task of predicting readmissions or death using left-out future data, the model achieved an AUROC of approximately 0.76 and an AUPRC of approximately 0.50 (relative to an overall positive rate in the testing data of 18%), demonstrating that one need not sacrifice interpretability for accuracy. Additionally, the model had a testing AUROC of 0.78 on the classification of 90-day all-cause unplanned readmission or death. We can easily peer into our inherently interpretable model and summarize its main findings. Additionally, we demonstrate how the black-box posthoc explainer tool SHAP generates explanations that are not supported by the fitted model -- and that, if taken at face value, do not offer enough context to make a model actionable.
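The piecewise linearity that the abstract cites as inspiration can be seen in a toy example. The sketch below is not the authors' model. It is a hypothetical one-input, three-unit ReLU network with made-up weights, showing that between consecutive kinks the output is an affine function whose slope can be read directly from the active units.

```python
def relu(z):
    return max(0.0, z)


# Hypothetical weights for a one-input network with three hidden ReLU units.
W = [1.0, -2.0, 0.5]   # input-to-hidden weights
B = [0.5, 1.0, -1.0]   # hidden biases
V = [1.5, -1.0, 2.0]   # hidden-to-output weights


def net(x):
    """Network output: weighted sum of ReLU activations."""
    return sum(v * relu(w * x + b) for w, b, v in zip(W, B, V))


def kinks():
    """Inputs where some hidden unit switches between off and on."""
    return sorted(-b / w for w, b in zip(W, B))


def local_slope(x):
    """Slope of the affine piece containing x: sum of v * w over active units."""
    return sum(v * w for w, b, v in zip(W, B, V) if w * x + b > 0)


if __name__ == "__main__":
    print("kinks:", kinks())
    # Between two consecutive kinks the finite-difference slope matches local_slope.
    print("slope on (0.5, 2.0):", (net(1.5) - net(1.0)) / 0.5, local_slope(1.25))
```

Within each interval between kinks, the set of active units is fixed, so the network reduces to an ordinary linear model whose coefficient can be inspected directly.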
