Sofien Dhouib

Meta Learning in Bandits within Shared Affine Subspaces

Mar 31, 2024

Abstract: We study the problem of meta-learning several contextual stochastic bandit tasks by leveraging their concentration around a low-dimensional affine subspace, which we learn via online principal component analysis to reduce the expected regret over the encountered bandits. We propose and theoretically analyze two strategies that solve the problem: one based on the principle of optimism in the face of uncertainty and the other on Thompson sampling. Our framework is generic and includes previously proposed approaches as special cases. Moreover, the empirical results show that our methods significantly reduce the regret on several bandit tasks.

Hypothesis Transfer in Bandits by Weighted Models

Nov 14, 2022

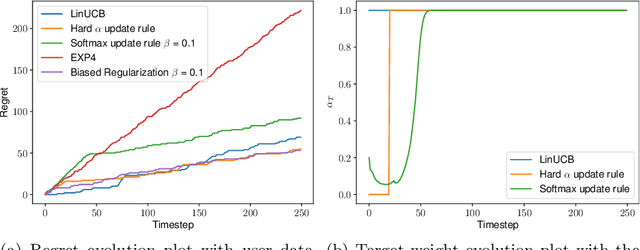

Abstract: We consider the problem of contextual multi-armed bandits in the setting of hypothesis transfer learning. That is, we assume access to a model previously learned on an unobserved set of contexts, and we leverage it to accelerate exploration on a new bandit problem. Our transfer strategy is based on a re-weighting scheme for which we show a reduction in the regret over the classic Linear UCB when transfer is desired, while recovering the classic regret rate when the two tasks are unrelated. We further extend this method to an arbitrary number of source models, where the algorithm decides which model is preferred at each time step. Additionally, we discuss an approach where a dynamic convex combination of source models is given in terms of a biased regularization term in the classic LinUCB algorithm. The algorithms and the theoretical analysis of our proposed methods are substantiated by empirical evaluations on simulated and real-world data.

Connecting sufficient conditions for domain adaptation: source-guided uncertainty, relaxed divergences and discrepancy localization

Mar 09, 2022

Abstract: Recent advances in domain adaptation establish that requiring a low risk on the source domain and equal feature marginals degrades the adaptation's performance. At the same time, empirical evidence shows that incorporating an unsupervised target domain term that pushes decision boundaries away from the high-density regions, along with relaxed alignment, improves adaptation. In this paper, we theoretically justify such observations via a new bound on the target risk, and we connect two notions of relaxation for divergence, namely $\beta$-relaxed divergences and localization. This connection allows us to incorporate the source domain's categorical structure into the relaxation of the considered divergence, provably resulting in better handling of the label shift case in particular.
