Sofia Gourtsoyianni

CEC-CNN: A Consecutive Expansion-Contraction Convolutional Network for Very Small Resolution Medical Image Classification

Sep 27, 2022

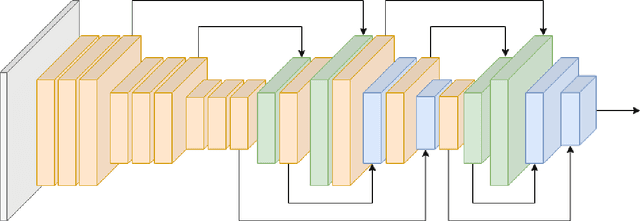

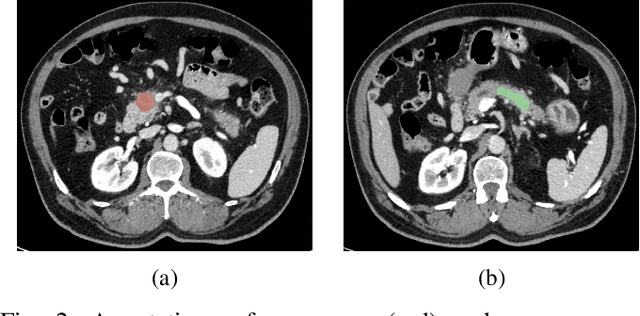

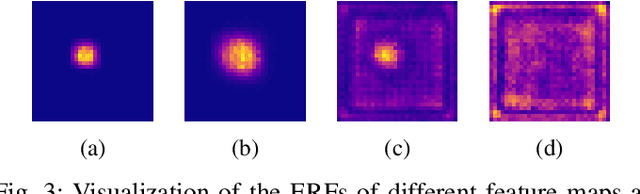

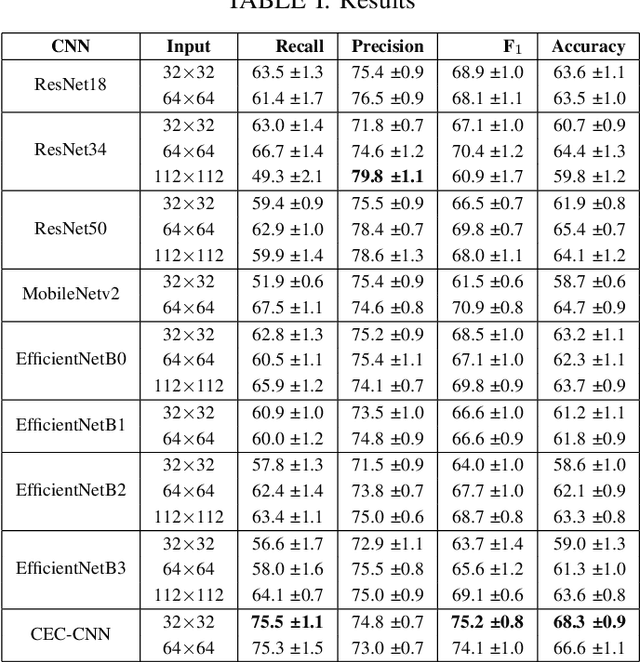

Abstract: Deep Convolutional Neural Networks (CNNs) for image classification alternate convolutions with downsampling operations, such as pooling layers or strided convolutions, so feature maps lose resolution as the network gets deeper. These downsampling operations save computational resources and provide some translational invariance as well as a larger receptive field at subsequent layers. However, an inherent side effect is that high-level features, produced at the deep end of the network, are always captured in low-resolution feature maps. The inverse is also true: shallow layers always contain small-scale features. In biomedical image analysis, engineers are often tasked with classifying very small image patches that carry only a limited amount of information. By their nature, these patches may not even contain objects, and the classification depends instead on detecting subtle underlying patterns of unknown scale in the image's texture. In these cases every bit of information is valuable, so it is important to extract as many informative features as possible. Driven by these considerations, we introduce a new CNN architecture that preserves multi-scale features from deep, intermediate, and shallow layers by using skip connections along with consecutive contractions and expansions of the feature maps. Using a dataset of very low-resolution patches from Pancreatic Ductal Adenocarcinoma (PDAC) CT scans, we demonstrate that our network can outperform current state-of-the-art models.
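The trade-off the abstract describes, where resolution shrinks while the receptive field grows, can be illustrated with simple arithmetic. The sketch below is not the paper's architecture: the 32-pixel patch size, the layer stack, and the helper name `trace_layers` are assumptions chosen only for illustration. It uses the standard output-size and receptive-field recurrences for convolution and pooling layers.

```python
from typing import List, Tuple

# (kernel size, stride, padding) for one conv or pooling layer
Layer = Tuple[int, int, int]


def trace_layers(input_size: int, layers: List[Layer]) -> List[Tuple[int, int]]:
    """Return (feature-map size, receptive field) after each layer, along one axis."""
    size = input_size
    receptive_field = 1  # a raw input pixel sees only itself
    jump = 1             # distance in input pixels between adjacent feature-map cells
    trace = []
    for kernel, stride, padding in layers:
        # Standard output-size formula for convolution and pooling
        size = (size + 2 * padding - kernel) // stride + 1
        # Each layer widens the receptive field by (kernel - 1) steps of the current jump
        receptive_field += (kernel - 1) * jump
        jump *= stride
        trace.append((size, receptive_field))
    return trace


# Hypothetical stack: three rounds of 3x3 convolution (padding 1) and 2x2 pooling
conv = (3, 1, 1)
pool = (2, 2, 0)
stack = [conv, pool, conv, pool, conv, pool]

for size, rf in trace_layers(32, stack):
    print(f"feature map: {size:2d} px, receptive field: {rf:2d} px")
```

Under these assumptions, a 32-pixel patch ends up as a 4-pixel feature map whose cells each cover 22 input pixels: the deepest features are the coarsest, which is the limitation the proposed architecture is meant to address.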
