Siwei Xiong

Towards Robust Stacked Capsule Autoencoder with Hybrid Adversarial Training

Mar 01, 2022

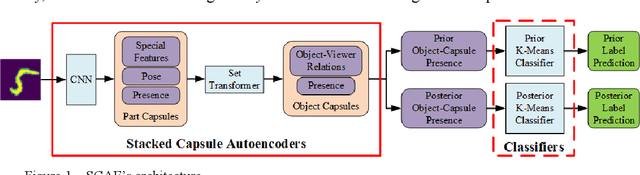

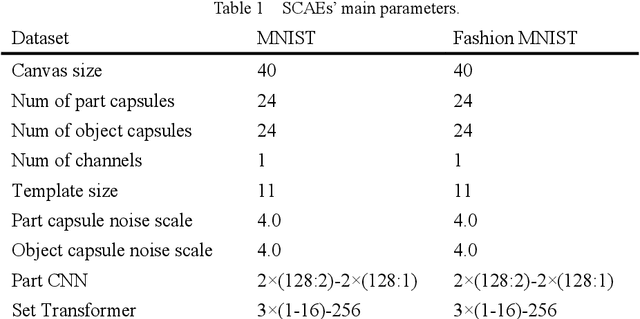

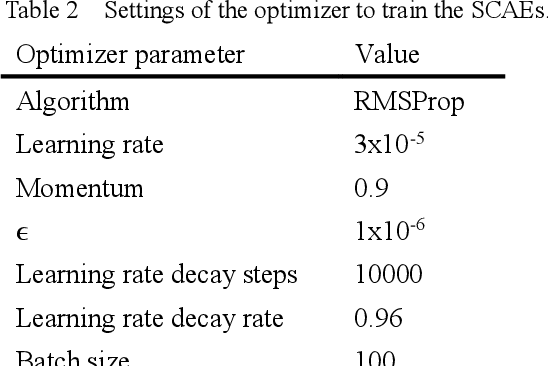

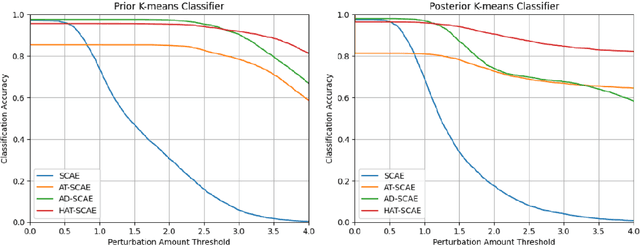

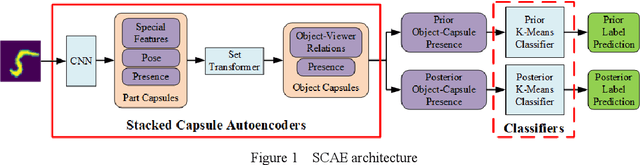

Abstract: Capsule networks (CapsNets) are new neural networks that classify images based on the spatial relationships of features. By analyzing the poses of features and their relative positions, they are better able to recognize images after affine transformations. The stacked capsule autoencoder (SCAE) is a state-of-the-art CapsNet and was the first to achieve unsupervised classification with CapsNets. However, the security vulnerabilities and robustness of the SCAE have rarely been explored. In this paper, we propose an evasion attack against the SCAE, in which the attacker generates adversarial perturbations by reducing the contribution of the object capsules related to the original category of the image. The adversarial perturbations are then applied to the original images, and the perturbed images are misclassified. Furthermore, we propose a defense method called Hybrid Adversarial Training (HAT) against such evasion attacks. HAT combines adversarial training and adversarial distillation to achieve better robustness and stability. We evaluate the defense method, and the experimental results show that the refined SCAE model achieves 82.14% classification accuracy under evasion attack. The source code is available at https://github.com/FrostbiteXSW/SCAE_Defense.

An Adversarial Attack against Stacked Capsule Autoencoder

Oct 19, 2020

Abstract: A capsule network is a kind of neural network that uses the spatial relationships between features to classify images. By capturing the poses of features and their relative positions, it handles affine transformations better and surpasses traditional convolutional neural networks (CNNs) when dealing with translation, rotation, and scaling. The Stacked Capsule Autoencoder (SCAE) is the state-of-the-art generation of capsule network. SCAE encodes an image as capsules, each of which contains the poses of features and their correlations. The encoded contents are then fed into a downstream classifier to predict the category of the image. Existing research mainly focuses on the security of capsule networks with dynamic routing or EM routing; little attention has been paid to the security and robustness of SCAE. In this paper, we propose an evasion attack against SCAE. A perturbation is generated with an optimization algorithm and added to an image to reduce the output of the capsules related to the image's original category. As the contribution of these capsules to the original class is reduced, the perturbed image is misclassified. We evaluate the attack with an image classification experiment on the MNIST dataset. The experimental results indicate that our attack achieves a success rate of around 99%.
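
The optimization step described in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the real SCAE encoder is replaced by a hypothetical linear map followed by a sigmoid (`capsule_presence`), and the loss (summed presence of the targeted capsules plus an L2 penalty weighted by `alpha`), the step size, and the choice of `target_caps` are all assumptions made for illustration.

```python
import numpy as np

def capsule_presence(x, W, b):
    # Hypothetical stand-in for the SCAE encoder (NOT the real model):
    # one sigmoid "presence" score per capsule.
    return 1.0 / (1.0 + np.exp(-(W @ x + b)))

def evasion_perturbation(x, W, b, target_caps, steps=200, lr=0.1, alpha=0.01):
    # Projected gradient descent on a perturbation p that minimizes
    #   sum_k presence_k(x + p) over target_caps  +  alpha * ||p||^2
    # (assumed loss; the abstract only says capsule output is reduced).
    p = np.zeros_like(x)
    for _ in range(steps):
        a = capsule_presence(x + p, W, b)[target_caps]
        # Chain rule through the sigmoid, plus the gradient of the L2 penalty.
        grad = W[target_caps].T @ (a * (1.0 - a)) + 2.0 * alpha * p
        p = p - lr * grad
        # Project so the perturbed image stays in the valid pixel range [0, 1].
        p = np.clip(x + p, 0.0, 1.0) - x
    return p

# Toy data: a 16-pixel "image" and 8 capsules, all randomly generated.
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=16)
W = 0.5 * rng.normal(size=(8, 16))
b = np.zeros(8)
target_caps = [0, 1, 2]  # capsules assumed to support the original class

p = evasion_perturbation(x, W, b, target_caps)
before = capsule_presence(x, W, b)[target_caps].sum()
after = capsule_presence(x + p, W, b)[target_caps].sum()
print(f"summed target presence: {before:.3f} -> {after:.3f}")
```

In this toy setting the summed presence of the targeted capsules drops after the perturbation is applied, which mirrors the mechanism the abstract describes: a smaller contribution from the capsules tied to the original class pushes a downstream classifier toward another label.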
