Siruo Zhou

Nine-year-old children outperformed ChatGPT in emotion: Evidence from Chinese writing

Oct 01, 2023

Abstract: ChatGPT has been shown to generate intricate, human-like text, and recent studies have established that its performance on theory of mind tasks is comparable to that of a nine-year-old child. However, it remains uncertain whether ChatGPT surpasses nine-year-old children in Chinese writing proficiency. To explore this, our study compared the Chinese writing of ChatGPT and nine-year-old children on both narrative and scientific topics, aiming to uncover ChatGPT's relative strengths and weaknesses in writing. The collected data were analyzed across five linguistic dimensions: fluency, accuracy, complexity, cohesion, and emotion. Each dimension was assessed with specific indices. The findings revealed that nine-year-old children outperformed ChatGPT in fluency and cohesion, whereas ChatGPT outperformed the children in accuracy. Concerning complexity, children exhibited superior skills in science-themed writing, while ChatGPT prevailed in nature-themed writing. Notably, this study is the first to show that nine-year-old children convey stronger emotions than ChatGPT in their Chinese compositions.

Chinese Intermediate English Learners outdid ChatGPT in deep cohesion: Evidence from English narrative writing

Mar 21, 2023

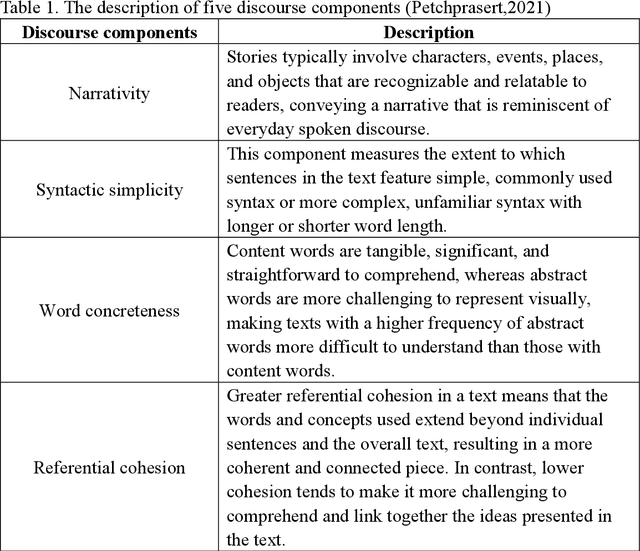

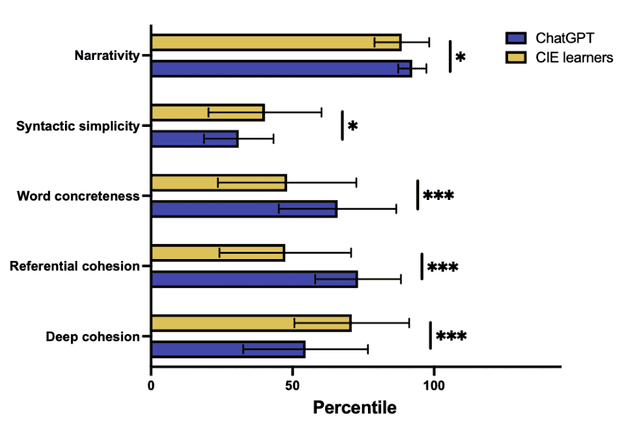

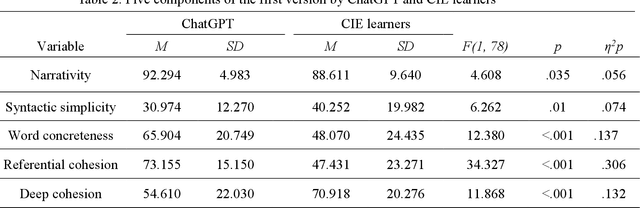

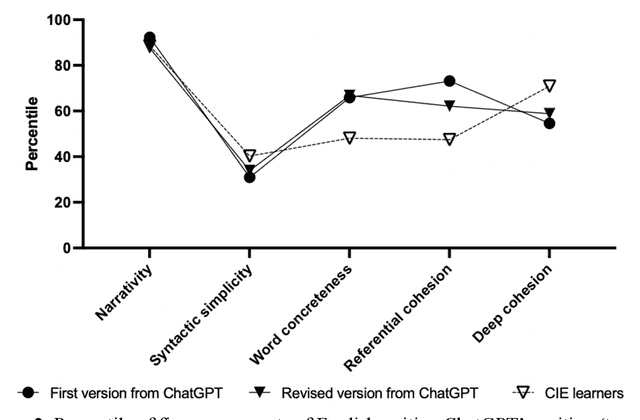

Abstract: ChatGPT is a publicly available chatbot that can quickly generate texts on given topics, but it is unknown whether the chatbot is really superior to human writers in all aspects of writing, or whether its writing quality can be markedly improved by updating the commands it is given. This study therefore compared the writing performance of ChatGPT and Chinese intermediate English (CIE) learners on a narrative topic to reveal the chatbot's advantages and disadvantages in writing. The data were analyzed in terms of five discourse components using Coh-Metrix (a dedicated instrument for analyzing language discourse). The results revealed that, in its initial version, ChatGPT performed better than human writers in narrativity, word concreteness, and referential cohesion, but worse in syntactic simplicity and deep cohesion. After further revision commands were given, the resulting version improved in syntactic simplicity, yet it still lagged far behind CIE learners' writing in deep cohesion. In addition, the correlation analysis of the discourse components suggests that narrativity was correlated with referential cohesion in both ChatGPT and human writers, but the correlations varied within each group.
