Sindre Benjamin Remman

Realistic Counterfactual Explanations for Machine Learning-Controlled Mobile Robots using 2D LiDAR

May 11, 2025

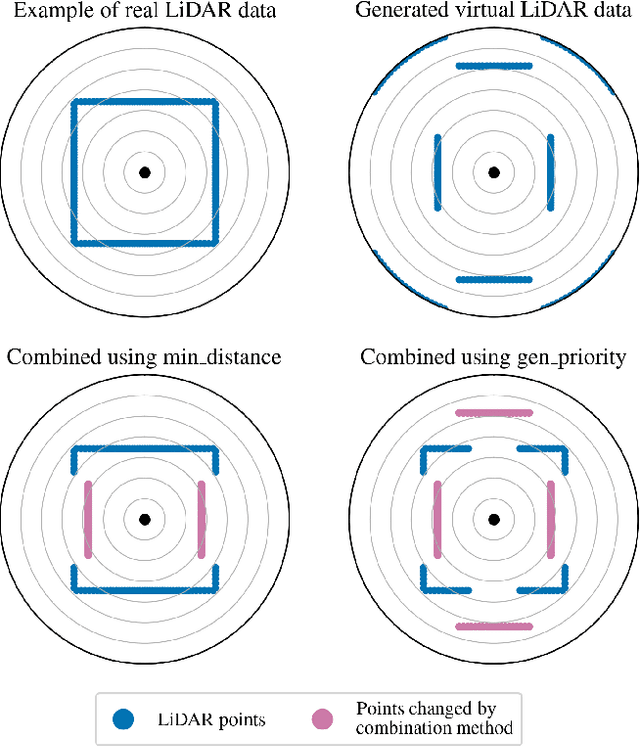

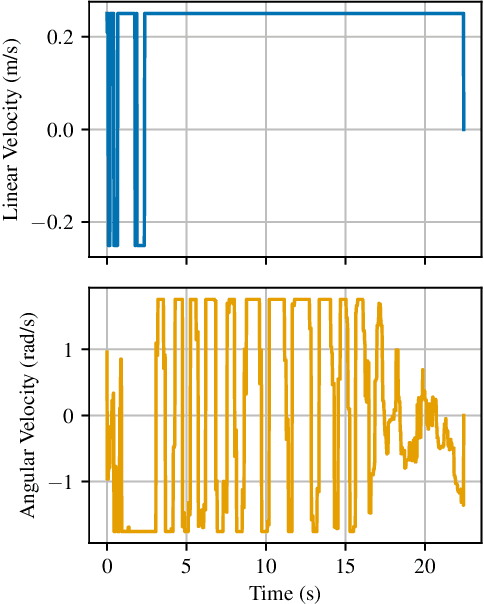

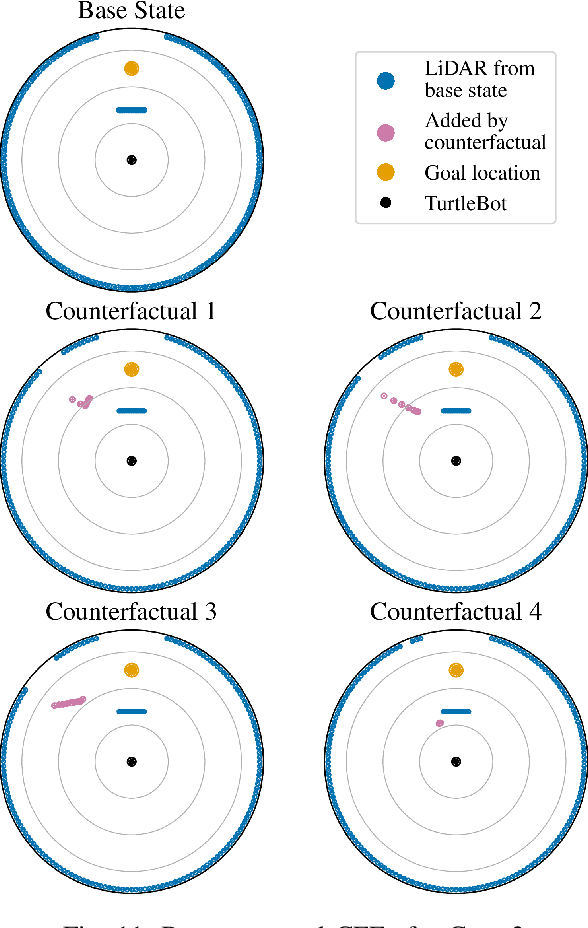

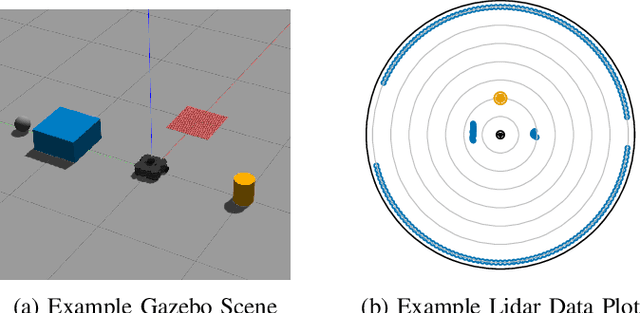

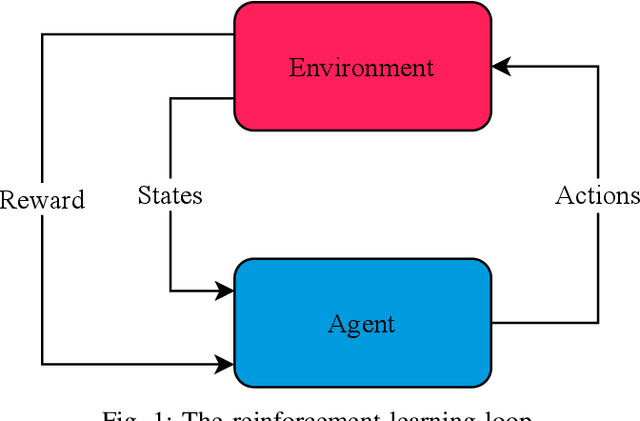

Abstract: This paper presents a novel method for generating realistic counterfactual explanations (CFEs) in machine learning (ML)-based control for mobile robots using 2D LiDAR. ML models, especially artificial neural networks (ANNs), can provide advanced decision-making and control capabilities by learning from data. However, they often function as black boxes, which makes them challenging to interpret. This is especially problematic in safety-critical control applications. To generate realistic CFEs, we parameterize the LiDAR space with simple shapes such as circles and rectangles, whose parameters are chosen by a genetic algorithm, and the configurations are transformed into LiDAR data by raycasting. Our model-agnostic approach generates CFEs in the form of synthetic LiDAR data that resembles a base LiDAR state but is modified to produce a pre-defined ML model control output based on a query from the user. We demonstrate our method on a mobile robot, the TurtleBot3, controlled using deep reinforcement learning (DRL) in real-world and simulated scenarios. Our method generates logical and realistic CFEs, which help to interpret the DRL agent's decision making. This paper contributes towards advancing explainable AI in mobile robotics, and our method could be a tool for understanding, debugging, and improving ML-based autonomous control.
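
The raycasting step mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `raycast_circles`, the `(cx, cy, r)` circle encoding, the beam count, and the `max_range` value are all assumptions made for illustration. It handles circles only and assumes the sensor sits at the origin, outside every circle.

```python
import math


def raycast_circles(circles, n_beams=360, max_range=3.5):
    """Convert a list of circles into a synthetic 2D LiDAR scan.

    circles: iterable of (cx, cy, r) tuples in the sensor frame
             (a hypothetical parameterization, not taken from the paper).
    Returns one range per beam; beams that hit nothing report max_range.
    """
    ranges = []
    for i in range(n_beams):
        theta = 2.0 * math.pi * i / n_beams
        dx, dy = math.cos(theta), math.sin(theta)  # unit beam direction
        best = max_range
        for cx, cy, r in circles:
            # Ray p(t) = t * d with |d| = 1. Intersections satisfy
            # t^2 - 2 t (d . c) + |c|^2 - r^2 = 0.
            b = dx * cx + dy * cy
            disc = b * b - (cx * cx + cy * cy - r * r)
            if disc < 0.0:
                continue  # the beam misses this circle
            t = b - math.sqrt(disc)  # nearer of the two intersections
            if 0.0 <= t < best:
                best = t
        ranges.append(best)
    return ranges


if __name__ == "__main__":
    scan = raycast_circles([(2.0, 0.0, 0.5)])
    print(scan[0], scan[90], scan[180])
```

With a single circle of radius 0.5 centred 2 units ahead, the forward beam returns a range of 1.5 and beams that miss the circle return `max_range`. In a setup like the one the abstract describes, a genetic algorithm would search over the circle parameters and score each resulting scan against the queried control output; that search is not shown here.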

Deep Reinforcement Learning Behavioral Mode Switching Using Optimal Control Based on a Latent Space Objective

Jun 03, 2024

Abstract: In this work, we use optimal control to change the behavior of a deep reinforcement learning policy by optimizing directly in the policy's latent space. We hypothesize that distinct behavioral patterns, termed behavioral modes, can be identified within certain regions of a deep reinforcement learning policy's latent space, meaning that specific actions or strategies are preferred within these regions. We identify these behavioral modes using latent space dimension-reduction with Pairwise Controlled Manifold Approximation Projection (PaCMAP). Using the actions generated by the optimal control procedure, we move the system from one behavioral mode to another. We subsequently utilize these actions as a filter for interpreting the neural network policy. The results show that this approach can impose desired behavioral modes in the policy, demonstrated by showing how a failed episode can be made successful and vice versa using the lunar lander reinforcement learning environment.

Discovering Behavioral Modes in Deep Reinforcement Learning Policies Using Trajectory Clustering in Latent Space

Feb 20, 2024

Abstract: Understanding the behavior of deep reinforcement learning (DRL) agents is crucial for improving their performance and reliability. However, the complexity of their policies often makes them challenging to understand. In this paper, we introduce a new approach for investigating the behavior modes of DRL policies, which involves utilizing dimensionality reduction and trajectory clustering in the latent space of neural networks. Specifically, we use Pairwise Controlled Manifold Approximation Projection (PaCMAP) for dimensionality reduction and TRACLUS for trajectory clustering to analyze the latent space of a DRL policy trained on the Mountain Car control task. Our methodology helps identify diverse behavior patterns and suboptimal choices by the policy, thus allowing for targeted improvements. We demonstrate how our approach, combined with domain knowledge, can enhance a policy's performance in specific regions of the state space.

Causal versus Marginal Shapley Values for Robotic Lever Manipulation Controlled using Deep Reinforcement Learning

Nov 04, 2021

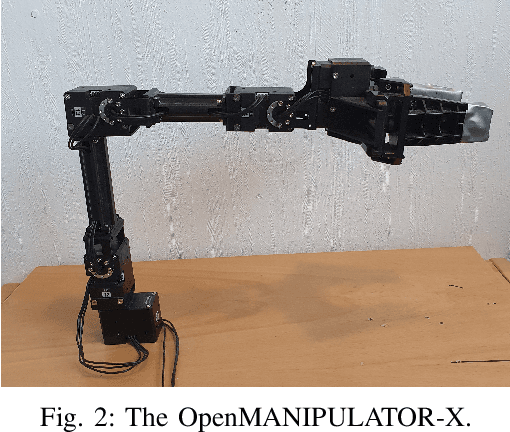

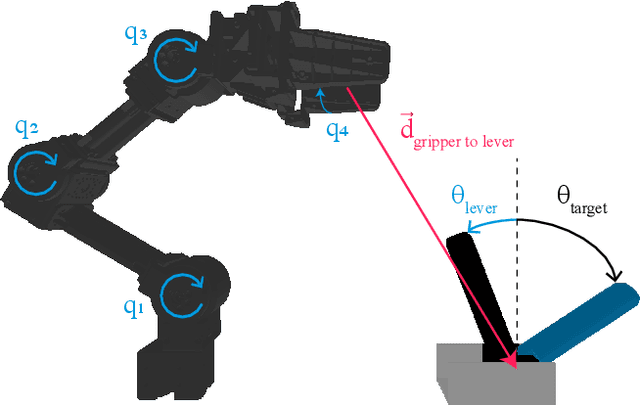

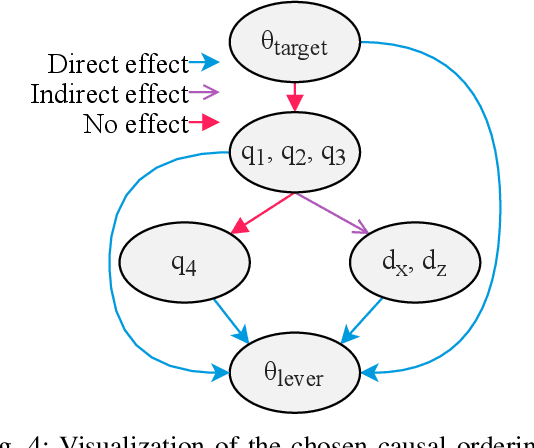

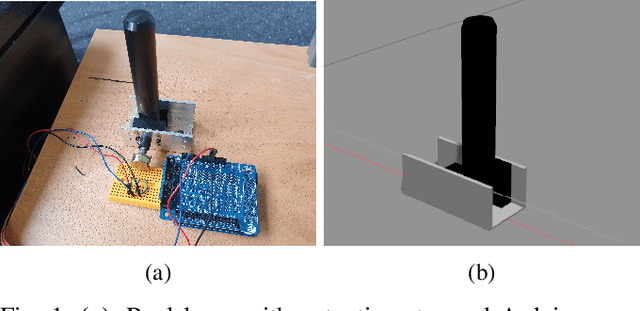

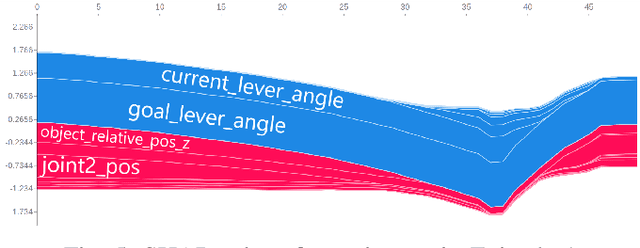

Abstract: We investigate the effect of including domain knowledge about a robotic system's causal relations when generating explanations. To this end, we compare two methods from explainable artificial intelligence, the popular KernelSHAP and the recent causal SHAP, on a deep neural network trained using deep reinforcement learning on the task of controlling a lever using a robotic manipulator. A primary disadvantage of KernelSHAP is that its explanations represent only the features' direct effects on a model's output, not considering the indirect effects a feature can have on the output by affecting other features. Causal SHAP uses a partial causal ordering to alter KernelSHAP's sampling procedure to incorporate these indirect effects. This partial causal ordering defines the causal relations between the features, and we specify this using domain knowledge about the lever control task. We show that enabling an explanation method to account for indirect effects and incorporating some domain knowledge can lead to explanations that better agree with human intuition. This is especially favorable for a real-world robotics task, where there is considerable causality at play, and in addition, the required domain knowledge is often handily available.
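
To make the notion of marginal Shapley values concrete, the following minimal sketch computes them exactly by enumerating feature subsets. It is not KernelSHAP (which approximates these values by sampling) and not causal SHAP. The function name `shapley_values`, the toy model, and the single-baseline value function (absent features are replaced by fixed baseline values, which treats features as independent) are assumptions made for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact marginal Shapley values of f at x relative to a baseline.

    Features outside a coalition are set to their baseline value, so
    only each feature's direct effect on the output is attributed.
    Cost grows as 2^n, so this is only practical for a few features.
    """
    n = len(x)

    def value(coalition):
        # Evaluate f with coalition features taken from x, the rest from baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for k in range(n):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


if __name__ == "__main__":
    # Toy model (hypothetical): one additive term and one interaction term.
    model = lambda z: 2.0 * z[0] + z[1] * z[2]
    print(shapley_values(model, [1.0, 1.0, 1.0], [0.0, 0.0, 0.0]))
```

For the toy model, the attributions sum to the difference between the model output at `x` and at the baseline, and the interaction term is split equally between the two features involved. Accounting for indirect effects, as causal SHAP does, would require changing how the absent features are filled in according to the causal ordering; that is not attempted here.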

Robotic Lever Manipulation using Hindsight Experience Replay and Shapley Additive Explanations

Oct 07, 2021

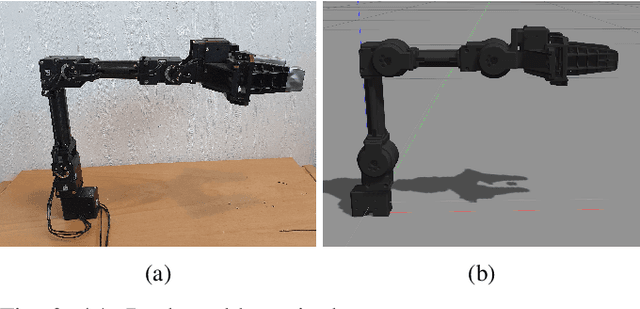

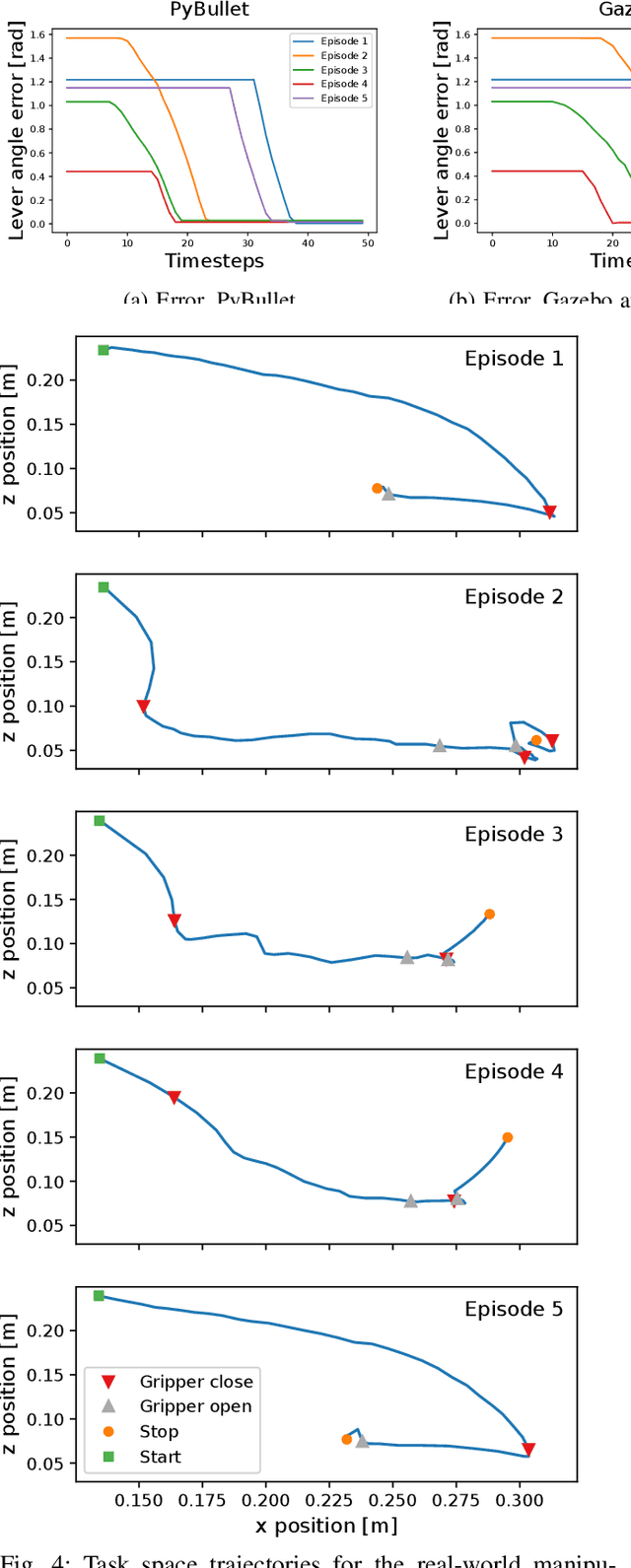

Abstract: This paper deals with robotic lever control using Explainable Deep Reinforcement Learning. First, we train a policy by using the Deep Deterministic Policy Gradient algorithm and the Hindsight Experience Replay technique, where the goal is to control a robotic manipulator to manipulate a lever. This enables us both to use continuous states and actions and to learn with sparse rewards. Being able to learn from sparse rewards is especially desirable for Deep Reinforcement Learning because designing a reward function for complex tasks such as this is challenging. We first train in the PyBullet simulator, which accelerates the training procedure, but is not accurate on this task compared to the real-world environment. After completing the training in PyBullet, we further train in the Gazebo simulator, which runs more slowly than PyBullet, but is more accurate on this task. We then transfer the policy to the real-world environment, where it achieves comparable performance to the simulated environments for most episodes. To explain the decisions of the policy we use the SHAP method to create an explanation model based on the episodes done in the real-world environment. This gives us some results that agree with intuition, and some that do not. We also question whether the independence assumption made when approximating the SHAP values influences the accuracy of these values for a system such as this, where there are some correlations between the states.
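
The way Hindsight Experience Replay lets an agent learn from sparse rewards can be sketched as a goal-relabeling step. This is an illustrative sketch only: the scalar lever-angle goal, the tolerance, the transition layout, and the choice of the "final" relabeling strategy are assumptions, not details taken from the paper.

```python
def sparse_reward(achieved, goal, tol=0.05):
    """Sparse reward: 0 when the goal is reached within tol, otherwise -1."""
    return 0.0 if abs(achieved - goal) <= tol else -1.0


def her_relabel(episode, tol=0.05):
    """Return original transitions plus copies relabeled with the final achieved goal.

    episode: list of (state, action, achieved_next, goal) tuples, where
             achieved_next and goal are scalars such as a lever angle
             (a hypothetical layout chosen for this sketch).
    Each output transition is (state, action, achieved_next, goal, reward).
    """
    hindsight_goal = episode[-1][2]  # pretend the final outcome was the goal
    replay = []
    for state, action, achieved_next, goal in episode:
        # Original transition, usually with reward -1 in a failed episode.
        replay.append((state, action, achieved_next, goal,
                       sparse_reward(achieved_next, goal, tol)))
        # Hindsight transition: same experience, substituted goal.
        replay.append((state, action, achieved_next, hindsight_goal,
                       sparse_reward(achieved_next, hindsight_goal, tol)))
    return replay


if __name__ == "__main__":
    failed = [(0.0, 0.1, 0.1, 1.0), (0.1, 0.1, 0.2, 1.0), (0.2, 0.1, 0.3, 1.0)]
    for transition in her_relabel(failed):
        print(transition)
```

In this example the episode never reaches the original goal, so every original transition carries reward -1, while the relabeled copy of the last transition carries reward 0. An off-policy learner such as Deep Deterministic Policy Gradient can then sample both kinds of transition from the replay buffer.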
