Sinan Faouri

Examining stability of machine learning methods for predicting dementia at early phases of the disease

Sep 10, 2022

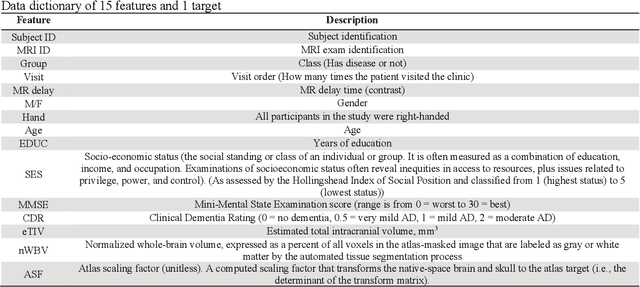

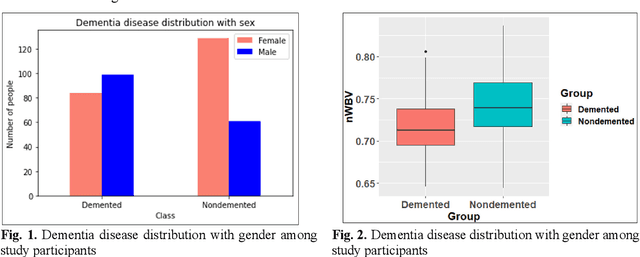

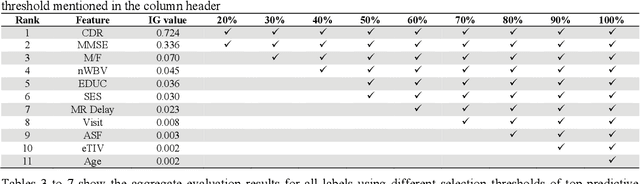

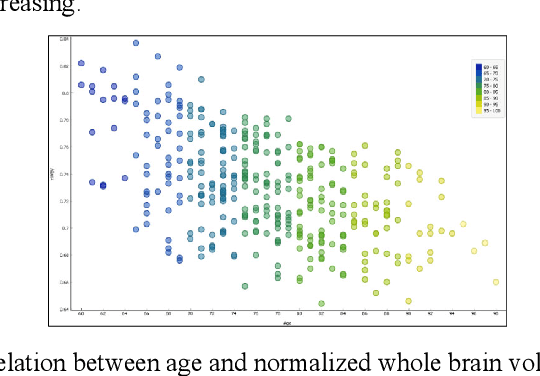

Abstract:Dementia is a neuropsychiatric brain disorder that usually occurs when one or more brain cells stop working partially or at all. Diagnosis of this disorder in the early phases of the disease is a vital task to rescue patients lives from bad consequences and provide them with better healthcare. Machine learning methods have been proven to be accurate in predicting dementia in the early phases of the disease. The prediction of dementia depends heavily on the type of collected data which usually are gathered from Normalized Whole Brain Volume (nWBV) and Atlas Scaling Factor (ASF) which are normally measured and corrected from Magnetic Resonance Imaging (MRIs). Other biological features such as age and gender can also help in the diagnosis of dementia. Although many studies use machine learning for predicting dementia, we could not reach a conclusion on the stability of these methods for which one is more accurate under different experimental conditions. Therefore, this paper investigates the conclusion stability regarding the performance of machine learning algorithms for dementia prediction. To accomplish this, a large number of experiments were run using 7 machine learning algorithms and two feature reduction algorithms namely, Information Gain (IG) and Principal Component Analysis (PCA). To examine the stability of these algorithms, thresholds of feature selection were changed for the IG from 20% to 100% and the PCA dimension from 2 to 8. This has resulted in 7x9 + 7x7= 112 experiments. In each experiment, various classification evaluation data were recorded. The obtained results show that among seven algorithms the support vector machine and Naive Bayes are the most stable algorithms while changing the selection threshold. Also, it was found that using IG would seem more efficient than using PCA for predicting Dementia.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge