Shunsuke Sakai

Few-shot Human Action Anomaly Detection via a Unified Contrastive Learning Framework

Aug 25, 2025

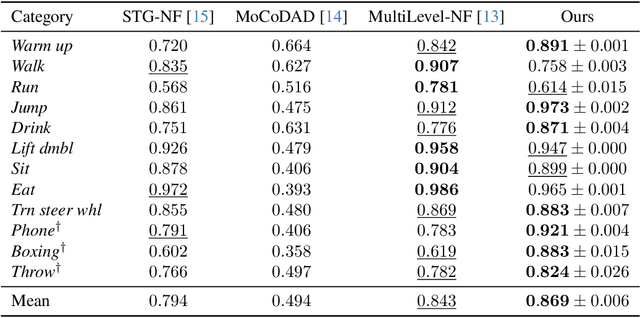

Abstract: Human Action Anomaly Detection (HAAD) aims to identify anomalous actions given only normal action data during training. Existing methods typically follow a one-model-per-category paradigm, requiring separate training for each action category and a large number of normal samples. These constraints hinder scalability and limit applicability in real-world scenarios, where data is often scarce or novel categories frequently appear. To address these limitations, we propose a unified framework for HAAD that is compatible with few-shot scenarios. Our method constructs a category-agnostic representation space via contrastive learning, enabling anomaly detection (AD) by comparing test samples with a given small set of normal examples (referred to as the support set). To improve inter-category generalization and intra-category robustness, we introduce a generative motion augmentation strategy harnessing a diffusion-based foundation model for creating diverse and realistic training samples. Notably, to the best of our knowledge, our work is the first to introduce such a strategy specifically tailored to enhance contrastive learning for action AD. Extensive experiments on the HumanAct12 dataset demonstrate the state-of-the-art effectiveness of our approach under both seen and unseen category settings, regarding training efficiency and model scalability for few-shot HAAD.
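As a rough illustration of comparing a test sample with a support set, the sketch below scores a test embedding by its distance to the closest support embedding. The scoring rule (one minus the maximum cosine similarity) and the names `cosine_similarity` and `anomaly_score` are assumptions made for illustration; the abstract does not specify the scoring function, and the embeddings here are toy vectors rather than outputs of a trained encoder.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def anomaly_score(test_embedding, support_embeddings):
    """Assumed rule: 1 - similarity to the most similar support example."""
    best = max(cosine_similarity(test_embedding, s) for s in support_embeddings)
    return 1.0 - best

# Toy 2-D embeddings standing in for encoder outputs (hypothetical values).
support = [[1.0, 0.0], [0.9, 0.1]]
normal_like = [0.95, 0.05]
anomalous = [0.0, 1.0]

print(anomaly_score(normal_like, support))
print(anomaly_score(anomalous, support))
```

Under this assumed rule, a sample that lies near any support example receives a low score, so a new category only needs a new support set and no retraining.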

Contrastive Learning-Enhanced Trajectory Matching for Small-Scale Dataset Distillation

May 21, 2025

Abstract: Deploying machine learning models in resource-constrained environments, such as edge devices or rapid prototyping scenarios, increasingly demands distillation of large datasets into significantly smaller yet informative synthetic datasets. Current dataset distillation techniques, particularly Trajectory Matching methods, optimize synthetic data so that the model's training trajectory on synthetic samples mirrors that on real data. While demonstrating efficacy on medium-scale synthetic datasets, these methods fail to adequately preserve semantic richness under extreme sample scarcity. To address this limitation, we propose a novel dataset distillation method integrating contrastive learning during image synthesis. By explicitly maximizing instance-level feature discrimination, our approach produces more informative and diverse synthetic samples, even when dataset sizes are significantly constrained. Experimental results demonstrate that incorporating contrastive learning substantially enhances the performance of models trained on very small-scale synthetic datasets. This integration not only guides more effective feature representation but also significantly improves the visual fidelity of the synthesized images. Overall, our method achieves notable performance improvements over existing distillation techniques, especially in scenarios with extremely limited synthetic data.

Analytical Softmax Temperature Setting from Feature Dimensions for Model- and Domain-Robust Classification

Apr 22, 2025

Abstract: In deep learning-based classification tasks, the softmax function's temperature parameter $T$ critically influences the output distribution and overall performance. This study presents a novel theoretical insight that the optimal temperature $T^*$ is uniquely determined by the dimensionality of the feature representations, thereby enabling training-free determination of $T^*$. Despite this theoretical grounding, empirical evidence reveals that $T^*$ fluctuates under practical conditions owing to variations in models, datasets, and other confounding factors. To address these influences, we propose and optimize a set of temperature determination coefficients that specify how $T^*$ should be adjusted based on the theoretical relationship to feature dimensionality. Additionally, we insert a batch normalization layer immediately before the output layer, effectively stabilizing the feature space. Building on these coefficients and a suite of large-scale experiments, we develop an empirical formula to estimate $T^*$ without additional training while also introducing a corrective scheme to refine $T^*$ based on the number of classes and task complexity. Our findings confirm that the derived temperature not only aligns with the proposed theoretical perspective but also generalizes effectively across diverse tasks, consistently enhancing classification performance and offering a practical, training-free solution for determining $T^*$.
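To make the role of $T$ concrete, the following minimal sketch shows only the standard temperature-scaled softmax, in which the logits are divided by $T$ before normalization. It does not implement the paper's formula for $T^*$, which the abstract does not state; the function name and the example logits are illustrative assumptions.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Standard softmax applied to logits / temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    shift = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # hypothetical classifier outputs
sharp = softmax_with_temperature(logits, 0.5)
flat = softmax_with_temperature(logits, 5.0)

print(sharp)
print(flat)
```

A small temperature concentrates probability on the largest logit, while a large one flattens the distribution toward uniform, which is why the choice of $T$ affects both training dynamics and calibration.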

Noisy Deep Ensemble: Accelerating Deep Ensemble Learning via Noise Injection

Apr 08, 2025

Abstract: Neural network ensembling is a simple yet effective approach for enhancing generalization capabilities. The most common method involves independently training multiple neural networks initialized with different weights and then averaging their predictions during inference. However, this approach increases training time linearly with the number of ensemble members. To address this issue, we propose the novel "Noisy Deep Ensemble" method, significantly reducing the training time required for neural network ensembles. In this method, a parent model is trained until convergence, and then the weights of the parent model are perturbed in various ways to construct multiple child models. This perturbation of the parent model weights facilitates the exploration of different local minima while significantly reducing the training time for each ensemble member. We evaluated our method using diverse CNN architectures on CIFAR-10 and CIFAR-100 datasets, surpassing conventional efficient ensemble methods and achieving test accuracy comparable to standard ensembles. Code is available at https://github.com/TSTB-dev/NoisyDeepEnsemble
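The parent-to-child construction can be sketched on a toy linear model as follows. This is only an illustration under stated assumptions: additive Gaussian noise is one possible perturbation (the abstract says only that weights are perturbed "in various ways"), any further training of the child models is omitted, and the names `make_child_weights`, `predict`, and `ensemble_predict` are hypothetical rather than taken from the linked repository.

```python
import random

def make_child_weights(parent_weights, noise_std, rng):
    """Assumed perturbation: add independent Gaussian noise to each weight."""
    return [w + rng.gauss(0.0, noise_std) for w in parent_weights]

def predict(weights, features):
    """Toy linear model standing in for a neural network."""
    return sum(w * x for w, x in zip(weights, features))

def ensemble_predict(children, features):
    """Average the predictions of all child models."""
    return sum(predict(c, features) for c in children) / len(children)

rng = random.Random(0)      # fixed seed for reproducibility
parent = [0.5, -1.0, 2.0]   # hypothetical converged parent weights
children = [make_child_weights(parent, 0.05, rng) for _ in range(4)]

features = [1.0, 1.0, 1.0]
print(predict(parent, features))
print(ensemble_predict(children, features))
```

Because each child starts from the converged parent rather than from a fresh initialization, the expensive training run is paid once instead of once per ensemble member.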

Reconstruction-Free Anomaly Detection with Diffusion Models via Direct Latent Likelihood Evaluation

Apr 08, 2025

Abstract: Diffusion models, with their robust distribution approximation capabilities, have demonstrated excellent performance in anomaly detection. However, conventional reconstruction-based approaches rely on computing the reconstruction error between the original and denoised images, which requires careful noise-strength tuning and over ten network evaluations per input, leading to significantly slower detection speeds. To address these limitations, we propose a novel diffusion-based anomaly detection method that circumvents the need for resource-intensive reconstruction. Instead of reconstructing the input image, we directly infer its corresponding latent variables and measure their density under the Gaussian prior distribution. Remarkably, the prior density proves effective as an anomaly score even when using a short partial diffusion process of only 2-5 steps. We evaluate our method on the MVTecAD dataset, achieving an AUC of 0.991 at 15 FPS, thereby setting a new state-of-the-art speed-AUC anomaly detection trade-off.
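The scoring step described here, measuring a latent's density under a Gaussian prior, can be sketched as the negative log-density under a standard normal $\mathcal{N}(0, I)$. The sketch assumes the latent has already been inferred; the partial diffusion process that produces it is not shown, and the function name and toy latents are illustrative assumptions.

```python
import math

def gaussian_prior_nll(latent):
    """Negative log-density of a latent vector under N(0, I).

    Equals 0.5 * ||z||^2 + 0.5 * d * log(2 * pi) for a d-dimensional z.
    A higher value means lower prior density, i.e. more anomalous.
    """
    d = len(latent)
    squared_norm = sum(z * z for z in latent)
    return 0.5 * squared_norm + 0.5 * d * math.log(2.0 * math.pi)

# Toy latents standing in for inferred diffusion latents (hypothetical values).
typical_latent = [0.1, -0.2, 0.05]
atypical_latent = [3.0, -4.0, 2.5]

print(gaussian_prior_nll(typical_latent))
print(gaussian_prior_nll(atypical_latent))
```

Since the score is a closed-form function of the latent, the only network evaluations needed are those used to infer the latent itself, which is consistent with the speed advantage the abstract reports.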

LADMIM: Logical Anomaly Detection with Masked Image Modeling in Discrete Latent Space

Oct 14, 2024

Abstract: Detecting anomalies such as incorrect combinations of objects or deviations in their positions is a challenging problem in industrial anomaly detection. Traditional methods mainly focus on local features of normal images, such as scratches and dirt, which makes it difficult to detect anomalies in the relationships between features. Masked image modeling (MIM) is a self-supervised learning technique that predicts the feature representation of masked regions in an image. To reconstruct the masked regions, it is necessary to understand how the image is composed, allowing the learning of relationships between features within the image. We propose a novel approach that leverages the characteristics of MIM to detect logical anomalies effectively. To address blurriness in the reconstructed image, we replace pixel prediction with predicting the probability distribution of discrete latent variables of the masked regions using a tokenizer. We evaluated the proposed method on the MVTecLOCO dataset, achieving an average AUC of 0.867, surpassing traditional reconstruction-based and distillation-based methods.
