Shruti Jaiswal

Hyperparameters optimization for Deep Learning based emotion prediction for Human Robot Interaction

Jan 12, 2020

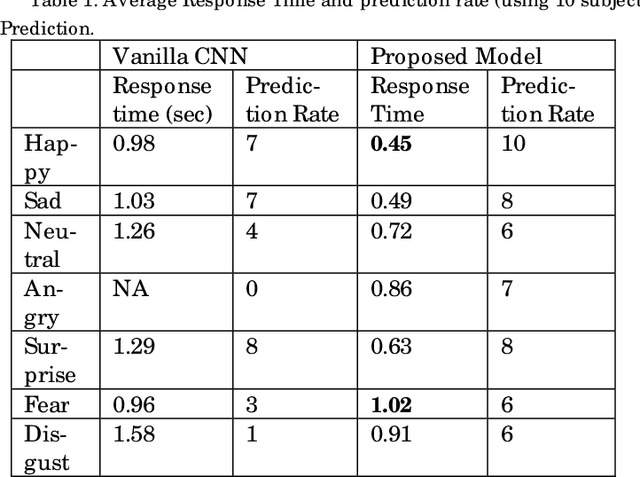

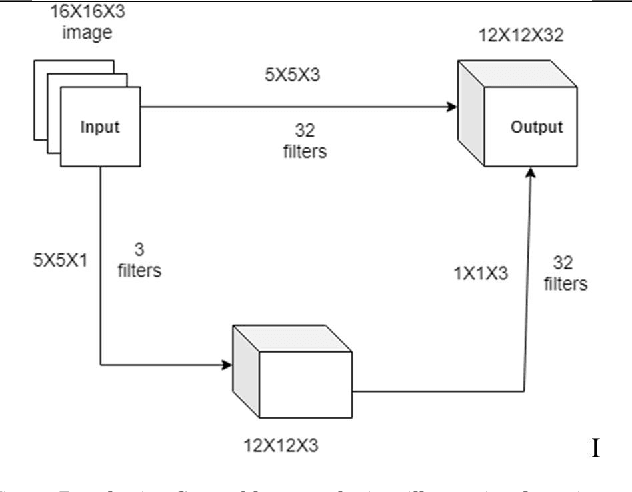

Abstract: To enable humanoid robots to share our social space, we need to develop technology for easy interaction with robots through multiple modes, such as speech and gestures, and for sharing our emotions with them. This research addresses the core problem of emotion recognition with a model that requires fewer computational resources and far fewer network hyperparameters, making it better suited to low-resource social robots for real-time communication. More specifically, we propose an Inception-module-based Convolutional Neural Network architecture that improves accuracy by up to 6% over the existing network architecture for emotion classification when tested on a combination of multiple datasets and on humanoid robots in real time. The proposed model reduces the trainable hyperparameters by 94% compared to a vanilla CNN model, which indicates that it can be used in real-time applications such as human-robot interaction. Rigorous experiments have been performed to validate our methodology, which is sufficiently robust and achieves a high level of accuracy. Finally, the model is implemented on a humanoid robot, NAO, in real time, and its robustness is evaluated.
