Shizhao Wang

asKAN: Active Subspace embedded Kolmogorov-Arnold Network

Apr 09, 2025

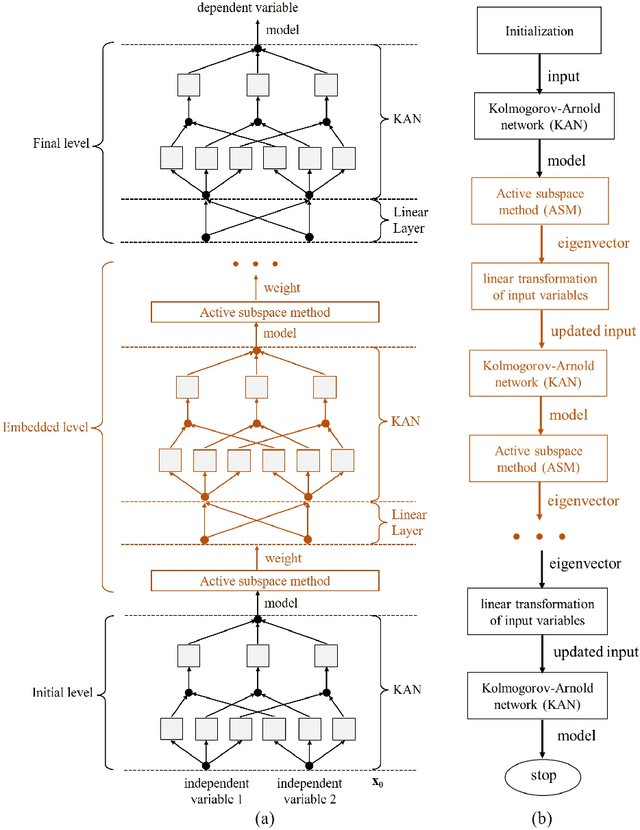

Abstract: The Kolmogorov-Arnold Network (KAN) has emerged as a promising neural network architecture for small-scale AI+Science applications. However, it is inflexible in modeling ridge functions, which are widely used to represent relationships in physical systems. This study investigates this inflexibility through the lens of the Kolmogorov-Arnold theorem, which builds the representation of a multivariate function from univariate components rather than from combinations of the independent variables. Our analysis reveals that incorporating linear combinations of the independent variables can substantially simplify the network architecture needed to represent ridge functions. Inspired by this finding, we propose the active subspace embedded KAN (asKAN), a hierarchical framework that combines KAN's function representation with the active subspace methodology. The architecture embeds active subspace detection between KANs: the active subspace method identifies the primary ridge directions, and the independent variables are adaptively projected onto these critical directions. The proposed asKAN is implemented iteratively without increasing the number of neurons in the original KAN. The method is validated through function fitting, solving the Poisson equation, and reconstructing a sound field. Compared with KAN, asKAN significantly reduces the error while using the same network architecture. The results suggest that asKAN enhances the capability of KAN in fitting functions and solving equations that take the form of ridge functions.
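To make the notion of a "primary ridge direction" concrete, the following is a minimal sketch of standard active subspace detection on a toy ridge function f(x) = sin(w · x). It is not the asKAN implementation: the test function, the direction `w_true`, the sampling range, the finite-difference gradients, and the power iteration are all assumptions chosen for illustration. The sketch estimates the gradient covariance matrix C = E[∇f ∇fᵀ] by sampling and takes its leading eigenvector as the dominant direction.

```python
import math
import random


def ridge(x, w):
    """Toy ridge function f(x) = sin(w . x); w is a hypothetical direction."""
    return math.sin(sum(wi * xi for wi, xi in zip(w, x)))


def grad_fd(f, x, h=1e-5):
    """Central finite-difference gradient of f at the point x."""
    grad = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        grad.append((f(xp) - f(xm)) / (2.0 * h))
    return grad


def gradient_covariance(f, dim, n_samples=200, seed=0):
    """Monte Carlo estimate of C = E[grad f grad f^T] over [-1, 1]^dim."""
    rng = random.Random(seed)
    C = [[0.0] * dim for _ in range(dim)]
    for _ in range(n_samples):
        x = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        g = grad_fd(f, x)
        for i in range(dim):
            for j in range(dim):
                C[i][j] += g[i] * g[j] / n_samples
    return C


def leading_eigenvector(C, iters=200):
    """Power iteration for the dominant eigenvector of a symmetric matrix."""
    dim = len(C)
    v = [1.0 / math.sqrt(dim)] * dim
    for _ in range(iters):
        u = [sum(C[i][j] * v[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(ui * ui for ui in u))
        v = [ui / norm for ui in u]
    return v


# Assumed unit-norm ridge direction; the third input is inactive.
w_true = [0.6, 0.8, 0.0]
C = gradient_covariance(lambda x: ridge(x, w_true), dim=3)
direction = leading_eigenvector(C)

# |cosine| between the recovered direction and w_true.
alignment = abs(sum(a * b for a, b in zip(direction, w_true)))
print("recovered direction:", direction)
print("alignment with w_true:", alignment)
```

Because the gradient of a single ridge function is always parallel to w, the estimated C is essentially rank one and the recovered direction should align closely with `w_true`. Projecting the inputs onto such a direction is the kind of linear combination of independent variables that the abstract argues simplifies the network.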
