Shivsubramani Krishnamoorthy

Stain Normalized Breast Histopathology Image Recognition using Convolutional Neural Networks for Cancer Detection

Jan 04, 2022

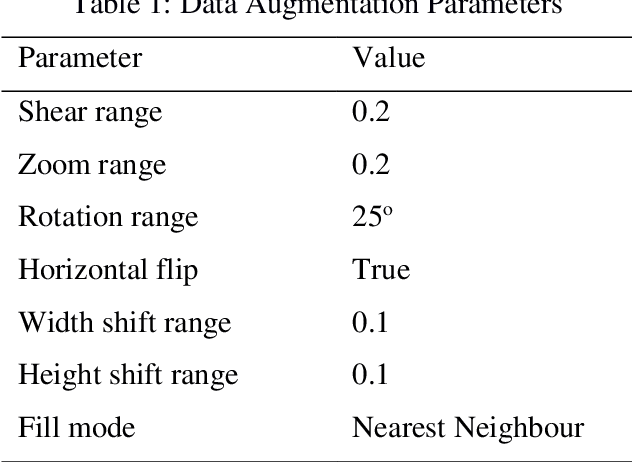

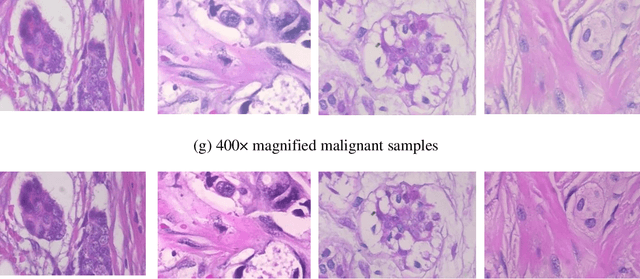

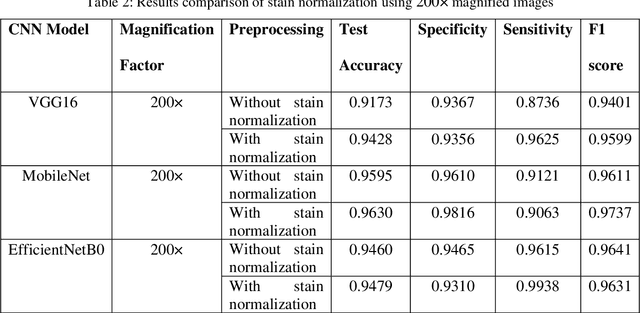

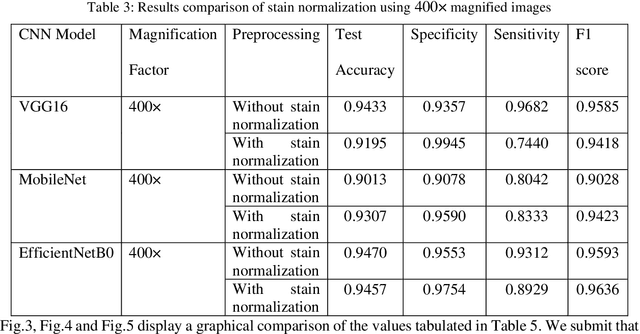

Abstract: Computer-assisted diagnosis in digital pathology is becoming ubiquitous as it can provide more efficient and objective healthcare diagnostics. Recent advances have shown that convolutional neural network (CNN) architectures, a well-established deep learning paradigm, can be used to design a computer-aided diagnostic (CAD) system for breast cancer detection. However, the challenges due to stain variability and the effect of stain normalization with such deep learning frameworks are yet to be well explored. Moreover, performance analysis with arguably more efficient network models, which may be important for high-throughput screening, is also not well explored. To address these challenges, we consider some contemporary CNN models for binary classification of breast histopathology images, which involves (1) data preprocessing with stain-normalized images using an adaptive colour deconvolution (ACD) based colour normalization algorithm to handle stain variability; and (2) transfer-learning-based training of some arguably more efficient CNN models, namely Visual Geometry Group Network (VGG16), MobileNet and EfficientNet. We validated the trained CNNs on the publicly available BreaKHis dataset, for 200x and 400x magnified histopathology images. The experimental analysis shows that, in most cases, pretrained networks yield better results on data-augmented breast histopathology images with stain normalization than without it. Further, we evaluated the performance and efficiency of popular lightweight networks using stain-normalized images and found that EfficientNet outperforms VGG16 and MobileNet in terms of test accuracy and F1 score. We also observed that EfficientNet has a better test time than the other networks (VGG16 and MobileNet), without much drop in classification accuracy.

Modeling context and situations in pervasive computing environments

Mar 24, 2015

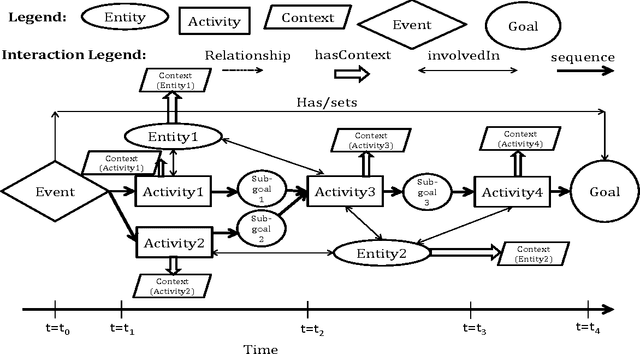

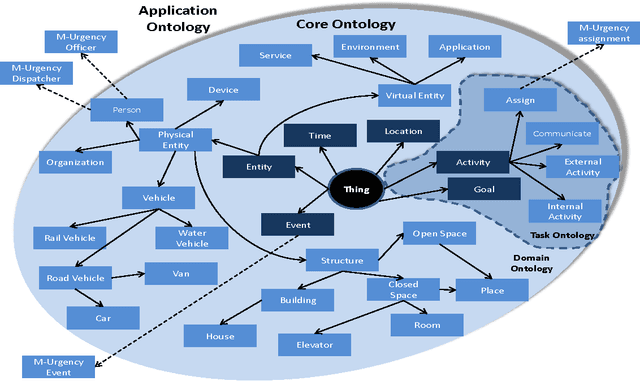

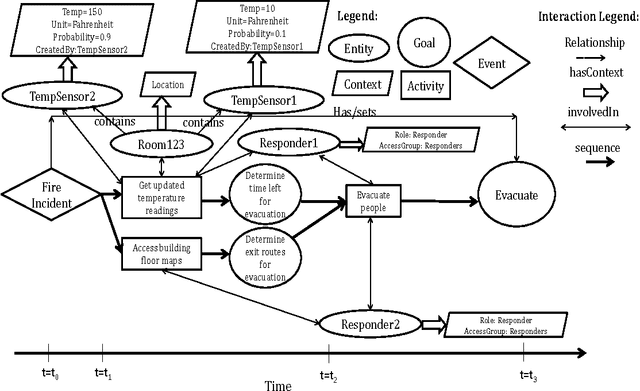

Abstract: In pervasive computing environments, various entities often have to cooperate and integrate seamlessly in a *situation*, which can thus be considered an amalgamation of the context of several entities interacting and coordinating with each other, often while performing one or more activities. However, none of the existing context models and ontologies address situation modeling. In this paper, we describe the design, structure and implementation of a generic, flexible and extensible context ontology called Rover Context Model Ontology (RoCoMO) for context and situation modeling in pervasive computing systems and environments. We highlight several limitations of the existing context models and ontologies, such as the lack of provision for provenance, traceability, quality of context and multiple representations of contextual information, as well as the lack of support for security, privacy and interoperability, and explain how we address these limitations in our approach. We also illustrate the applicability and utility of RoCoMO using a practical and extensive case study.
