Shivani Shimpi

Multimodal Depression Severity Prediction from medical bio-markers using Machine Learning Tools and Technologies

Oct 06, 2020

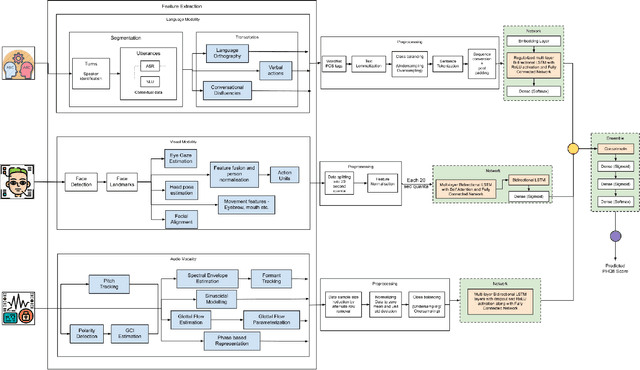

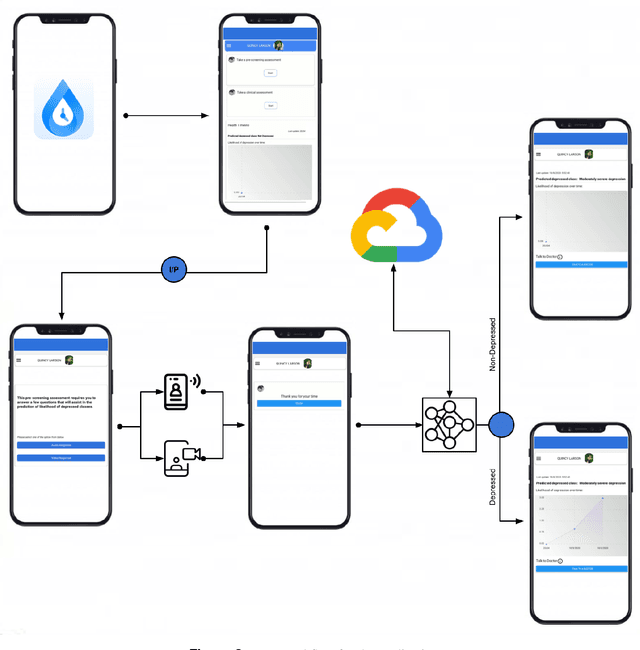

Abstract: Depression is a leading cause of mental illness worldwide. The loss of life due to unmanaged depression receives attention, and so do the lack of diagnostic tests and the subjectivity involved in diagnosis. The use of behavioural cues to automate depression diagnosis and stage prediction has increased in recent years. However, the absence of labelled behavioural datasets and the vast number of possible variations remain major challenges. This paper proposes a novel Custom CM Ensemble approach and focuses on a cross-platform smartphone application that collects multimodal inputs from a user through a series of pre-defined questions, sends them to a Cloud ML architecture, and returns a depression quotient representing the severity of the depression. The app estimates severity with a multi-class classification model that uses the language, audio, and visual modalities. The approach attempts to detect, emphasize, and classify the features of a depressed person based on low-level descriptors for the verbal and visual features and on the context of the language features when the user is prompted with a question. The model achieved a precision of 0.88 and an accuracy of 91.56%. Further optimization reveals the intramodality and intermodality relevance through selection of the most influential features within each modality for decision making.
