
Sheng-Wei Chen

BDA-PCH: Block-Diagonal Approximation of Positive-Curvature Hessian for Training Neural Networks

Feb 20, 2018

Figures and Tables:

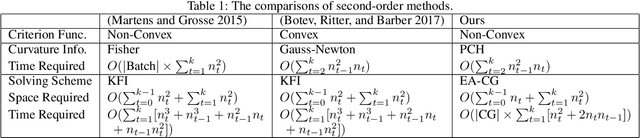

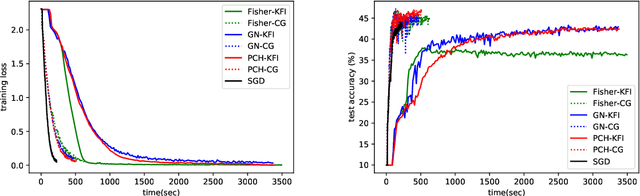

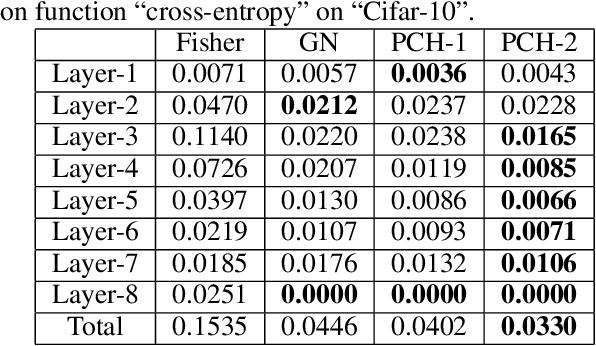

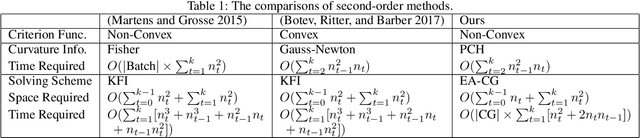

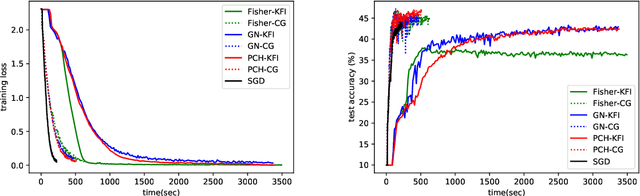

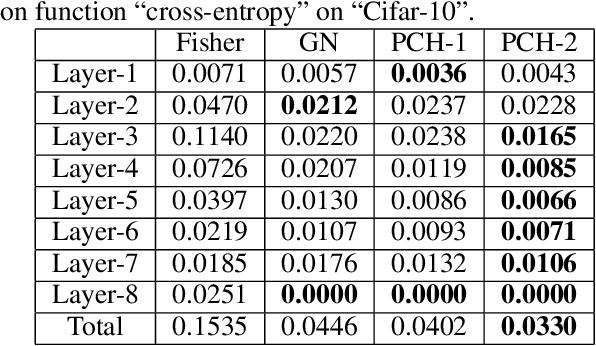

Abstract: We propose a block-diagonal approximation of the positive-curvature Hessian (BDA-PCH) matrix to measure curvature. Our proposed BDA-PCH matrix is memory efficient and can be applied to any fully connected neural network whose activation and criterion functions are twice differentiable. In particular, our BDA-PCH matrix can handle non-convex criterion functions. We devise an efficient scheme that uses the conjugate gradient method to derive Newton directions in the mini-batch setting. Empirical studies show that our method converges faster than competing second-order methods.
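The abstract does not spell out the solver details, so what follows is only a minimal sketch of the general idea, not the authors' mini-batch scheme. It assumes each curvature block `G_l` is already symmetric positive semi-definite and stored as a small dense matrix, and it adds a hypothetical damping term `damping * I` so that each block is positive definite. Under those assumptions, a Newton direction for each block can be obtained independently by solving `(G_l + damping * I) d_l = -g_l` with plain conjugate gradient, which only needs matrix-vector products. The function names `conjugate_gradient` and `block_newton_direction` are illustrative, not from the paper.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def conjugate_gradient(matvec: Callable[[Vector], Vector], b: Vector,
                       max_iter: int = 100, tol: float = 1e-10) -> Vector:
    """Approximately solve A x = b for symmetric positive-definite A,
    accessing A only through the product matvec(v) = A v."""
    x = [0.0] * len(b)
    r = list(b)          # residual b - A x, with x = 0 initially
    p = list(r)          # first search direction is the residual
    rs_old = dot(r, r)
    for _ in range(max_iter):
        if rs_old ** 0.5 < tol:
            break
        ap = matvec(p)
        alpha = rs_old / dot(p, ap)            # exact step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old                 # keeps directions A-conjugate
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


def block_newton_direction(blocks: List[Matrix], grads: List[Vector],
                           damping: float = 1e-3) -> List[Vector]:
    """Hypothetical helper: for each assumed-PSD block G_l and gradient g_l,
    solve (G_l + damping * I) d_l = -g_l independently with CG."""
    directions = []
    for g_block, grad in zip(blocks, grads):
        def matvec(v: Vector, g_block: Matrix = g_block) -> Vector:
            return [dot(row, v) + damping * vi for row, vi in zip(g_block, v)]
        directions.append(conjugate_gradient(matvec, [-gi for gi in grad]))
    return directions


if __name__ == "__main__":
    blocks = [[[4.0, 1.0], [1.0, 3.0]], [[2.0]]]
    grads = [[1.0, 2.0], [4.0]]
    print(block_newton_direction(blocks, grads, damping=0.0))
```

Because the blocks are solved separately, memory grows with the size of the largest block rather than with the full parameter count, and positive (semi-)definiteness of each damped block is what makes CG well defined and the resulting direction a descent direction.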
