Shaorong Zhang

Generation Order and Parallel Decoding in Masked Diffusion Models: An Information-Theoretic Perspective

Jan 30, 2026

Abstract:Masked Diffusion Models (MDMs) significantly accelerate inference by trading off sequential determinism. However, the theoretical mechanisms governing generation order and the risks inherent in parallelization remain under-explored. In this work, we provide a unified information-theoretic framework to decouple and analyze two fundamental sources of failure: order sensitivity and parallelization bias. Our analysis yields three key insights: (1) the benefits of Easy-First decoding (prioritizing low-entropy tokens) are magnified as model error increases; (2) factorized parallel decoding introduces intrinsic sampling errors that can lead to arbitrarily large Reverse KL divergence, capturing "incoherence" failures that standard Forward KL metrics overlook; and (3) while verification can eliminate sampling error, it incurs an exponential cost governed by the total correlation within a block. Conversely, heuristics like remasking, though computationally efficient, cannot guarantee distributional correctness. Experiments on a controlled Block-HMM and large-scale MDMs (LLaDA) for arithmetic reasoning validate our theoretical framework.
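Insight (2) can be illustrated with a toy two-token example. The sketch below is not taken from the paper: the joint distribution `p`, the helper names `marginal` and `kl`, and the reading of "Reverse KL" as KL(q || p), with q the factorized sampler, are all assumptions made for illustration. Sampling each token independently from its marginal puts probability mass on sequences the true joint never produces, so the reverse KL is infinite while the forward KL stays finite and equals the total correlation of the block.

```python
import math

# Hypothetical toy joint over two binary tokens: only "00" and "11" are coherent.
p = {(0, 0): 0.5, (1, 1): 0.5, (0, 1): 0.0, (1, 0): 0.0}

def marginal(joint, axis):
    """Marginal distribution of the token at position `axis`."""
    out = {0: 0.0, 1: 0.0}
    for tokens, prob in joint.items():
        out[tokens[axis]] += prob
    return out

def kl(a, b):
    """KL(a || b) in nats; infinite if a has mass where b has none."""
    total = 0.0
    for key, a_k in a.items():
        if a_k == 0.0:
            continue
        if b[key] == 0.0:
            return math.inf
        total += a_k * math.log(a_k / b[key])
    return total

# Factorized parallel decoding (assumed form): sample each position
# independently from its marginal under the true joint.
m0, m1 = marginal(p, 0), marginal(p, 1)
q = {(x, y): m0[x] * m1[y] for x in (0, 1) for y in (0, 1)}

forward_kl = kl(p, q)   # finite; equals the total correlation of the block
reverse_kl = kl(q, p)   # infinite: q generates sequences that p forbids
incoherent_mass = q[(0, 1)] + q[(1, 0)]

print(f"forward KL(p||q) = {forward_kl:.4f} nats")
print(f"reverse KL(q||p) = {reverse_kl}")
print(f"probability of an incoherent sample = {incoherent_mass}")
```

In this toy case half of the factorized samples are incoherent ("01" or "10"), which the forward KL of log 2 nats does not reveal on its own.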

Thinking Out of Order: When Output Order Stops Reflecting Reasoning Order in Diffusion Language Models

Jan 29, 2026

Abstract:Autoregressive (AR) language models enforce a fixed left-to-right generation order, creating a fundamental limitation when the required output structure conflicts with natural reasoning (e.g., producing answers before explanations due to presentation or schema constraints). In such cases, AR models must commit to answers before generating intermediate reasoning, and this rigid constraint forces premature commitment. Masked diffusion language models (MDLMs), which iteratively refine all tokens in parallel, offer a way to decouple computation order from output structure. We validate this capability on GSM8K, Math500, and ReasonOrderQA, a benchmark we introduce with controlled difficulty and order-level evaluation. When prompts request answers before reasoning, AR models exhibit large accuracy gaps compared to standard chain-of-thought ordering (up to 67% relative drop), while MDLMs remain stable ($\leq$14% relative drop), a property we term "order robustness". Using ReasonOrderQA, we present evidence that MDLMs achieve order robustness by stabilizing simpler tokens (e.g., reasoning steps) earlier in the diffusion process than complex ones (e.g., final answers), enabling reasoning tokens to stabilize before answer commitment. Finally, we identify failure conditions where this advantage weakens, outlining the limits required for order robustness.

Measurement-aligned Flow for Inverse Problem

Jun 13, 2025

Abstract:Diffusion models provide a powerful way to incorporate complex prior information for solving inverse problems. However, existing methods struggle to correctly incorporate guidance from conflicting signals in the prior and measurement, especially in the challenging setting of non-Gaussian or unknown noise. To bridge these gaps, we propose Measurement-Aligned Sampling (MAS), a novel framework for linear inverse problem solving that can more flexibly balance prior and measurement information. MAS unifies and extends existing approaches like DDNM and DAPS, and offers a new optimization perspective. MAS generalizes to known Gaussian noise as well as unknown or non-Gaussian noise types. Extensive experiments show that MAS consistently outperforms state-of-the-art methods across a range of tasks.

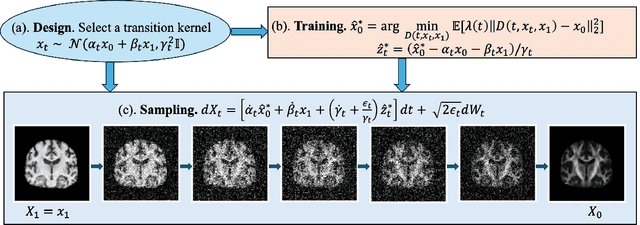

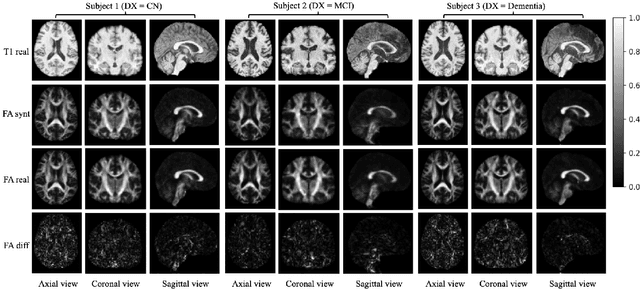

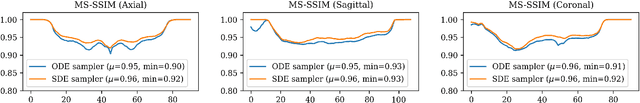

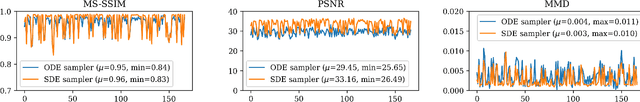

Diffusion Bridge Models for 3D Medical Image Translation

Apr 21, 2025

Abstract:Diffusion tensor imaging (DTI) provides crucial insights into the microstructure of the human brain, but it can be time-consuming to acquire compared to more readily available T1-weighted (T1w) magnetic resonance imaging (MRI). To address this challenge, we propose a diffusion bridge model for 3D brain image translation between T1w MRI and DTI modalities. Our model learns to generate high-quality DTI fractional anisotropy (FA) images from T1w images and vice versa, enabling cross-modality data augmentation and reducing the need for extensive DTI acquisition. We evaluate our approach using perceptual similarity, pixel-level agreement, and distributional consistency metrics, demonstrating strong performance in capturing anatomical structures and preserving information on white matter integrity. The practical utility of the synthetic data is validated through sex classification and Alzheimer's disease classification tasks, where the generated images achieve comparable performance to real data. Our diffusion bridge model offers a promising solution for improving neuroimaging datasets and supporting clinical decision-making, with the potential to significantly impact neuroimaging research and clinical practice.

Generating Synthetic Net Load Data with Physics-informed Diffusion Model

Jun 04, 2024

Abstract:This paper presents a novel physics-informed diffusion model for generating synthetic net load data, addressing the challenges of data scarcity and privacy concerns. The proposed framework embeds physical models within denoising networks, offering a versatile approach that can be readily generalized to unforeseen scenarios. A conditional denoising neural network is designed to jointly train the parameters of the transition kernel of the diffusion model and the parameters of the physics-informed function. Utilizing the real-world smart meter data from Pecan Street, we validate the proposed method and conduct a thorough numerical study comparing its performance with state-of-the-art generative models, including generative adversarial networks, variational autoencoders, normalizing flows, and a well-calibrated baseline diffusion model. A comprehensive set of evaluation metrics is used to assess the accuracy and diversity of the generated synthetic net load data. The numerical study results demonstrate that the proposed physics-informed diffusion model outperforms state-of-the-art models across all quantitative metrics, yielding at least 20% improvement.
