
Shane Sims

A Neural Architecture for Detecting Confusion in Eye-tracking Data

Mar 13, 2020

Figures and Tables:

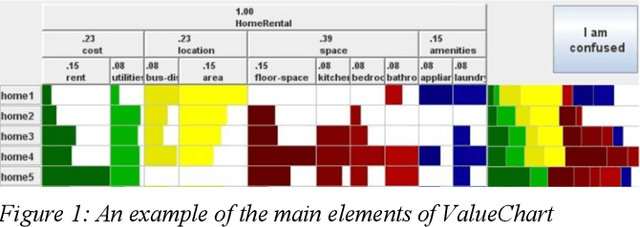

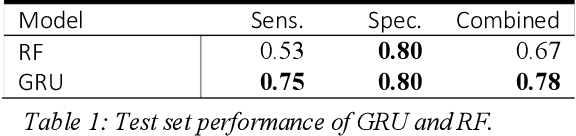

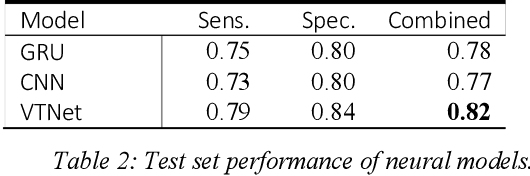

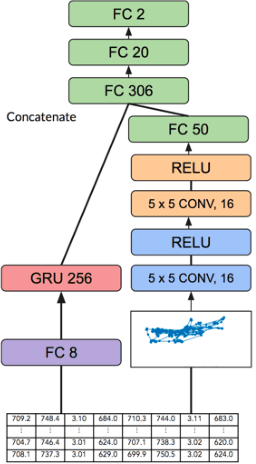

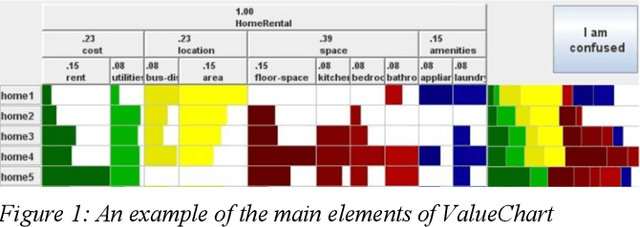

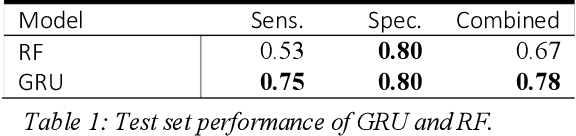

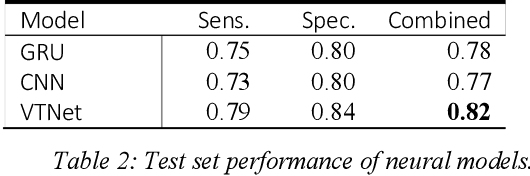

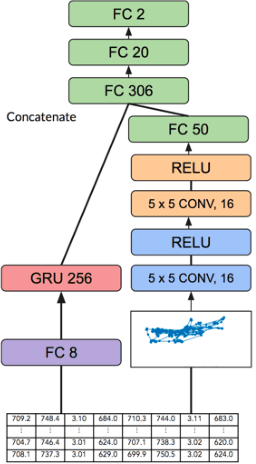

Abstract: Encouraged by the success of deep learning in a variety of domains, we investigate the effectiveness of its methods in a novel application: detecting user confusion in eye-tracking data. We introduce an architecture that uses RNN and CNN sub-models in parallel to take advantage of the temporal and visuospatial aspects of our data. Experiments with a dataset of user interactions with the ValueChart visualization tool show that our model outperforms an existing model based on Random Forests, resulting in a 22% improvement in combined sensitivity and specificity.
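For readers unfamiliar with the reported metric, the following is a minimal sketch of how sensitivity and specificity can be computed for a binary confused / not-confused classifier. The abstract does not say how the two are combined, so the plain sum used in `combined_score` is an assumption made only for illustration, and all function names and the toy labels are hypothetical.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, where 1 = confused."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # confused, detected
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # confused, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # not confused, correct
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # not confused, false alarm
    # Guard against empty classes to avoid division by zero.
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return sensitivity, specificity


def combined_score(y_true, y_pred):
    """Assumed combination: the plain sum of sensitivity and specificity."""
    sensitivity, specificity = sensitivity_specificity(y_true, y_pred)
    return sensitivity + specificity


# Toy labels (not from the paper): two confused and two non-confused samples.
y_true = [1, 1, 0, 0]
y_pred = [1, 0, 0, 0]
sens, spec = sensitivity_specificity(y_true, y_pred)
score = combined_score(y_true, y_pred)
```

Reporting both quantities matters when confusion is rare in the data: a classifier that always predicts "not confused" would reach high accuracy while having zero sensitivity.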
