Shahrzad Khaleghian

RetinotopicNet: An Iterative Attention Mechanism Using Local Descriptors with Global Context

May 12, 2020

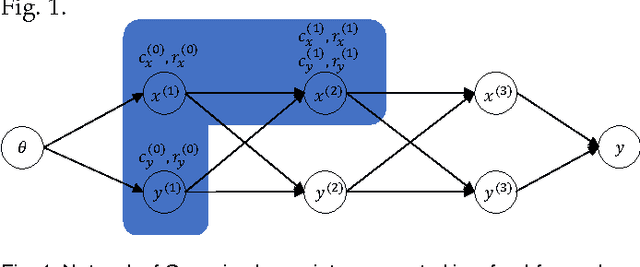

Abstract: Convolutional Neural Networks (CNNs) were the driving force behind many advancements in Computer Vision research in recent years. This progress has spawned many practical applications, and we see an increased need to efficiently move CNNs to embedded systems today. However, traditional CNNs lack the property of scale and rotation invariance, two of the most frequently encountered transformations in natural images. As a consequence, CNNs have to learn different features for the same objects at different scales. This redundancy is the main reason why CNNs need to be very deep in order to achieve the desired accuracy. In this paper we develop an efficient solution by reproducing how nature has solved the problem in the human brain. To this end, we let our CNN operate on small patches extracted using the log-polar transform, which is known to be scale and rotation equivariant. Patches extracted in this way have the useful property of magnifying the central field and compressing the periphery. Hence we obtain local descriptors with global context information. However, processing a single patch is usually not sufficient to achieve high accuracy in tasks such as classification. We therefore successively jump to several different locations, called saccades, thus building an understanding of the whole image. Since log-polar patches contain global context information, we can efficiently calculate subsequent saccades using only the small patches. Saccades efficiently compensate for the lack of translation equivariance of the log-polar transform.
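The log-polar patch extraction described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `log_polar_patch`, its parameters (`n_rho`, `n_theta`, `r_min`, `r_max`), the nearest-neighbour sampling, and the zero fill outside the image are all assumptions made for illustration. The sketch only shows why such a patch magnifies the centre and compresses the periphery: the sampling radii grow geometrically, so many samples fall near the fixation point and few fall far away.

```python
import numpy as np

def log_polar_patch(image, center, n_rho=32, n_theta=32, r_min=1.0, r_max=None):
    """Sample a small log-polar patch around `center` = (row, col).

    Hypothetical helper for illustration only. Rows of the result index
    log-spaced radii, columns index equally spaced angles.
    """
    h, w = image.shape[:2]
    cy, cx = center
    if r_max is None:
        # Assumption: let the outermost ring reach the whole image,
        # so the patch carries global context.
        r_max = np.hypot(h, w)

    # Geometric radii: dense near the centre, sparse in the periphery.
    rho = r_min * (r_max / r_min) ** (np.arange(n_rho) / (n_rho - 1))
    theta = 2.0 * np.pi * np.arange(n_theta) / n_theta

    # Cartesian sampling positions for every (radius, angle) pair.
    ys = cy + rho[:, None] * np.sin(theta)[None, :]
    xs = cx + rho[:, None] * np.cos(theta)[None, :]

    # Nearest-neighbour lookup; positions outside the image stay zero.
    yi = np.rint(ys).astype(int)
    xi = np.rint(xs).astype(int)
    inside = (yi >= 0) & (yi < h) & (xi >= 0) & (xi < w)

    patch = np.zeros((n_rho, n_theta) + image.shape[2:], dtype=image.dtype)
    patch[inside] = image[yi[inside], xi[inside]]
    return patch
```

In this sketch, a saccade would simply be a second call with a different `center`; how the next location is chosen is not specified in the abstract and is not modelled here.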

Training of Deep Neural Networks based on Distance Measures using RMSProp

Aug 06, 2017

Abstract: The vanishing gradient problem was a major obstacle to the success of deep learning. In recent years it was gradually alleviated through multiple different techniques. However, the problem was not really overcome in a fundamental way, since it is inherent to neural networks with activation functions based on dot products. In a series of papers, we are going to analyze alternative neural network structures which are not based on dot products. In this first paper, we revisit neural networks built up of layers based on distance measures and Gaussian activation functions. These kinds of networks were only sparsely used in the past since they are hard to train when using plain stochastic gradient descent methods. We show that by using Root Mean Square Propagation (RMSProp) it is possible to efficiently learn multi-layer neural networks. Furthermore, we show that, when appropriately initialized, these kinds of neural networks suffer much less from the vanishing and exploding gradient problem than traditional neural networks, even for deep networks.
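As a rough illustration of the two ingredients named in the abstract, the sketch below pairs a layer whose units respond to the squared Euclidean distance between the input and a learned centre, passed through a Gaussian, with the standard RMSProp update rule. The exact distance measure, the parameterisation, and the initialisation used in the paper are not given in the abstract, so the function names, the shared `sigma`, and the squared-error loss in `grad_centers` are assumptions chosen for illustration only.

```python
import numpy as np

def gaussian_distance_layer(x, centers, sigma=1.0):
    """Distance-based layer: unit k outputs exp(-||x - c_k||^2 / (2 sigma^2)).

    x: (batch, d_in), centers: (d_out, d_in). Assumed form, not the paper's.
    """
    diff = x[:, None, :] - centers[None, :, :]   # (batch, d_out, d_in)
    sq_dist = np.sum(diff ** 2, axis=-1)         # (batch, d_out)
    return np.exp(-sq_dist / (2.0 * sigma ** 2))

def grad_centers(x, centers, target, sigma=1.0):
    """Gradient of 0.5 * sum((y - target)^2) with respect to the centers."""
    y = gaussian_distance_layer(x, centers, sigma)
    # dy/dc_k = y * (x - c_k) / sigma^2 for the Gaussian above.
    delta = (y - target) * y / sigma ** 2        # (batch, d_out)
    diff = x[:, None, :] - centers[None, :, :]   # (batch, d_out, d_in)
    return np.sum(delta[:, :, None] * diff, axis=0)

def rmsprop_step(param, grad, cache, lr=1e-3, decay=0.9, eps=1e-8):
    """One RMSProp update: scale the step by a running RMS of the gradient."""
    cache = decay * cache + (1.0 - decay) * grad ** 2
    param = param - lr * grad / (np.sqrt(cache) + eps)
    return param, cache
```

A training loop would call `grad_centers` on each mini-batch and feed the result to `rmsprop_step`, carrying `cache` (initialised to zeros with the shape of `centers`) from one step to the next. Because RMSProp divides by the running gradient magnitude, the step size stays usable even where the Gaussian response, and therefore the raw gradient, is very small.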
