Sergiu Hart

Forecast Hedging and Calibration

Oct 13, 2022

Abstract: Calibration means that forecasts and average realized frequencies are close. We develop the concept of forecast hedging, which consists of choosing the forecasts so as to guarantee that the expected track record can only improve. This yields all the calibration results by the same simple basic argument while differentiating between them by the forecast-hedging tools used: deterministic and fixed point based versus stochastic and minimax based. Additional contributions are an improved definition of continuous calibration, ensuing game dynamics that yield Nash equilibria in the long run, and a new calibrated forecasting procedure for binary events that is simpler than all known such procedures.

* http://www.ma.huji.ac.il/hart/publ.html#calib-int

Smooth Calibration, Leaky Forecasts, Finite Recall, and Nash Dynamics

Oct 13, 2022

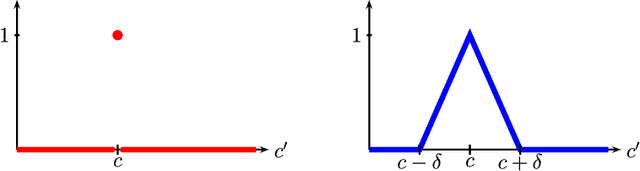

Abstract: We propose to smooth out the calibration score, which measures how good a forecaster is, by combining nearby forecasts. While regular calibration can be guaranteed only by randomized forecasting procedures, we show that smooth calibration can be guaranteed by deterministic procedures. As a consequence, it does not matter if the forecasts are leaked, i.e., made known in advance: smooth calibration can nevertheless be guaranteed (while regular calibration cannot). Moreover, our procedure has finite recall, is stationary, and all forecasts lie on a finite grid. To construct the procedure, we deal also with the related setups of online linear regression and weak calibration. Finally, we show that smooth calibration yields uncoupled finite-memory dynamics in n-person games, called "smooth calibrated learning," in which the players play approximate Nash equilibria in almost all periods (by contrast, calibrated learning, which uses regular calibration, yields only that the time-averages of play are approximate correlated equilibria).

* http://www.ma.huji.ac.il/hart/publ.html#calib-eq

Calibrated Forecasts: The Minimax Proof

Sep 13, 2022

Abstract: A formal write-up of the simple proof (1995) of the existence of calibrated forecasts by the minimax theorem, which moreover shows that N^3 periods suffice to guarantee a 1/N calibration error.

"Calibeating": Beating Forecasters at Their Own Game

Sep 11, 2022

Abstract: In order to identify expertise, forecasters should not be tested by their calibration score, which can always be made arbitrarily small, but rather by their Brier score. The Brier score is the sum of the calibration score and the refinement score; the latter measures how good the sorting into bins with the same forecast is, and thus attests to "expertise." This raises the question of whether one can gain calibration without losing expertise, which we refer to as "calibeating." We provide an easy way to calibeat any forecast, by a deterministic online procedure. We moreover show that calibeating can be achieved by a stochastic procedure that is itself calibrated, and then extend the results to simultaneously calibeating multiple procedures, and to deterministic procedures that are continuously calibrated.
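
The decomposition of the Brier score into calibration plus refinement can be checked numerically. The sketch below is only an illustration, not the paper's procedure: it assumes binary outcomes coded as 0 or 1, scalar forecasts, squared-error scores, and bins made of periods with exactly equal forecasts. The function name `brier_decomposition` is hypothetical.

```python
from collections import defaultdict


def brier_decomposition(forecasts, outcomes):
    """Return (brier, calibration, refinement) for a sequence of forecasts.

    Assumptions (not taken from the abstract): outcomes are 0/1, forecasts
    are scalars, and a "bin" is the set of periods sharing the same forecast.
    """
    n = len(forecasts)

    # Group the realized outcomes by the forecast that was announced.
    bins = defaultdict(list)
    for c, a in zip(forecasts, outcomes):
        bins[c].append(a)

    # Brier score: mean squared distance between outcome and forecast.
    brier = sum((a - c) ** 2 for c, a in zip(forecasts, outcomes)) / n

    calibration = 0.0
    refinement = 0.0
    for c, acts in bins.items():
        weight = len(acts) / n           # fraction of periods in this bin
        mean = sum(acts) / len(acts)     # realized frequency in this bin
        # Calibration: how far the bin's realized frequency is from its forecast.
        calibration += weight * (mean - c) ** 2
        # Refinement: variance of the outcomes inside the bin.
        refinement += weight * sum((a - mean) ** 2 for a in acts) / len(acts)

    return brier, calibration, refinement
```

For instance, forecasting 0.5 in every period against an alternating sequence of outcomes is perfectly calibrated, yet all of its Brier score comes from refinement, which is the sense in which a small calibration score alone does not attest to expertise.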
