Sergey Kolyubin

OSMa-Bench: Evaluating Open Semantic Mapping Under Varying Lighting Conditions

Mar 13, 2025

Abstract:Open Semantic Mapping (OSM) is a key technology in robotic perception, combining semantic segmentation and SLAM techniques. This paper introduces a dynamically configurable and highly automated LLM/LVLM-powered pipeline for evaluating OSM solutions called OSMa-Bench (Open Semantic Mapping Benchmark). The study focuses on evaluating state-of-the-art semantic mapping algorithms under varying indoor lighting conditions, a critical challenge in indoor environments. We introduce a novel dataset with simulated RGB-D sequences and ground truth 3D reconstructions, facilitating the rigorous analysis of mapping performance across different lighting conditions. Through experiments on leading models such as ConceptGraphs, BBQ and OpenScene, we evaluate the semantic fidelity of object recognition and segmentation. Additionally, we introduce a Scene Graph evaluation method to analyze the ability of models to interpret semantic structure. The results provide insights into the robustness of these models, informing future research directions for developing resilient and adaptable robotic systems. Our code is available at https://be2rlab.github.io/OSMa-Bench/.

Synergizing Morphological Computation and Generative Design: Automatic Synthesis of Tendon-Driven Grippers

Oct 10, 2024

Abstract:Robots' behavior and performance are determined by both hardware and software. The design process of robotic systems is a complex journey that involves multiple phases. Throughout this process, the aim is to tackle various criteria simultaneously, even though they often contradict each other. The ultimate goal is to uncover the optimal solution that resolves these conflicting factors. Generative, computational, and automated design are paradigms aimed at accelerating the whole design process. In this paper, we propose a design methodology to generate linkage mechanisms for robots with morphological computation. We use a graph grammar and a heuristic search algorithm to create robot mechanism graphs that are converted into simulation models for testing the design output. To verify the design methodology, we applied it to a relatively simple quasi-static problem of object grasping. We found a way to automatically design an underactuated tendon-driven gripper that can grasp a wide range of objects. This is possible because of its structure, not because of sophisticated planning or learning.

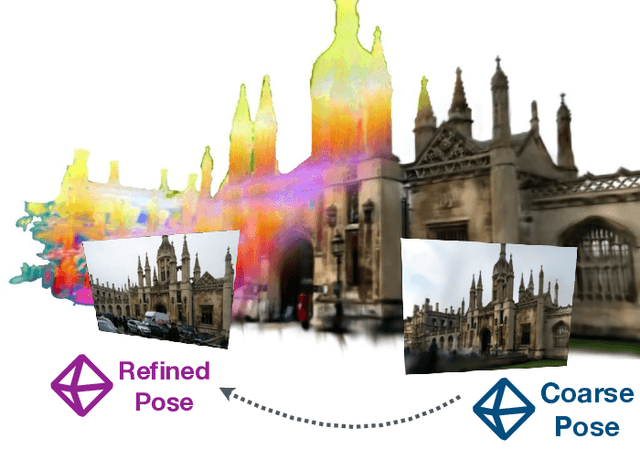

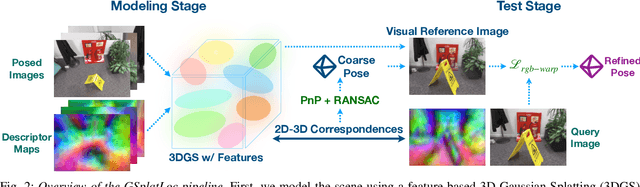

GSplatLoc: Grounding Keypoint Descriptors into 3D Gaussian Splatting for Improved Visual Localization

Sep 24, 2024

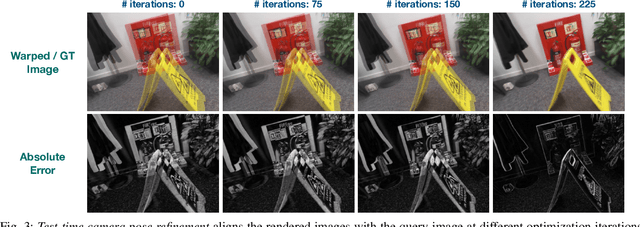

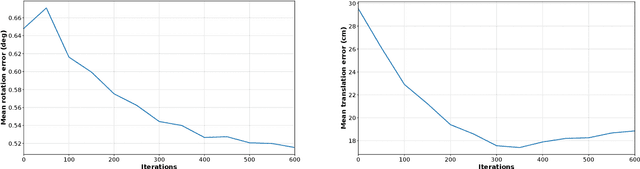

Abstract:Although various visual localization approaches exist, such as scene coordinate and pose regression, these methods often struggle with high memory consumption or extensive optimization requirements. To address these challenges, we utilize recent advancements in novel view synthesis, particularly 3D Gaussian Splatting (3DGS), to enhance localization. 3DGS allows for the compact encoding of both 3D geometry and scene appearance with its spatial features. Our method leverages the dense description maps produced by XFeat's lightweight keypoint detection and description model. We propose distilling these dense keypoint descriptors into 3DGS to improve the model's spatial understanding, leading to more accurate camera pose predictions through 2D-3D correspondences. After estimating an initial pose, we refine it using a photometric warping loss. Benchmarking on popular indoor and outdoor datasets shows that our approach surpasses state-of-the-art Neural Render Pose (NRP) methods, including NeRFMatch and PNeRFLoc.
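The abstract mentions pose estimation through 2D-3D correspondences built from keypoint descriptors. As a rough illustration only, the sketch below pairs 2D image descriptors with descriptors attached to 3D points by mutual nearest-neighbor cosine similarity. The function name `mutual_nearest_matches` and the array layout are assumptions made for this example, not part of GSplatLoc or XFeat; the paper's actual matching and refinement steps may differ.

```python
import numpy as np


def mutual_nearest_matches(desc_2d, desc_3d):
    """Return (i, j) index pairs where 2D descriptor i and 3D descriptor j
    are each other's best match under cosine similarity.

    desc_2d: (N, D) array of image keypoint descriptors (hypothetical layout).
    desc_3d: (M, D) array of descriptors attached to 3D points (hypothetical).
    """
    # Normalize rows so that the dot product equals cosine similarity.
    a = desc_2d / np.linalg.norm(desc_2d, axis=1, keepdims=True)
    b = desc_3d / np.linalg.norm(desc_3d, axis=1, keepdims=True)
    sim = a @ b.T  # (N, M) similarity matrix

    best_3d = sim.argmax(axis=1)  # best 3D candidate for each 2D keypoint
    best_2d = sim.argmax(axis=0)  # best 2D candidate for each 3D point

    # Keep only pairs that agree in both directions.
    idx_2d = np.arange(a.shape[0])
    keep = best_2d[best_3d] == idx_2d
    return np.stack([idx_2d[keep], best_3d[keep]], axis=1)


if __name__ == "__main__":
    desc_3d = np.eye(3)
    desc_2d = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 0.1]])
    print(mutual_nearest_matches(desc_2d, desc_3d))
```

The resulting index pairs could then be passed, together with the pixel and 3D coordinates they refer to, to a standard PnP solver with RANSAC to obtain an initial camera pose.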
