Sergei Turitsyn

Improving Analog Neural Network Robustness: A Noise-Agnostic Approach with Explainable Regularizations

Sep 13, 2024

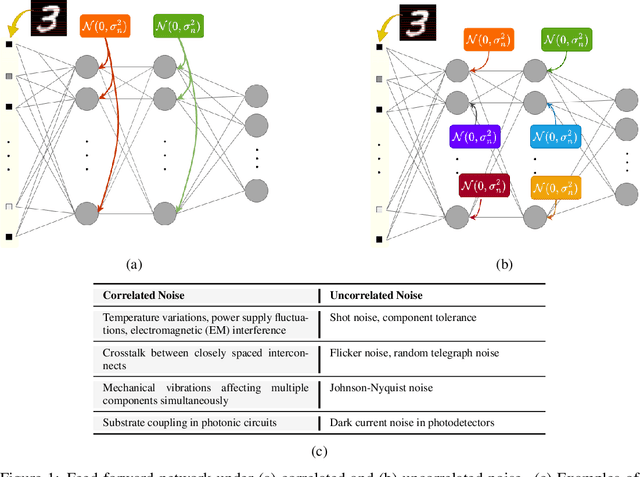

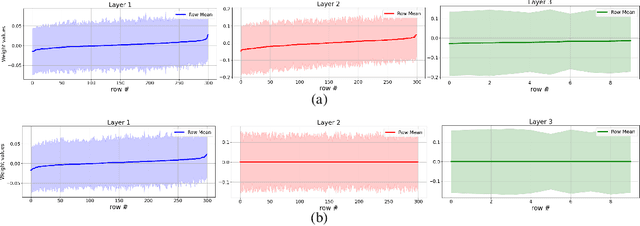

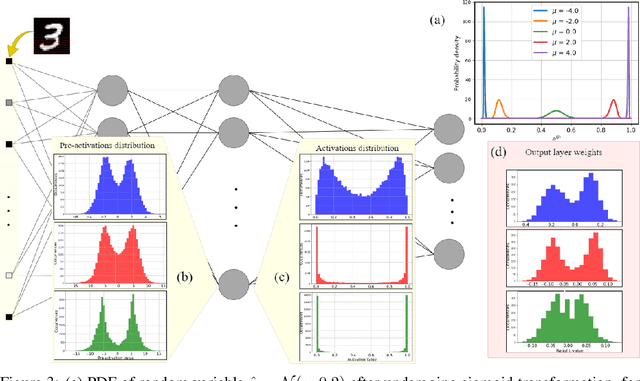

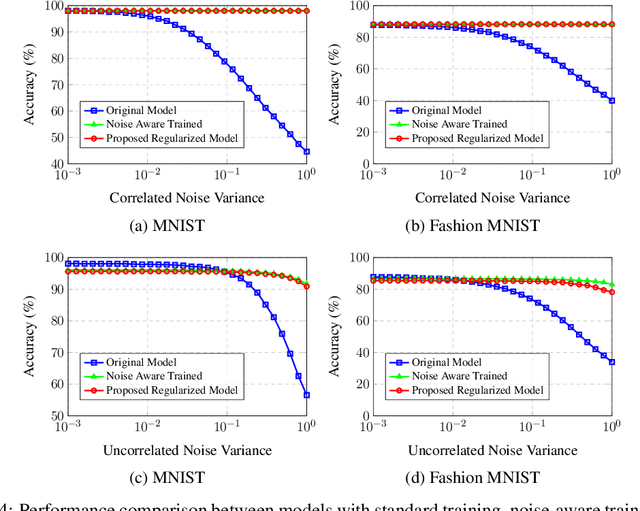

Abstract: This work tackles the critical challenge of mitigating "hardware noise" in deep analog neural networks, a major obstacle in advancing analog signal processing devices. We propose a comprehensive, hardware-agnostic solution to address both correlated and uncorrelated noise affecting the activation layers of deep neural models. The novelty of our approach lies in its ability to demystify the "black box" nature of noise-resilient networks by revealing the underlying mechanisms that reduce sensitivity to noise. In doing so, we introduce a new explainable regularization framework that harnesses these mechanisms to significantly enhance noise robustness in deep neural architectures.

Stochastic resonance neurons in artificial neural networks

May 06, 2022

Abstract: Many modern applications of artificial neural networks involve a large number of layers, making traditional digital implementations increasingly complex. Optical neural networks offer parallel processing at high bandwidth but face the challenge of noise accumulation. We propose a new type of neural network that uses stochastic resonance as an inherent part of the architecture, and we demonstrate that it can significantly reduce the number of neurons required for a given accuracy. We also show that such a neural network is more robust against the impact of noise.
