Sayan Chatterjee

The Impact of AI Tool on Engineering at ANZ Bank: An Empirical Study on GitHub Copilot within Corporate Environment

Feb 08, 2024

Abstract: The increasing popularity of AI, particularly Large Language Models (LLMs), has significantly impacted various domains, including Software Engineering. This study explores the integration of AI tools in software engineering practices within a large organization. We focus on ANZ Bank, which employs over 5000 engineers covering all aspects of the software development life cycle. This paper details an experiment conducted using GitHub Copilot, a notable AI tool, within a controlled environment to evaluate its effectiveness in real-world engineering tasks. Additionally, this paper shares initial findings on the productivity improvements observed after GitHub Copilot was adopted on a large scale, with about 1000 engineers using it. ANZ Bank's six-week experiment with GitHub Copilot included two weeks of preparation and four weeks of active testing. The study evaluated participant sentiment and the tool's impact on productivity, code quality, and security. Initially, participants used GitHub Copilot for proposed use-cases, with their feedback gathered through regular surveys. In the second phase, they were divided into Control and Copilot groups, each tackling the same Python challenges, and their experiences were again surveyed. Results showed a notable boost in productivity and code quality with GitHub Copilot, though its impact on code security remained inconclusive. Participant responses were overall positive, confirming GitHub Copilot's effectiveness in large-scale software engineering environments. Early data from 1000 engineers also indicated a significant increase in productivity and job satisfaction.

Federated Learning for Intrusion Detection in IoT Security: A Hybrid Ensemble Approach

Jun 25, 2021

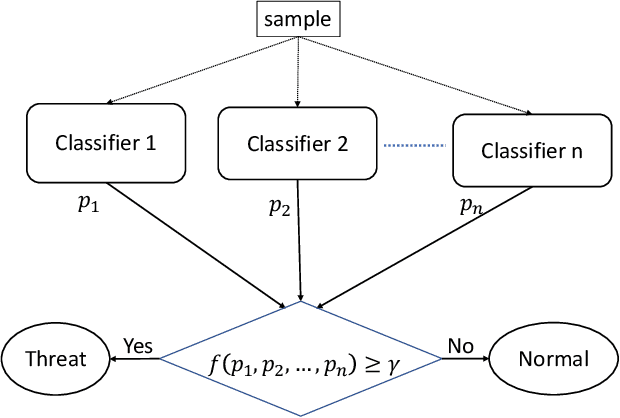

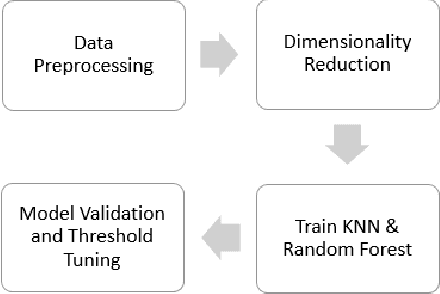

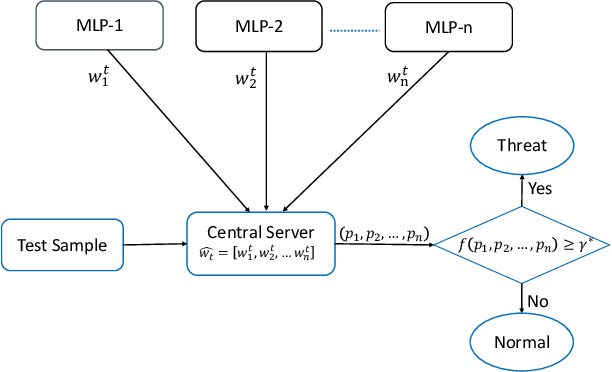

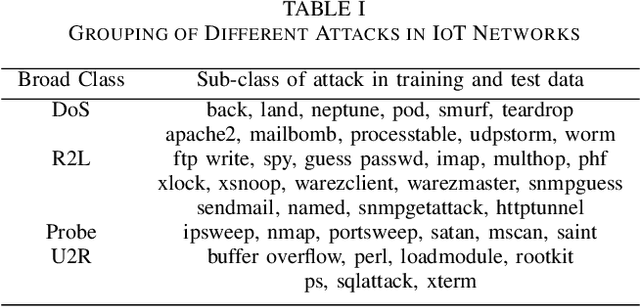

Abstract: The critical role of the Internet of Things (IoT) in domains such as smart cities, healthcare, supply chains, and transportation has made IoT systems a target of malicious attacks. Past work in this area focused on centralized Intrusion Detection Systems (IDS), assuming the existence of a central entity to perform data analysis and identify threats. However, such an IDS may not always be feasible, mainly because the data is spread across multiple sources and gathering it at a central node can be costly. Also, earlier work primarily focused on improving the True Positive Rate (TPR) and ignored the False Positive Rate (FPR), which must also be kept low to avoid unnecessary system downtime. In this paper, we first present an IDS architecture based on a hybrid ensemble model, named PHEC, which gives improved performance compared to state-of-the-art architectures. We then adapt this model to a federated learning framework that performs local training and aggregates only the model parameters. Next, we propose Noise-Tolerant PHEC in centralized and federated settings to address the label-noise problem. The proposed approach uses classifiers with weighted convex surrogate loss functions. The natural robustness of the KNN classifier to noisy data is also used in the proposed architecture. Experimental results on four benchmark datasets drawn from various security attacks show that our model achieves high TPR while keeping FPR low on both noisy and clean data. Further, they demonstrate that the hybrid ensemble models achieve performance in federated settings close to that in centralized settings.
