Sanghyuck Ha

A Simple Method to Reduce Off-chip Memory Accesses on Convolutional Neural Networks

Jan 28, 2019

Abstract: A simple algorithm is proposed to reduce off-chip memory accesses in convolutional neural networks by maximally utilizing the on-chip memory of a neural processing unit (NPU). In particular, the algorithm provides an effective way to process a module that consists of multiple branches and a merge layer. For Inception-V3 on Samsung's NPU in Exynos, our evaluation shows that the proposed algorithm reduces off-chip memory accesses to 1/50, and accordingly achieves a 97.59% reduction in the amount of feature-map data transferred to and from off-chip memory.

Convolutional Neural Network Quantization using Generalized Gamma Distribution

Oct 31, 2018

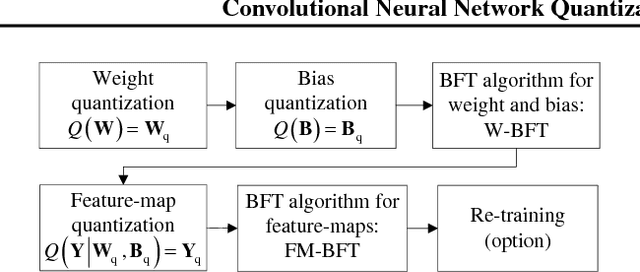

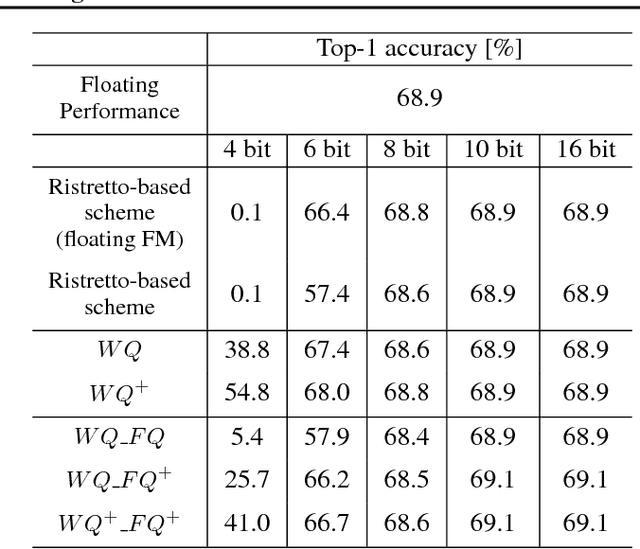

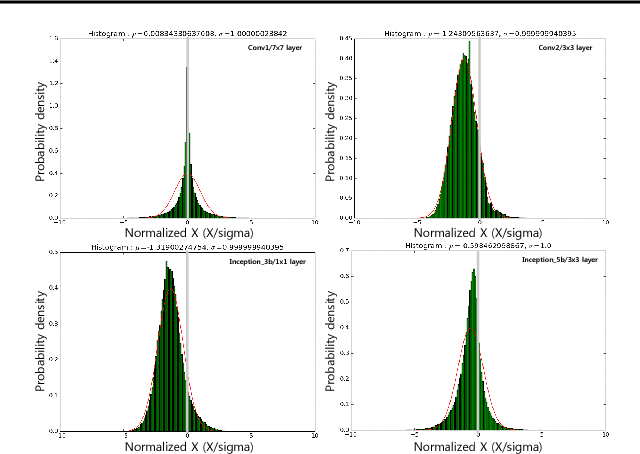

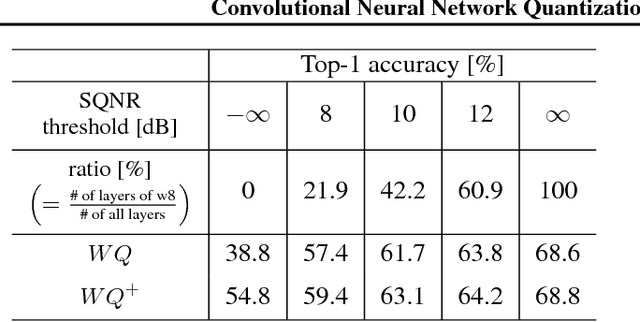

Abstract: As edge applications using convolutional neural network (CNN) models grow, it is becoming necessary to introduce dedicated hardware accelerators in which network parameters and feature-map data are represented with limited precision. In this paper we propose a novel quantization algorithm for energy-efficient deployment of such hardware accelerators. For weights and biases, the optimal bit length of the fractional part is determined so that the quantization error is minimized over their distribution. For feature-map data, the sample distribution is well approximated by the generalized gamma distribution (GGD), and the optimal quantization step size can therefore be obtained from the asymptotic closed-form solution for the GGD. The proposed quantization algorithm achieves a higher signal-to-quantization-noise ratio (SQNR) than quantization schemes previously proposed for CNNs, and it can be improved further by tuning the quantization parameters, resulting in an efficient implementation of hardware accelerators for CNNs in terms of power consumption and memory bandwidth.
