Sam Keene

Autoencoding Neural Networks as Musical Audio Synthesizers

Apr 27, 2020

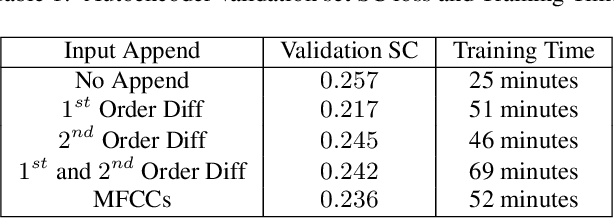

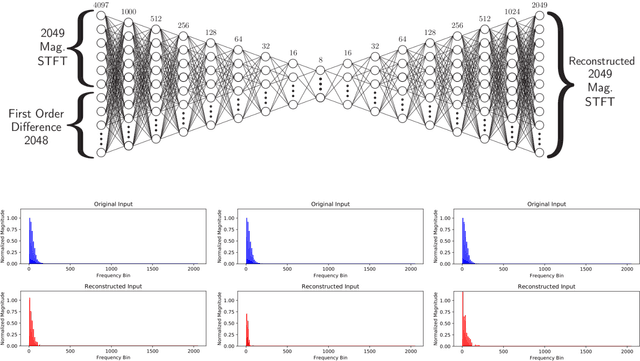

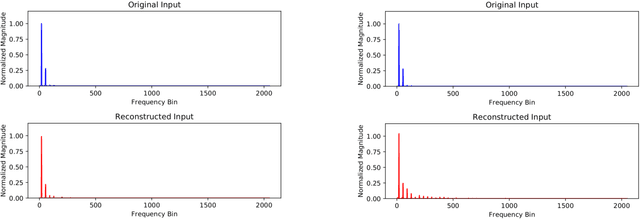

Abstract: A method for musical audio synthesis using autoencoding neural networks is proposed. The autoencoder is trained to compress and reconstruct magnitude short-time Fourier transform frames. The autoencoder produces a spectrogram by activating its smallest hidden layer, and a phase response is calculated using real-time phase gradient heap integration. Taking an inverse short-time Fourier transform produces the audio signal. Our algorithm is lightweight when compared to current state-of-the-art audio-producing machine learning algorithms. We outline our design process, produce metrics, and detail an open-source Python implementation of our model.
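The signal path described in this abstract (magnitude STFT frames in, inverse STFT out) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the frame length of 1024, the hop of 256, and the Hann window are all assumptions chosen for illustration. The autoencoder itself is omitted, and the original phase of the test signal stands in for the phase that real-time phase gradient heap integration would estimate.

```python
import numpy as np

def stft_frames(signal, frame_len=1024, hop=256):
    """Return complex STFT frames (one row per frame) using a Hann window."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    return np.fft.rfft(frames, axis=1)

def istft_frames(spectra, frame_len=1024, hop=256):
    """Invert STFT frames by windowed overlap-add with window-power normalization."""
    window = np.hanning(frame_len)
    n_frames = spectra.shape[0]
    out = np.zeros(frame_len + hop * (n_frames - 1))
    norm = np.zeros_like(out)
    frames = np.fft.irfft(spectra, n=frame_len, axis=1)
    for i in range(n_frames):
        start = i * hop
        out[start : start + frame_len] += frames[i] * window
        norm[start : start + frame_len] += window ** 2
    # Guard against division by zero at the edges, where the window vanishes.
    return out / np.maximum(norm, 1e-8)

# Hypothetical test signal: a 440 Hz sine sampled at 16 kHz.
sample_rate = 16000
t = np.arange(8192) / sample_rate
signal = np.sin(2 * np.pi * 440 * t)

spectra = stft_frames(signal)
magnitude = np.abs(spectra)   # the kind of frame an autoencoder would be trained on
phase = np.angle(spectra)     # ASSUMPTION: stand-in for an estimated phase response

# Recombine magnitude and phase, then take the inverse STFT to get audio.
recon = istft_frames(magnitude * np.exp(1j * phase))
```

In a synthesizer of the kind the abstract describes, `magnitude` would come from the decoder half of the network and `phase` from a phase-reconstruction algorithm, rather than from an existing recording.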

Conditioning Autoencoder Latent Spaces for Real-Time Timbre Interpolation and Synthesis

Jan 30, 2020

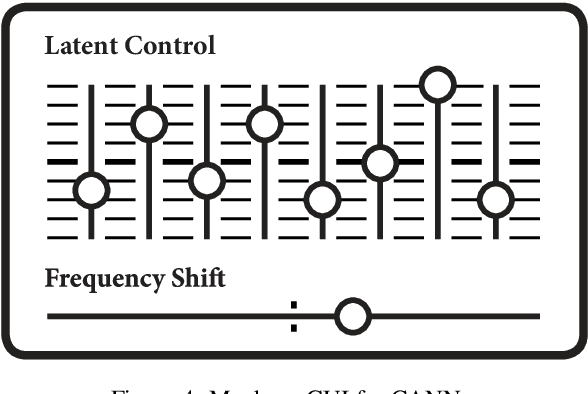

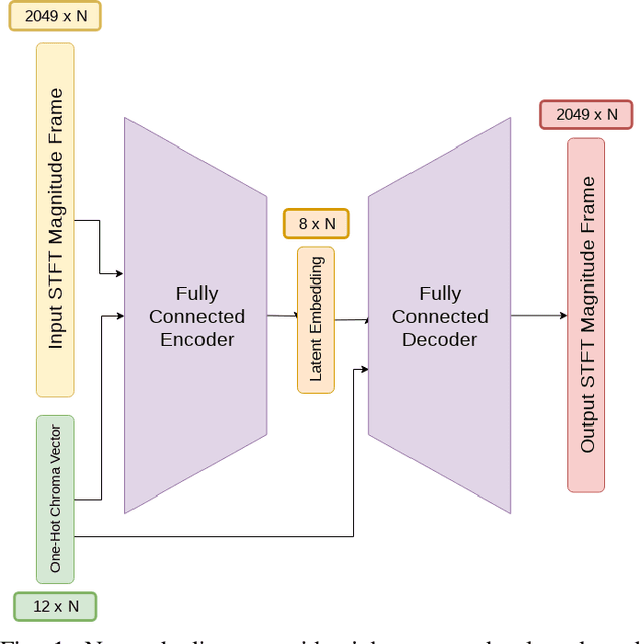

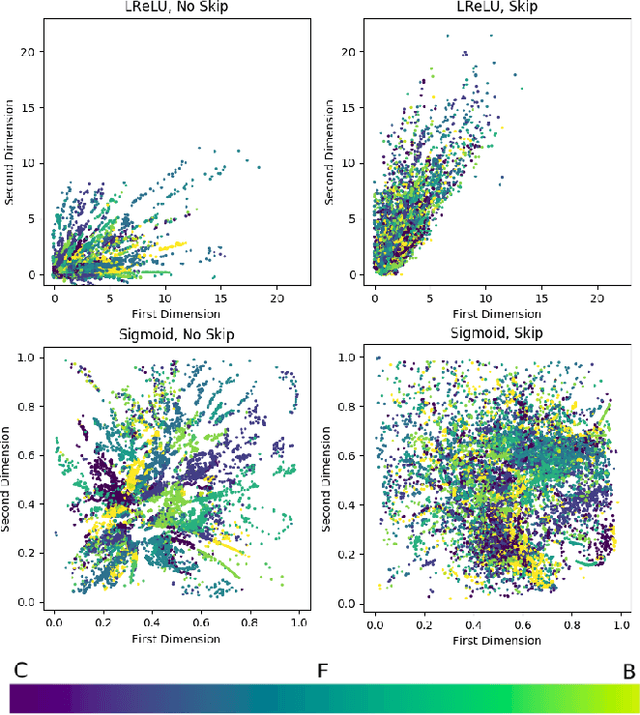

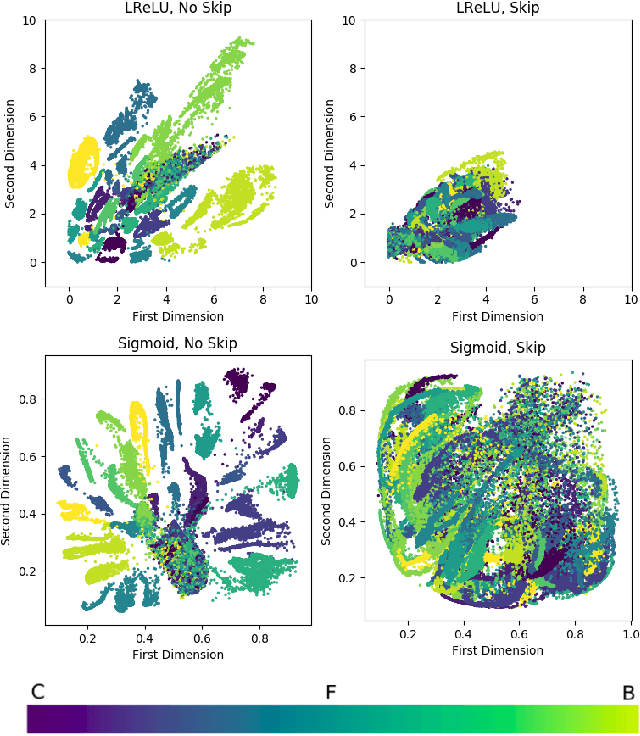

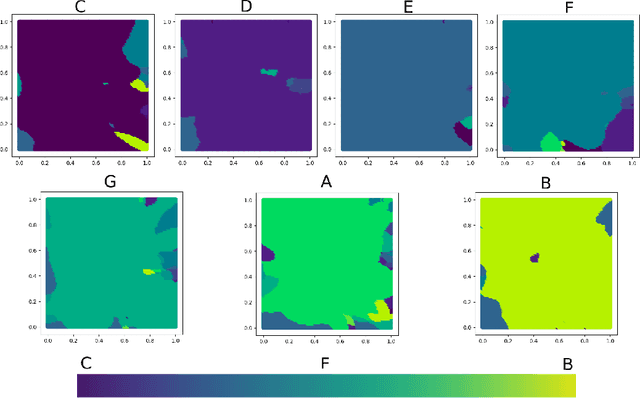

Abstract: We compare the performance of standard autoencoder topologies for timbre generation. We demonstrate how different activation functions used in the autoencoder's bottleneck distribute a training corpus's embedding. We show that the choice of sigmoid activation in the bottleneck produces a more bounded and uniformly distributed embedding than a leaky rectified linear unit activation. We propose a one-hot encoded chroma feature vector for use in both input augmentation and latent space conditioning. We measure the performance of these networks, and characterize the latent embeddings that arise from the use of this chroma conditioning vector. An open-source, real-time timbre synthesis algorithm in Python is outlined and shared.
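As a rough illustration of the two ideas in this abstract, the sketch below builds a one-hot chroma vector and compares the range of sigmoid and leaky ReLU activations. Everything here is an assumption made for illustration: the mapping from a MIDI note number to a pitch class via `note % 12`, the 513-bin frame size, the 8-dimensional latent code, the leaky ReLU slope of 0.01, and all function names. The abstract does not specify these details.

```python
import numpy as np

N_CHROMA = 12  # pitch classes C, C#, ..., B

def one_hot_chroma(midi_note):
    """One-hot vector marking the pitch class of a MIDI note (ASSUMED mapping: note % 12)."""
    vec = np.zeros(N_CHROMA)
    vec[midi_note % N_CHROMA] = 1.0
    return vec

def condition(vector, midi_note):
    """Append the chroma one-hot to a vector (an input frame or a latent code)."""
    return np.concatenate([vector, one_hot_chroma(midi_note)])

def sigmoid(x):
    """Squash values into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

def leaky_relu(x, slope=0.01):
    """Pass positives unchanged and scale negatives; the output is unbounded."""
    return np.where(x > 0, x, slope * x)

rng = np.random.default_rng(0)

# Input augmentation: a hypothetical 513-bin magnitude frame plus 12 chroma entries.
frame = rng.random(513)
augmented_input = condition(frame, midi_note=69)  # MIDI 69 is A4

# Latent conditioning: hypothetical bottleneck pre-activations plus the same chroma vector.
pre_activations = rng.normal(scale=5.0, size=8)
bounded_latent = sigmoid(pre_activations)        # every entry lies between 0 and 1
unbounded_latent = leaky_relu(pre_activations)   # entries can be arbitrarily large
conditioned_latent = condition(bounded_latent, midi_note=69)
```

A bounded latent code is convenient for a real-time synthesizer because each latent dimension can be mapped directly onto a control with fixed end points.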

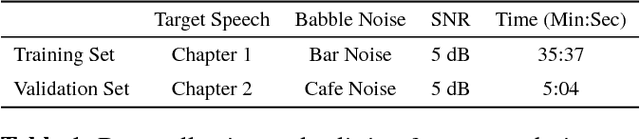

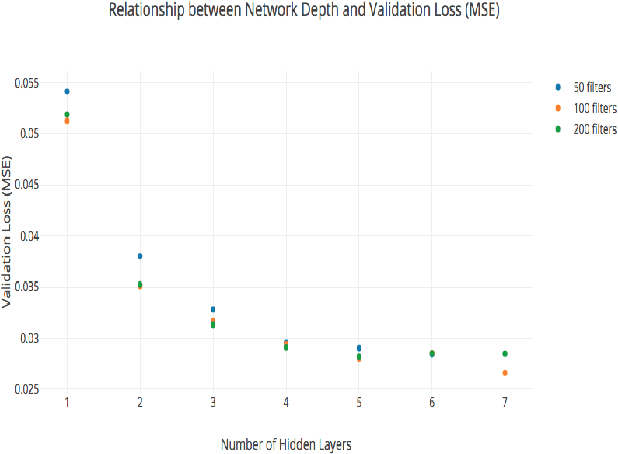

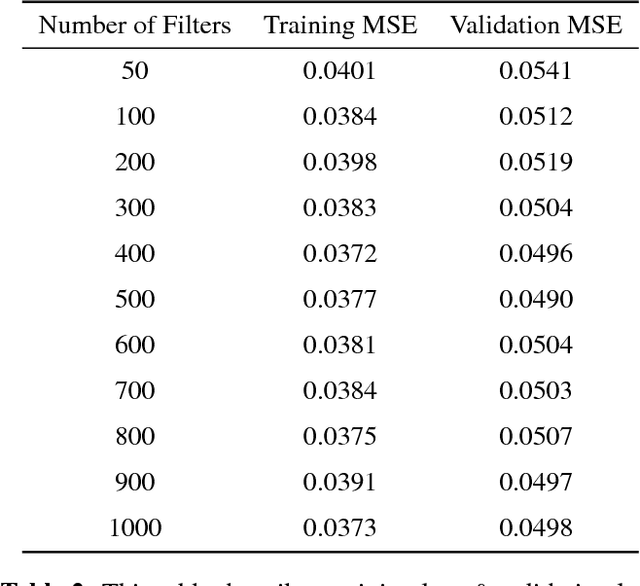

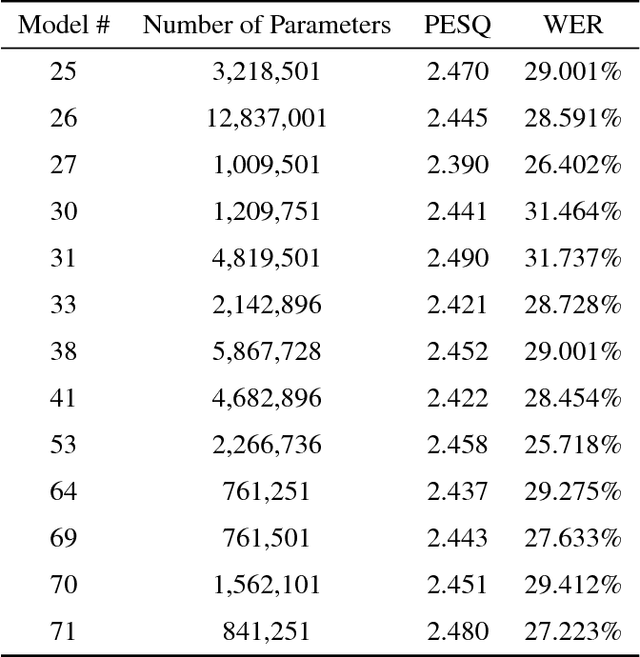

A Fully Convolutional Neural Network Approach to End-to-End Speech Enhancement

Jul 20, 2018

Abstract: This paper describes a novel approach to the cocktail party problem that relies on a fully convolutional neural network (FCN) architecture. The FCN takes noisy audio data as input and performs nonlinear filtering operations to produce clean audio data of the target speech at the output. Our method learns a model for one specific speaker, and is then able to extract that speaker's voice from babble background noise. Results from experimentation indicate the ability to generalize to new speakers and robustness to new noise environments of varying signal-to-noise ratios. A potential application of this method would be for use in hearing aids. A pre-trained model could be quickly fine-tuned for an individual's family members and close friends, and deployed onto a hearing aid to assist listeners in noisy environments.
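The idea of a network built only from convolutions, acting as a stack of nonlinear filters on raw audio, can be sketched in NumPy as follows. This is not the paper's architecture: the layer count, channel widths, kernel width of 9, and `tanh` nonlinearity are assumptions, and the weights are random and untrained, so the output is not enhanced speech. The sketch only shows that such a stack maps a waveform of any length to a waveform of the same length.

```python
import numpy as np

def conv_layer(x, kernels, activation=np.tanh):
    """Apply one 1-D convolutional layer.

    x:       array of shape (channels_in, length)
    kernels: array of shape (channels_out, channels_in, width)
    Note: np.convolve flips the kernel (true convolution), whereas most
    deep learning frameworks compute cross-correlation.
    """
    out = np.stack([
        sum(np.convolve(x[c], k[c], mode="same") for c in range(x.shape[0]))
        for k in kernels
    ])
    return activation(out)

def fcn_forward(noisy, layers):
    """Run a waveform through a stack of conv layers; the last layer is linear."""
    x = noisy[None, :]  # add a channel axis: (1, length)
    for kernels in layers[:-1]:
        x = conv_layer(x, kernels)
    x = conv_layer(x, layers[-1], activation=lambda v: v)
    return x[0]

rng = np.random.default_rng(0)

# ASSUMED toy topology: 1 -> 8 -> 8 -> 1 channels, kernel width 9, random weights.
layers = [
    rng.normal(scale=0.1, size=(8, 1, 9)),
    rng.normal(scale=0.1, size=(8, 8, 9)),
    rng.normal(scale=0.1, size=(1, 8, 9)),
]

# Because there are no dense layers, inputs of different lengths are both accepted.
short_out = fcn_forward(rng.normal(size=1000), layers)
long_out = fcn_forward(rng.normal(size=2500), layers)
```

In a trained model, the kernels would be learned from pairs of noisy and clean recordings rather than drawn at random.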

An Open Source Pattern Recognition Toolbox for MATLAB

Jun 21, 2014

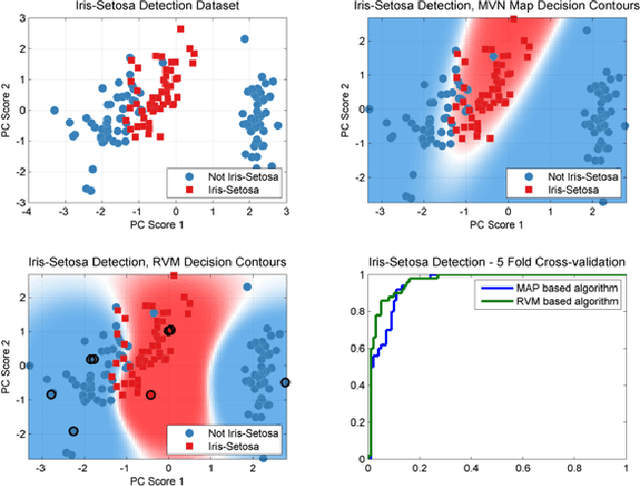

Abstract: Pattern recognition and machine learning are becoming integral parts of algorithms in a wide range of applications. Different algorithms and approaches for machine learning include different tradeoffs between performance and computation, so during algorithm development it is often necessary to explore a variety of different approaches to a given task. A toolbox with a unified framework across multiple pattern recognition techniques lets algorithm developers rapidly evaluate different choices prior to deployment. MATLAB is a widely used environment for algorithm development and prototyping, and although several MATLAB toolboxes for pattern recognition are currently available, these are either incomplete, expensive, or restrictively licensed. In this work we describe a MATLAB toolbox for pattern recognition and machine learning known as the PRT (Pattern Recognition Toolbox), licensed under the permissive MIT license. The PRT includes many popular techniques for data preprocessing, supervised learning, clustering, regression and feature selection, as well as a methodology for combining these components using a simple, uniform syntax. The resulting algorithms can be evaluated using cross-validation and a variety of scoring metrics to ensure robust performance when the algorithm is deployed. This paper presents an overview of the PRT as well as an example of usage on Fisher's Iris dataset.
