Sally Khaidem

A Novel Approach for Neuromorphic Vision Data Compression based on Deep Belief Network

Oct 27, 2022

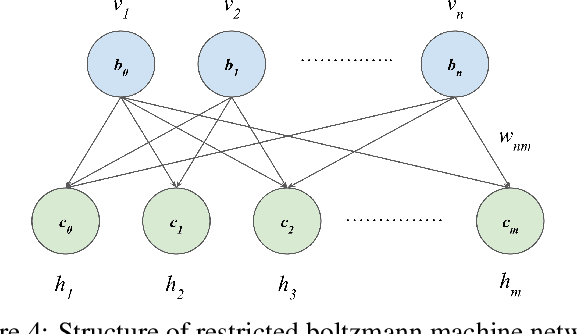

Abstract: A neuromorphic camera is an image sensor that emulates the human eye by capturing only changes in local brightness levels. Such sensors are widely known as event cameras, silicon retinas, or dynamic vision sensors (DVS). A DVS records asynchronous per-pixel brightness changes, producing a stream of events that encode the time, location, and polarity of each brightness change. DVS cameras consume little power and capture a wider dynamic range, with no motion blur and higher temporal resolution than conventional frame-based cameras. Although this method of event capture yields a lower bit rate than traditional video capture, the event data can be compressed further. This paper proposes a novel deep learning-based compression scheme for event data. Using a deep belief network (DBN), the high-dimensional event data is reduced to a latent representation, which is then encoded using an entropy-based coding technique. The proposed scheme is among the first to incorporate deep learning into event compression. It achieves a high compression ratio while maintaining good reconstruction quality, outperforming state-of-the-art event data coders and other lossless benchmark techniques.
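As a rough illustration of the data this abstract describes, the Python sketch below bins a DVS event stream into per-polarity count frames and measures the empirical Shannon entropy of a symbol array. Under a memoryless (i.i.d.) model, that entropy is a lower bound on the average bits per symbol any entropy coder can reach. This is a sketch under stated assumptions, not the paper's method: the `(t, x, y, p)` column layout, the fixed-width temporal binning, and the helper names `events_to_frames` and `entropy_bits_per_symbol` are hypothetical, and the DBN stage is omitted entirely.

```python
import numpy as np


def events_to_frames(events, width, height, num_bins, t_start, t_end):
    """Accumulate DVS events into per-polarity count frames.

    Assumption (hypothetical layout): `events` is an (N, 4) integer array
    with columns timestamp, x, y, polarity (0 = OFF, 1 = ON).
    Returns an int64 array of shape (num_bins, 2, height, width).
    """
    frames = np.zeros((num_bins, 2, height, width), dtype=np.int64)
    t, x, y, p = events[:, 0], events[:, 1], events[:, 2], events[:, 3]
    # Map each timestamp to a fixed-width temporal bin in [0, num_bins - 1].
    span = t_end - t_start
    b = ((t - t_start) * num_bins // span).clip(0, num_bins - 1)
    # np.add.at accumulates without buffering, so repeated pixels are all counted.
    np.add.at(frames, (b, p, y, x), 1)
    return frames


def entropy_bits_per_symbol(symbols):
    """Empirical Shannon entropy H = -sum(p * log2(p)) of a discrete array."""
    _, counts = np.unique(symbols, return_counts=True)
    probs = counts / counts.sum()
    return float(-(probs * np.log2(probs)).sum())


# Four events over 20 time units, split into two temporal bins.
events = np.array([[0, 1, 0, 1], [5, 1, 0, 1], [12, 2, 1, 0], [19, 0, 1, 1]])
frames = events_to_frames(events, width=3, height=2, num_bins=2, t_start=0, t_end=20)
bits = entropy_bits_per_symbol(frames.ravel())
```

Most pixels in a sparse event frame are zero, so the frame entropy is low; a learned latent representation such as the DBN's aims to lower the number of symbols to code further.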

A Novel Light Field Coding Scheme Based on Deep Belief Network and Weighted Binary Images for Additive Layered Displays

Oct 04, 2022

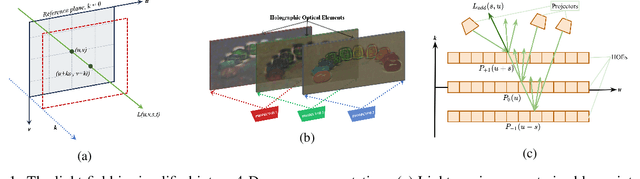

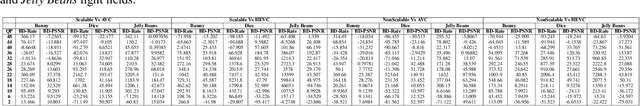

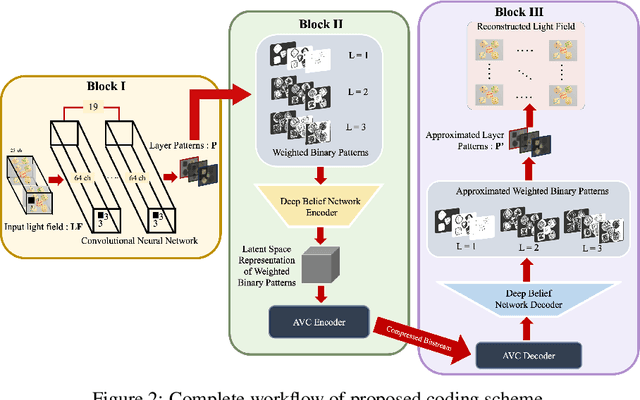

Abstract: Light field displays cater to the viewer's immersive experience by providing binocular depth sensation and motion parallax. Glasses-free tensor light field displays are becoming a prominent area of research in auto-stereoscopic display technology. Stacking light-attenuating layers is one approach to implementing a light field display with a good depth of field, wide viewing angles, and high resolution. This paper presents a compact and efficient representation of light field data for additive layered displays, based on scalable compression of binary-represented image layers using a Deep Belief Network (DBN). The proposed scheme learns and optimizes the additive layer patterns using a convolutional neural network (CNN). The optimized patterns are represented as weighted binary images, which reduces the file size and enables scalable encoding. The DBN further compresses the weighted binary patterns into a latent space representation, and the latent data is then encoded using an H.264 codec. The proposed scheme is compared with benchmark codecs such as H.264 and H.265 and achieves competitive performance on light field data.
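One way to picture a weighted binary representation is bit-plane slicing. An 8-bit layer pattern is split into binary planes weighted by powers of two, and decoding only the most significant planes gives a coarser pattern, which is what makes such an encoding scalable. The minimal Python sketch below assumes power-of-two weights and 8-bit layers; the paper's actual weights and bit depth may differ. The names `to_weighted_binary` and `reconstruct` are hypothetical, and the CNN optimization and DBN stages are not modelled.

```python
import numpy as np


def to_weighted_binary(layer, num_planes=8):
    """Split an 8-bit layer pattern into binary planes with weights 2**k.

    Assumption: power-of-two weights (plain bit-plane slicing).
    Returns (planes, weights): planes has shape (num_planes, H, W) and
    planes[k] holds bit k of every pixel (k = 0 is the least significant).
    """
    layer = np.asarray(layer, dtype=np.uint8)
    planes = np.stack([(layer >> k) & 1 for k in range(num_planes)]).astype(np.uint8)
    weights = 2 ** np.arange(num_planes)
    return planes, weights


def reconstruct(planes, weights, keep):
    """Rebuild the layer as a weighted sum of the `keep` most significant planes."""
    n = len(weights)
    idx = np.arange(n - keep, n)
    # Sum over the plane axis: result[h, w] = sum_k weights[k] * planes[k, h, w].
    return np.tensordot(weights[idx], planes[idx], axes=1)


layer = np.array([[0, 255], [128, 77]], dtype=np.uint8)
planes, weights = to_weighted_binary(layer)
full = reconstruct(planes, weights, keep=8)    # exact layer
coarse = reconstruct(planes, weights, keep=4)  # top 4 bits only
```

Decoding more planes refines the layer progressively, so a decoder can stop early at a lower quality level.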

An Integrated Representation & Compression Scheme Based on Convolutional Autoencoders with 4D DCT Perceptual Encoding for High Dynamic Range Light Fields

Jun 21, 2022

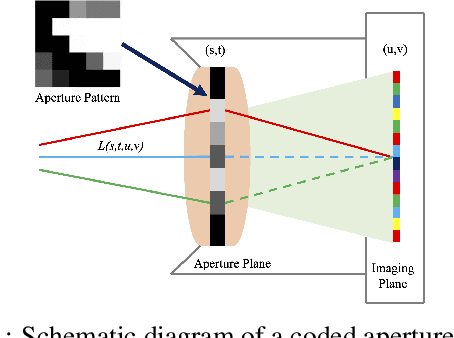

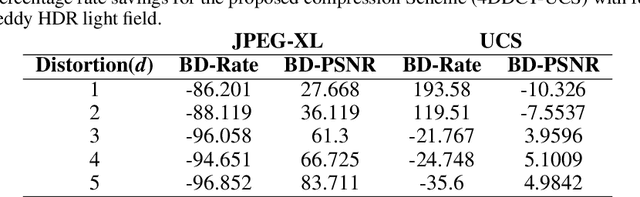

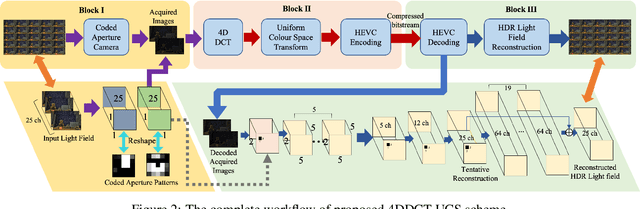

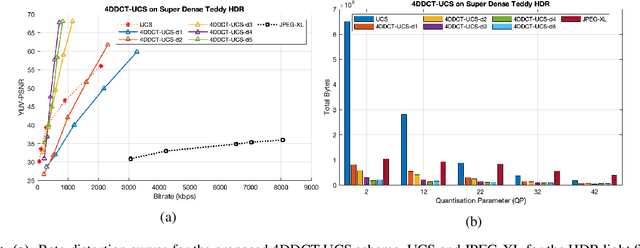

Abstract: Emerging and existing light field displays are highly capable of realistically presenting 3D scenes on glasses-free auto-stereoscopic platforms. The large size of light field data is a major drawback for 3D display and streaming applications, and the size increases drastically when the light field has a high dynamic range (HDR). In this paper, we propose a novel compression algorithm for HDR light fields that yields perceptually lossless compression. The algorithm exploits the inter-view and intra-view correlations of the HDR light field by interpreting it as a four-dimensional volume. The HDR light field compression is based on a novel 4DDCT-UCS (4D-DCT Uniform Colour Space) algorithm. Further encoding of the images produced by 4DDCT-UCS with HEVC eliminates intra-frame, inter-frame, and intrinsic redundancies in the HDR light field data. Comparisons with state-of-the-art coders such as JPEG-XL and an HDR video coding algorithm show that the proposed scheme achieves superior compression performance on real-world light fields.
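The idea of treating a light field as a 4D volume can be sketched with an orthonormal 4D DCT-II from `scipy.fft`, followed by uniform quantisation of the coefficients. This is only an assumed illustration of a 4D-DCT step. The `(u, v, y, x)` axis order, the single channel, the uniform step size, and the helper names `dct4d_compress` and `dct4d_decompress` are hypothetical. The uniform colour space conversion and the HEVC stage described in the abstract are not modelled.

```python
import numpy as np
from scipy.fft import dctn, idctn


def dct4d_compress(lf, step):
    """Orthonormal DCT-II over all four axes (u, v, y, x), then uniform quantisation."""
    coeffs = dctn(lf, type=2, norm="ortho")
    return np.round(coeffs / step).astype(np.int32)


def dct4d_decompress(q, step):
    """Dequantise and apply the inverse orthonormal 4D DCT."""
    return idctn(q.astype(np.float64) * step, type=2, norm="ortho")


# A constant 4x4 grid of 8x8 views: all energy lands in the single DC coefficient.
flat = np.full((4, 4, 8, 8), 10.0)
q_flat = dct4d_compress(flat, step=1.0)

# A random light field with a very fine step reconstructs almost exactly.
rng = np.random.default_rng(0)
lf = rng.random((4, 4, 8, 8))
lf_hat = dct4d_decompress(dct4d_compress(lf, step=1e-6), step=1e-6)
```

Because the transform is orthonormal, the L2 quantisation error in the coefficient domain equals the L2 error in the sample domain. A light field with strong inter-view correlation concentrates its energy in few low-frequency coefficients, leaving many zeros that are cheap to code afterwards.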
