Saida Bouakaz

A novel database of Children's Spontaneous Facial Expressions

Jan 20, 2019

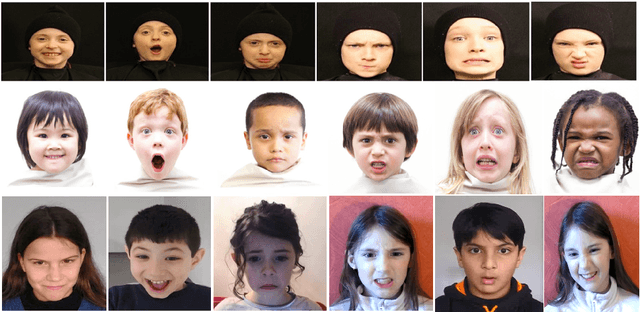

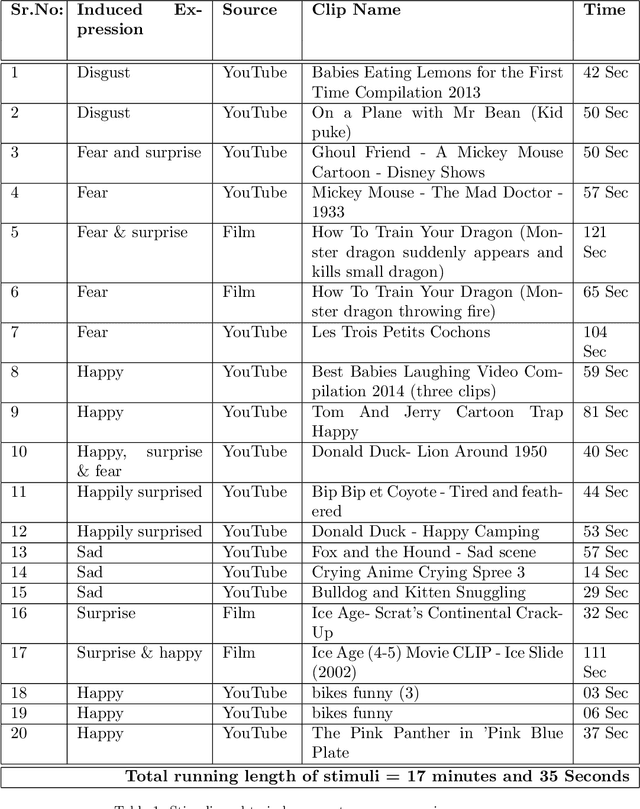

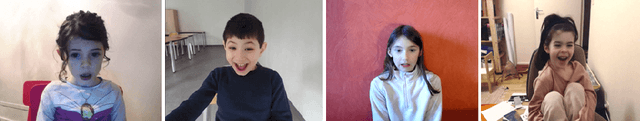

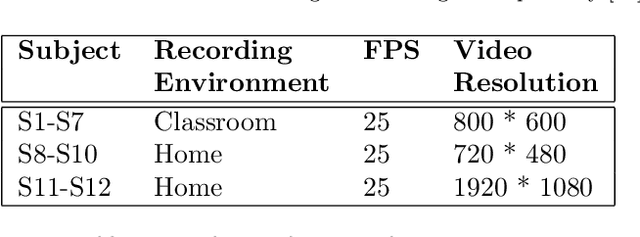

Abstract: Computing environments are moving towards human-centered designs instead of computer-centered designs, and humans tend to communicate a wealth of information through affective states or expressions. Traditional Human Computer Interaction (HCI) based systems ignore the bulk of the information communicated through those affective states and cater only for the user's intentional input. Generally, for evaluating and benchmarking different facial expression analysis algorithms, standardized databases are needed to enable a meaningful comparison. In the absence of comparative tests on such standardized databases, it is difficult to find the relative strengths and weaknesses of different facial expression recognition algorithms. In this article we present a novel video database of Children's Spontaneous facial Expressions (LIRIS-CSE). The proposed video database contains six basic spontaneous facial expressions shown by 12 ethnically diverse children between the ages of 6 and 12 years, with a mean age of 7.3 years. To the best of our knowledge, this database is the first of its kind, as it records and shows spontaneous facial expressions of children. Previously there were few databases of children's expressions, and all of them show posed or exaggerated expressions, which differ from spontaneous or natural expressions. Thus, this database will be a milestone for human behavior researchers and an excellent resource for the vision community for benchmarking and comparing results. In this article, we also propose a framework for automatic expression recognition based on a convolutional neural network (CNN) architecture with a transfer learning approach. The proposed architecture achieved an average classification accuracy of 75% on our proposed database, i.e. LIRIS-CSE.

Extraction of Facial Feature Points Using Cumulative Histogram

Mar 15, 2012

Abstract: This paper proposes a novel adaptive algorithm that automatically extracts facial feature points, such as eyebrow corners, eye corners, nostrils, nose tip, and mouth corners, in frontal-view faces. It is based on a cumulative histogram approach with varying threshold values. First, the method uses the Viola-Jones face detector to locate the face and crop the face region from the image. Based on the structure of the human face, six relevant regions (right eyebrow, left eyebrow, right eye, left eye, nose, and mouth) are then cropped from the face image. Next, the histogram of each cropped region is computed, and its cumulative histogram is thresholded at varying values to create a new filtered image in an adaptive way. The connected component of the area of interest in each filtered image indicates the corresponding feature region. A simple linear search algorithm for the eyebrow, eye, and mouth filtered images, and a contour algorithm for the nose filtered image, are applied to extract the desired corner points automatically. The method was tested on the large BioID frontal face database under varying expressions and lighting conditions, and the experiments achieved an average success rate of 95.27%.

* 8 pages, 6 figures, 3 equations, 2 tables, 20 references
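
The cumulative-histogram filtering and linear corner search described in the abstract above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the `fraction` parameter, and the assumption that feature pixels are the darkest ones in a cropped region are all hypothetical choices made for illustration. Face detection and region cropping are assumed to have happened already.

```python
import numpy as np


def cumulative_histogram_mask(region, fraction):
    """Keep the darkest pixels of an 8-bit grayscale region.

    `fraction` (hypothetical parameter) is the share of pixels to keep;
    varying it plays the role of the varying threshold in the abstract.
    Assumes the feature of interest is darker than its surroundings.
    """
    region = np.asarray(region, dtype=np.uint8)
    hist = np.bincount(region.ravel(), minlength=256)
    cdf = np.cumsum(hist) / region.size
    # Smallest intensity whose cumulative share reaches `fraction`.
    threshold = int(np.searchsorted(cdf, fraction))
    return region <= threshold


def horizontal_corners(mask):
    """Linear search for the leftmost and rightmost foreground pixels.

    Returns ((row, col), (row, col)) or None if the mask is empty.
    """
    rows, cols = np.nonzero(mask)
    if cols.size == 0:
        return None
    left = int(np.argmin(cols))
    right = int(np.argmax(cols))
    return (int(rows[left]), int(cols[left])), (int(rows[right]), int(cols[right]))


# Synthetic "eye" region: bright background with one dark horizontal stripe.
region = np.full((5, 8), 200, dtype=np.uint8)
region[2, 2:6] = 20
mask = cumulative_histogram_mask(region, fraction=0.05)
corners = horizontal_corners(mask)
```

In this sketch the connected-component step is omitted; a real pipeline would likely select the relevant component of the mask before searching for corners.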
