Sahar Imtiaz

Coordinates-based Resource Allocation Through Supervised Machine Learning

May 13, 2020

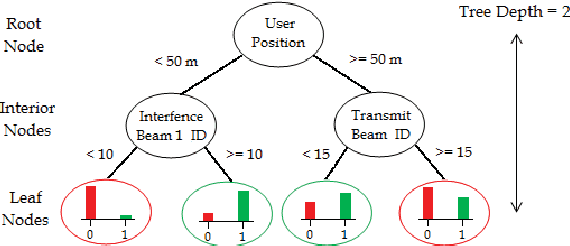

Abstract: Appropriate allocation of system resources is essential for meeting the increased user-traffic demands of next-generation wireless technologies. Traditionally, the system relies on the channel state information (CSI) of the users to optimize resource allocation, which becomes costly under fast-varying channel conditions. Future wireless technologies will be based on dense network deployments in which mobile terminals are in line-of-sight of the transmitters. The position information of the terminals therefore provides an alternative way to estimate the channel condition. In this work, we propose a coordinates-based resource allocation scheme using supervised machine learning techniques. We investigate how well this scheme performs compared with the traditional approach under various propagation conditions. As a first step, we consider a simple system setup in which a single transmitter serves a single mobile user. The performance results show that the coordinates-based resource allocation scheme achieves performance very close to that of the CSI-based scheme, even when the available terminal coordinates are erroneous. The proposed scheme also performs consistently well in realistic-system simulations and requires only 4 s of training time. The appropriate resource allocation is predicted in less than 90 microseconds with a learnt model smaller than 1 kB.
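The idea of replacing CSI with coordinates can be illustrated with a small, self-contained sketch. The abstract does not specify the channel model, the resource being allocated, or the learning algorithm, so every choice below is an assumption for illustration only. The sketch uses a single transmitter at the origin and a hypothetical LOS log-distance path-loss model with log-normal shadowing. It treats the "resource" as a modulation and coding scheme (MCS) index chosen from hypothetical SNR thresholds, and it uses k-nearest neighbours as a stand-in supervised learner. The CSI-based baseline labels each user from the true SNR. The learner sees only (possibly erroneous) coordinates and tries to reproduce those labels.

```python
import heapq
import math
import random
from collections import Counter

# Hypothetical SNR thresholds (dB) for four MCS levels; index 0 is the most robust.
MCS_THRESHOLDS_DB = [0.0, 6.0, 12.0, 18.0]
HALF_WIDTH_M = 500.0  # assumed: users dropped uniformly in a 1 km x 1 km square


def snr_db(x, y, tx_power_dbm=10.0, noise_dbm=-90.0, exponent=2.0, shadow_db=0.0):
    """Assumed LOS log-distance path loss (40 dB at 1 m), transmitter at the origin."""
    d = max(math.hypot(x, y), 1.0)
    path_loss_db = 40.0 + 10.0 * exponent * math.log10(d)
    return tx_power_dbm - path_loss_db - noise_dbm + shadow_db


def csi_based_mcs(snr):
    """CSI-based baseline: the highest MCS whose threshold the measured SNR meets."""
    best = 0
    for idx, threshold in enumerate(MCS_THRESHOLDS_DB):
        if snr >= threshold:
            best = idx
    return best


def make_dataset(n, rng, pos_error_m=0.0, shadow_sigma_db=2.0):
    """(reported coordinates, CSI-based MCS label) pairs for n random user drops."""
    data = []
    for _ in range(n):
        x = rng.uniform(-HALF_WIDTH_M, HALF_WIDTH_M)
        y = rng.uniform(-HALF_WIDTH_M, HALF_WIDTH_M)
        # The label comes from the true channel, including shadowing ...
        label = csi_based_mcs(snr_db(x, y, shadow_db=rng.gauss(0.0, shadow_sigma_db)))
        # ... but the learner only sees coordinates, with Gaussian position error.
        reported = (x + rng.gauss(0.0, pos_error_m), y + rng.gauss(0.0, pos_error_m))
        data.append((reported, label))
    return data


def knn_predict(train, query, k=5):
    """Majority vote over the k training users closest to the query coordinates."""
    nearest = heapq.nsmallest(k, train, key=lambda s: math.dist(s[0], query))
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, test, k=5):
    """Fraction of test users whose predicted MCS matches the CSI-based MCS."""
    hits = sum(knn_predict(train, q, k) == label for q, label in test)
    return hits / len(test)


if __name__ == "__main__":
    rng = random.Random(0)
    train = make_dataset(2000, rng, pos_error_m=10.0)
    test = make_dataset(500, rng, pos_error_m=10.0)
    print(f"agreement with CSI-based MCS: {accuracy(train, test):.2f}")
```

Varying `exponent` or `shadow_sigma_db` roughly mimics different propagation conditions, and `pos_error_m` mimics erroneous coordinates. Agreement falls short of 100% mainly because shadowing makes the CSI-based label random for a given position. This is consistent in spirit with a coordinates-based scheme coming close to, but not matching, a CSI-based one.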

Learning-Based Resource Allocation Scheme for TDD-Based CRAN System

Aug 29, 2016

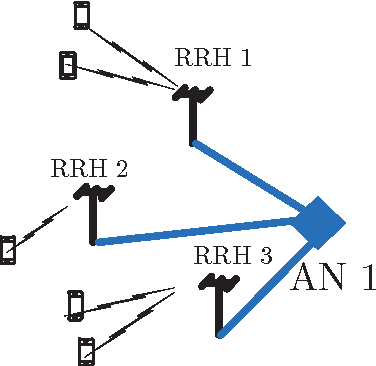

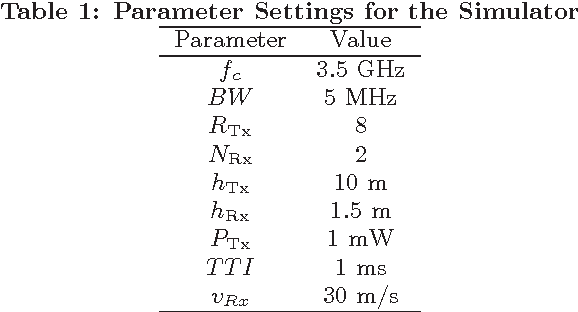

Abstract: Explosive growth in the use of smart wireless devices has necessitated higher data rates and always-on connectivity, which are the main motivators for designing fifth-generation (5G) systems. Massive antenna deployment with tight coordination is one potential strategy for achieving higher system efficiency in 5G systems, but it incurs two types of system overhead. The first is synchronization overhead. This can be reduced with a cloud radio access network (CRAN)-based architecture that separates baseband processing from radio access functionality to achieve better system synchronization. The second is the overhead of acquiring the channel state information (CSI) of the users in the system, which increases tremendously when instantaneous CSI is used to serve high-mobility users. To serve a large number of users, a CRAN system with a dense deployment of remote radio heads (RRHs) is considered, such that each user has a line-of-sight (LOS) link with the corresponding RRH. Since the movement trajectory of high-mobility users is predictable, fairly accurate position estimates can be obtained for those users and used for resource allocation. Resource allocation depends on various correlated system parameters, and these correlations can be learned using well-known *machine learning* algorithms. This paper proposes a novel *learning-based resource allocation scheme* for time division duplex (TDD)-based 5G CRAN systems with dense RRH deployment. The scheme uses only the users' position estimates for resource allocation, thus avoiding the need for CSI acquisition. This significantly reduces the overall system overhead while still achieving near-optimal system performance, resulting in better effective system efficiency. (See the paper for the full abstract.)
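The abstract's argument rests on two steps. Because a high-mobility user's trajectory is predictable, its next position can be estimated without CSI. With dense RRHs and LOS links, that position then determines which RRH serves the user. The sketch below illustrates only these two steps under stated assumptions. It uses a least-squares constant-velocity fit as a simple stand-in for whatever position predictor the paper uses, and the hypothetical rule "serve from the nearest RRH" as a proxy for the LOS association. In the proposed scheme, the predicted coordinates would then be the sole input to a learned allocation model, which is not reproduced here.

```python
import math


def predict_position(track, dt_ahead):
    """Least-squares constant-velocity fit to (t, x, y) samples, extrapolated
    dt_ahead seconds past the last sample (assumed motion model)."""
    if len(track) < 2:
        raise ValueError("need at least two position samples")
    n = len(track)
    t_mean = sum(t for t, _, _ in track) / n
    x_mean = sum(x for _, x, _ in track) / n
    y_mean = sum(y for _, _, y in track) / n
    var_t = sum((t - t_mean) ** 2 for t, _, _ in track)
    if var_t == 0:
        raise ValueError("samples must have distinct timestamps")
    # Slopes of the fitted lines x(t) and y(t) are the velocity estimates.
    vx = sum((t - t_mean) * (x - x_mean) for t, x, _ in track) / var_t
    vy = sum((t - t_mean) * (y - y_mean) for t, _, y in track) / var_t
    t_next = track[-1][0] + dt_ahead
    return (x_mean + vx * (t_next - t_mean), y_mean + vy * (t_next - t_mean))


def serving_rrh(position, rrhs):
    """Index of the RRH nearest to the predicted position (hypothetical LOS proxy)."""
    return min(range(len(rrhs)), key=lambda i: math.dist(position, rrhs[i]))


if __name__ == "__main__":
    # Hypothetical user moving at 10 m/s along a road lined with RRHs every 50 m.
    track = [(0.0, 0.0, 0.0), (1.0, 10.0, 0.0), (2.0, 20.0, 0.0)]
    rrhs = [(0.0, 5.0), (50.0, 5.0), (100.0, 5.0)]
    pos = predict_position(track, dt_ahead=1.0)
    print(pos, serving_rrh(pos, rrhs))
```

For the toy track, the predicted position one second ahead is (30.0, 0.0), and RRH index 1 is selected. No CSI is used at any point. That is the source of the overhead reduction the abstract describes.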
