Ryan P. O'Shea

Naval Air Warfare Center Aircraft Division Lakehurst

Monocular Simultaneous Localization and Mapping using Ground Textures

Mar 10, 2023

Abstract: Recent work has shown impressive localization performance using only images of ground textures taken with a downward-facing monocular camera. This provides a reliable navigation method that is robust to feature-sparse environments and challenging lighting conditions. However, these localization methods require an existing map for comparison. Our work aims to relax the need for a map by introducing a full simultaneous localization and mapping (SLAM) system. By not requiring an existing map, setup times are minimized and the system is more robust to changing environments. The SLAM system combines several techniques. Image keypoints are identified and projected into the ground plane. These keypoints, visual bags of words, and several threshold parameters are then used to identify overlapping images and revisited areas. The system then uses robust M-estimators to estimate the transform between robot poses with overlapping images and revisited areas. These optimized estimates make up the map used for navigation. We show, through experimental data, that this system performs reliably on many ground textures, but not all.
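To illustrate the idea of robust transform estimation mentioned in the abstract, the following is a minimal sketch, not the paper's implementation. It assumes that matched keypoints are already expressed as 2D points in the ground plane, and it swaps in one common M-estimator (Huber weights solved by iteratively reweighted least squares) as a stand-in. The function names, the `delta` threshold, and the iteration count are all hypothetical choices made for this example.

```python
import math

def huber_weight(residual, delta):
    """Huber weight: 1 inside the threshold, delta/residual outside it."""
    return 1.0 if residual <= delta else delta / residual

def weighted_rigid_2d(src, dst, weights):
    """Closed-form weighted least-squares rotation + translation (dst ~ R*src + t)."""
    total = sum(weights)
    msx = sum(w * p[0] for w, p in zip(weights, src)) / total
    msy = sum(w * p[1] for w, p in zip(weights, src)) / total
    mdx = sum(w * p[0] for w, p in zip(weights, dst)) / total
    mdy = sum(w * p[1] for w, p in zip(weights, dst)) / total
    a = b = 0.0
    for w, (sx, sy), (dx, dy) in zip(weights, src, dst):
        sx, sy, dx, dy = sx - msx, sy - msy, dx - mdx, dy - mdy
        a += w * (sx * dx + sy * dy)   # weighted dot-product term
        b += w * (sx * dy - sy * dx)   # weighted cross-product term
    theta = math.atan2(b, a)
    c, s = math.cos(theta), math.sin(theta)
    tx = mdx - (c * msx - s * msy)
    ty = mdy - (s * msx + c * msy)
    return theta, tx, ty

def estimate_transform_irls(src, dst, delta=0.05, iterations=30):
    """Iteratively reweighted least squares with Huber weights (assumed parameters)."""
    weights = [1.0] * len(src)
    theta = tx = ty = 0.0
    for _ in range(iterations):
        theta, tx, ty = weighted_rigid_2d(src, dst, weights)
        c, s = math.cos(theta), math.sin(theta)
        weights = []
        for (sx, sy), (dx, dy) in zip(src, dst):
            # Distance between the observed point and the transformed source point.
            r = math.hypot(dx - (c * sx - s * sy + tx), dy - (s * sx + c * sy + ty))
            weights.append(huber_weight(r, delta))
    return theta, tx, ty
```

Calling `estimate_transform_irls(src, dst)` with two equal-length lists of `(x, y)` tuples returns an angle in radians and a translation. Mismatched keypoints receive progressively smaller weights, so a handful of bad matches has a bounded effect on the estimate rather than dominating it.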

Automatic Generation of Machine Learning Synthetic Data Using ROS

Jun 08, 2021

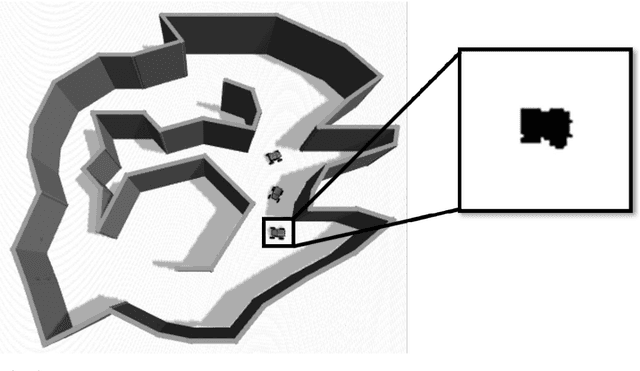

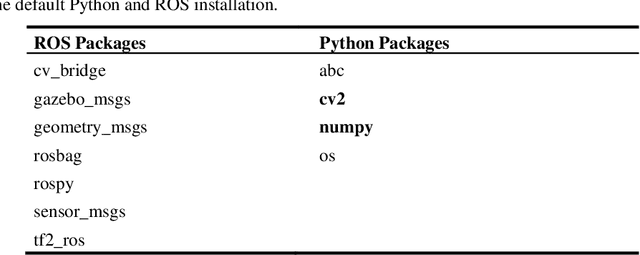

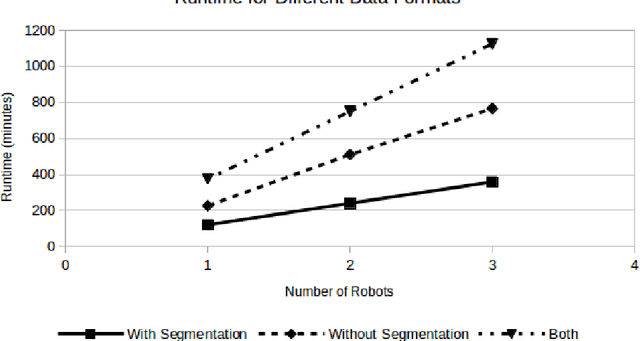

Abstract: Data labeling is a time-intensive process. As such, many data scientists use various tools to aid in the data generation and labeling process. While these tools help automate labeling, many still require user interaction throughout the process. Additionally, most target only a few network frameworks. Any researchers exploring multiple frameworks must find additional tools or write conversion scripts. This paper presents an automated tool for generating synthetic data in arbitrary network formats. It uses Robot Operating System (ROS) and Gazebo, which are common tools in the robotics community. Through ROS paradigms, it allows extensive user customization of the simulation environment and data generation process. Additionally, a plugin-like framework allows the development of arbitrary data format writers without the need to change the main body of code. Using this tool, the authors were able to generate an arbitrarily large image dataset for three unique training formats using approximately 15 min of user setup time and a variable amount of hands-off run time, depending on the dataset size. The source code for this data generation tool is available at https://github.com/Navy-RISE-Lab/nn_data_collection
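The plugin-like writer idea described in the abstract can be sketched in a few lines. This is only an illustration of the general pattern, not the tool's actual code or API: the registry, the `LabelWriter` base class, the two example formats, and the label dictionary layout are all hypothetical names assumed for this example.

```python
from abc import ABC, abstractmethod

# Hypothetical registry mapping a format name to a writer class.
WRITERS = {}

def register_writer(name):
    """Class decorator that adds a writer to the registry under `name`."""
    def decorator(cls):
        WRITERS[name] = cls
        return cls
    return decorator

class LabelWriter(ABC):
    """Interface every format writer implements (assumed for this sketch)."""
    @abstractmethod
    def format_label(self, label, image_size):
        ...

@register_writer("yolo_like")
class YoloLikeWriter(LabelWriter):
    def format_label(self, label, image_size):
        # Normalized center/size layout: class x_center y_center width height.
        width, height = image_size
        xmin, ymin, xmax, ymax = label["box"]
        xc = (xmin + xmax) / 2 / width
        yc = (ymin + ymax) / 2 / height
        bw = (xmax - xmin) / width
        bh = (ymax - ymin) / height
        return f"{label['class_id']} {xc:.4f} {yc:.4f} {bw:.4f} {bh:.4f}"

@register_writer("csv")
class CsvWriter(LabelWriter):
    def format_label(self, label, image_size):
        # Plain pixel coordinates: class,xmin,ymin,xmax,ymax.
        return ",".join(str(v) for v in (label["class_id"], *label["box"]))

def export(label, image_size, formats):
    """Run one label through every requested writer without touching core code."""
    return {name: WRITERS[name]().format_label(label, image_size) for name in formats}
```

With this layout, supporting a new training format means adding one decorated class. The `export` function, standing in for the main body of code, stays unchanged.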
