Ruyi Tao

Predicting Company Growth by Econophysics informed Machine Learning

Oct 23, 2024

Abstract: Predicting company growth is crucial for strategic adjustment, operational decision-making, risk assessment, and loan eligibility reviews. Traditional models for company growth often focus too much on theory, overlooking practical forecasting, or they rely solely on time series forecasting techniques, ignoring interpretability and the inherent mechanisms of company growth. In this paper, we propose a machine learning-based prediction framework that incorporates an econophysics model for company growth. Our model captures both the intrinsic growth mechanisms of companies led by scaling laws and the fluctuations influenced by random factors and individual decisions, demonstrating superior predictive performance compared with methods that use time series techniques alone. Its advantages are more pronounced in long-range prediction tasks. By explicitly modeling the baseline growth and volatility components, our model is more interpretable.

Neural Network Pruning by Gradient Descent

Nov 22, 2023

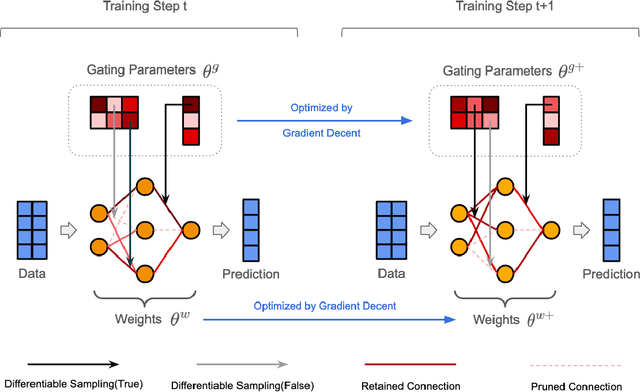

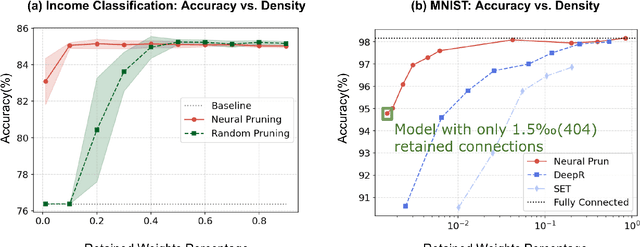

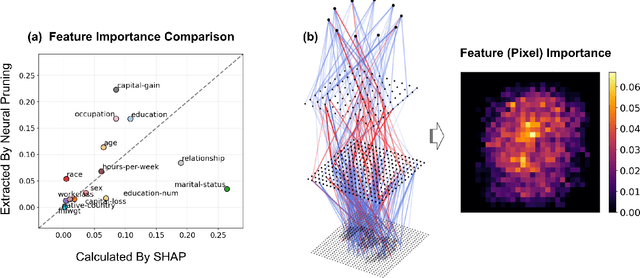

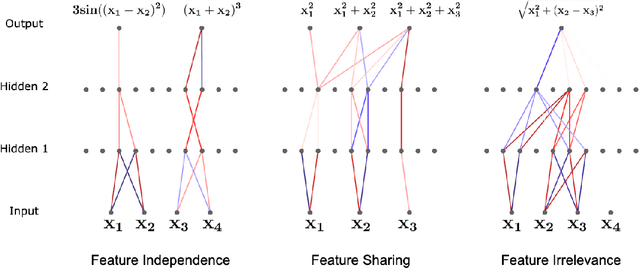

Abstract: The rapid increase in the parameters of deep learning models has led to significant costs, challenging computational efficiency and model interpretability. In this paper, we introduce a novel and straightforward neural network pruning framework that incorporates the Gumbel-Softmax technique. This framework enables the simultaneous optimization of a network's weights and topology in an end-to-end process using stochastic gradient descent. Empirical results demonstrate its exceptional compression capability, maintaining high accuracy on the MNIST dataset with only 0.15% of the original network parameters. Moreover, our framework enhances neural network interpretability, not only by allowing easy extraction of feature importance directly from the pruned network but also by enabling visualization of feature symmetry and the pathways of information propagation from features to outcomes. Although the pruning strategy is learned through deep learning, it is surprisingly intuitive and understandable, focusing on selecting key representative features and exploiting data patterns to achieve extreme sparse pruning. We believe our method opens a promising new avenue for deep learning pruning and the creation of interpretable machine learning systems.
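For readers unfamiliar with the Gumbel-Softmax technique named in this abstract, the following is a minimal sketch of the general idea, not the paper's implementation. It draws a relaxed (soft) one-hot sample from a set of logits, which is what lets a discrete keep/drop choice be trained with gradient descent in an autodiff framework. The function names, the two-way keep/drop parameterization, and the temperature values are assumptions made for illustration.

```python
import math
import random


def gumbel_softmax(logits, temperature=1.0, rng=random):
    """Return a relaxed one-hot sample over the given logits."""
    noisy = []
    for logit in logits:
        # Gumbel(0, 1) noise by inverse transform: g = -log(-log(u)), u in (0, 1).
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / temperature)
    # Numerically stable softmax over the perturbed, temperature-scaled logits.
    peak = max(noisy)
    exps = [math.exp(v - peak) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def sample_edge_mask(keep_logit, temperature=0.5, rng=random):
    """Soft 'keep' weight for one connection (hypothetical helper)."""
    # Two-way choice between "keep" (logit keep_logit) and "drop" (logit 0).
    keep_weight, _drop_weight = gumbel_softmax([keep_logit, 0.0], temperature, rng)
    return keep_weight
```

Lower temperatures push the sample closer to a hard 0/1 decision, while higher temperatures give smoother values. In a pruning setting, a weight such as `keep_weight` would multiply the corresponding connection, so a connection whose keep logit is driven low is effectively removed.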

Graph Auto-Encoders for Network Completion

Apr 25, 2022

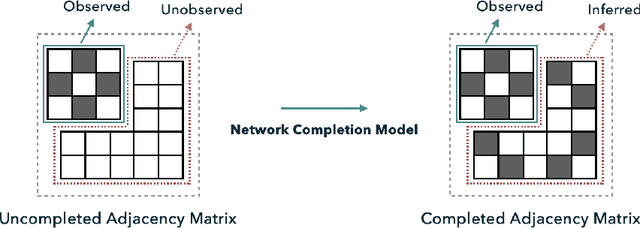

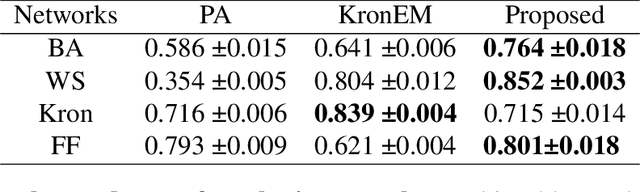

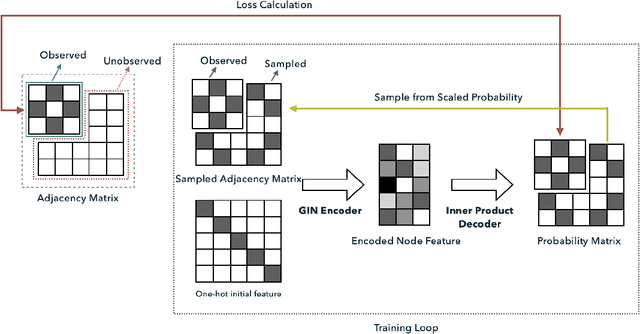

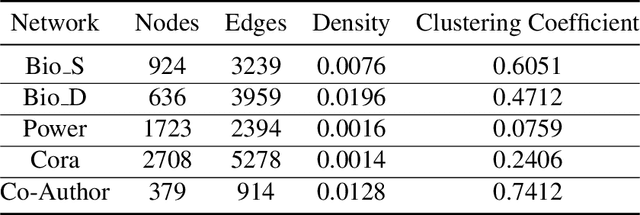

Abstract: Completing a graph means inferring the missing nodes and edges from a partially observed network. Different methods have been proposed to solve this problem, but none of them employed the pattern similarity of parts of the graph. In this paper, we propose a model to use the learned pattern of connections from the observed part of the network based on the Graph Auto-Encoder technique and generalize these patterns to complete the whole graph. Our proposed model achieved competitive performance with less information needed. Empirical analysis of synthetic datasets and real-world datasets from different domains show that our model can complete the network with higher accuracy compared with baseline prediction models in most cases. Furthermore, we also studied the character of the model and found it is particularly suitable to complete a network that has more complex local connection patterns.
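As background on the Graph Auto-Encoder technique mentioned in this abstract, here is a minimal sketch of the inner-product decoder commonly used in standard graph auto-encoders, where the probability of an edge between nodes i and j is the sigmoid of the dot product of their embeddings. It is an assumption that this decoder resembles the one in the paper; the abstract does not say. The function names and the example embeddings are hypothetical, and the encoder that would produce the embeddings is omitted.

```python
import math


def sigmoid(x):
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def decode_edges(embeddings):
    """Inner-product decoder: P(edge i-j) = sigmoid(z_i . z_j)."""
    n = len(embeddings)
    probs = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            score = sum(a * b for a, b in zip(embeddings[i], embeddings[j]))
            # Undirected graph: fill both symmetric entries, leave the diagonal at 0.
            probs[i][j] = probs[j][i] = sigmoid(score)
    return probs


# Hand-picked 2-D embeddings for three nodes (illustrative only).
example_embeddings = [[1.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]
example_probs = decode_edges(example_embeddings)
```

Nodes with similar embeddings (the first two here) receive a higher edge probability than nodes with opposing embeddings. Completing a network would then amount to thresholding or ranking these probabilities for unobserved pairs.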
