Rucheng Wu

TransCompressor: LLM-Powered Multimodal Data Compression for Smart Transportation

Nov 25, 2024

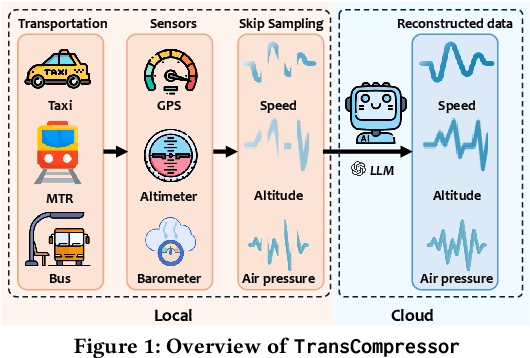

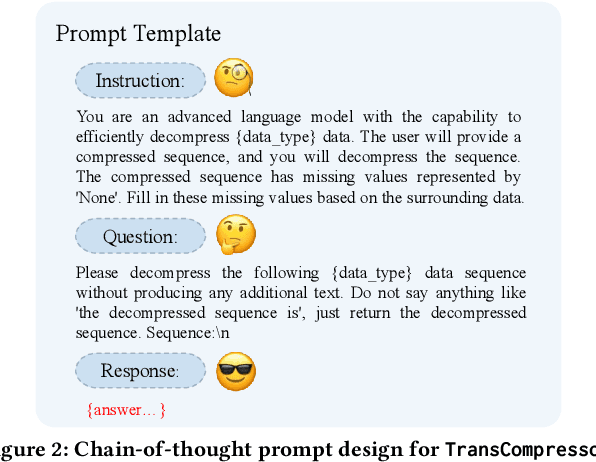

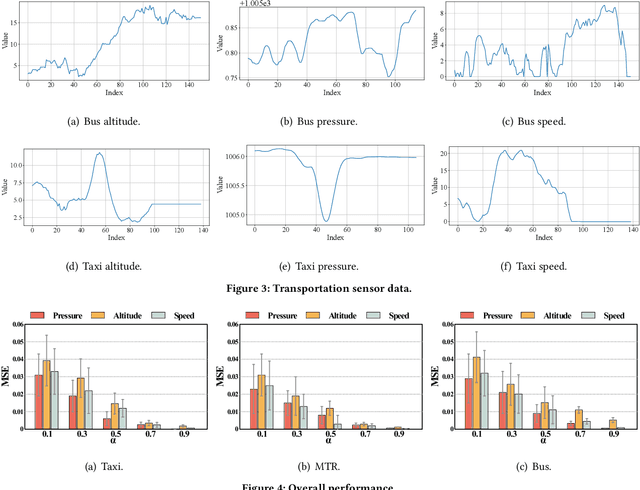

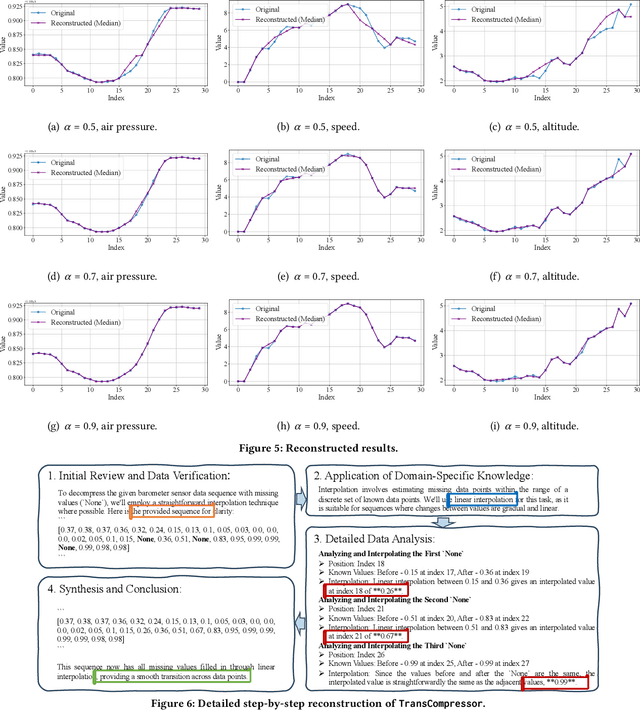

Abstract: The incorporation of Large Language Models (LLMs) into smart transportation systems has paved the way for improving data management and operational efficiency. This study introduces TransCompressor, a novel framework that leverages LLMs for efficient compression and decompression of multimodal transportation sensor data. TransCompressor has undergone thorough evaluation with diverse sensor data types, including barometer, speed, and altitude measurements, across various transportation modes like buses, taxis, and MTRs. Comprehensive evaluation illustrates the effectiveness of TransCompressor in reconstructing transportation sensor data at different compression ratios. The results highlight that, with well-crafted prompts, LLMs can utilize their vast knowledge base to contribute to data compression processes, enhancing data storage, analysis, and retrieval in smart transportation settings.
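The abstract does not spell out how compression and prompting are carried out, so the following is only an illustrative sketch of the general idea, not the TransCompressor implementation. It assumes compression is plain uniform downsampling and that reconstruction is requested through a text prompt. The function names, the prompt wording, and the error metric are all hypothetical, and no particular LLM API is called.

```python
from typing import List, Sequence


def downsample(values: Sequence[float], ratio: int) -> List[float]:
    """Keep one sample in every `ratio` (assumed compression step)."""
    if ratio < 1:
        raise ValueError("ratio must be >= 1")
    return list(values[::ratio])


def build_reconstruction_prompt(kept: Sequence[float], ratio: int,
                                sensor: str, mode: str, length: int) -> str:
    """Assemble a hypothetical prompt asking an LLM to restore the series."""
    readings = ", ".join(f"{v:.2f}" for v in kept)
    return (
        f"The following {sensor} readings were recorded on a {mode}. "
        f"Only one sample in every {ratio} was kept: [{readings}]. "
        f"Reconstruct the original series of {length} values and "
        "return them as a comma-separated list."
    )


def parse_reply(reply: str) -> List[float]:
    """Parse a comma-separated list of numbers from the model's reply."""
    cleaned = reply.replace("[", "").replace("]", "")
    return [float(tok) for tok in cleaned.split(",") if tok.strip()]


def mean_absolute_error(original: Sequence[float],
                        reconstructed: Sequence[float]) -> float:
    """Average absolute difference between two equal-length series."""
    if len(original) != len(reconstructed):
        raise ValueError("series must have the same length")
    return sum(abs(a - b) for a, b in zip(original, reconstructed)) / len(original)
```

In use, the prompt string would be sent to whichever LLM is available, the reply passed through `parse_reply`, and the result compared against the original series with `mean_absolute_error` at each compression ratio of interest.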

Are You Being Tracked? Discover the Power of Zero-Shot Trajectory Tracing with LLMs!

Mar 10, 2024

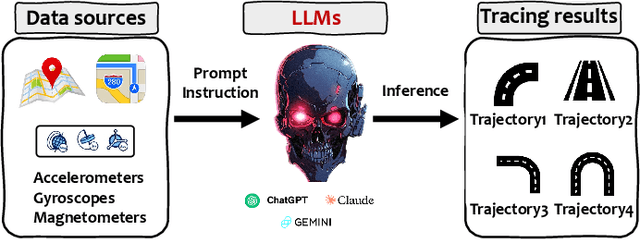

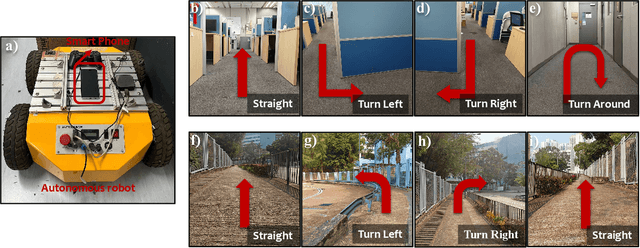

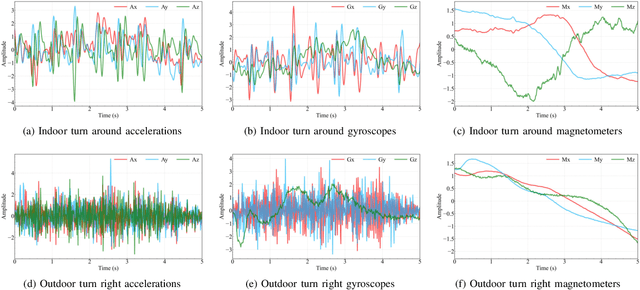

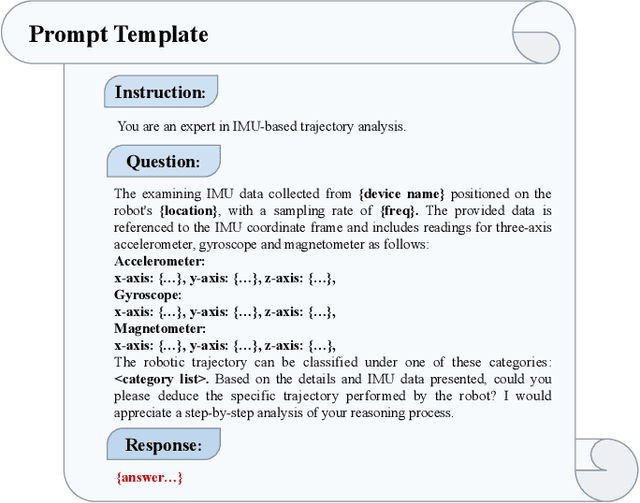

Abstract: There is a burgeoning discussion around the capabilities of Large Language Models (LLMs) in acting as fundamental components that can be seamlessly incorporated into Artificial Intelligence of Things (AIoT) to interpret complex trajectories. This study introduces LLMTrack, a model that illustrates how LLMs can be leveraged for Zero-Shot Trajectory Recognition by employing a novel single-prompt technique that combines role-play and think step-by-step methodologies with unprocessed Inertial Measurement Unit (IMU) data. We evaluate the model using real-world datasets designed to challenge it with distinct trajectories characterized by indoor and outdoor scenarios. In both test scenarios, LLMTrack not only meets but exceeds the performance benchmarks set by traditional machine learning approaches and even contemporary state-of-the-art deep learning models, all without the requirement of training on specialized datasets. The results of our research suggest that, with strategically designed prompts, LLMs can tap into their extensive knowledge base and are well-equipped to analyze raw sensor data with remarkable effectiveness.
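To make the single-prompt idea concrete, here is a minimal sketch of how a role-play instruction, raw IMU rows, and a step-by-step cue might be combined into one prompt. The prompt wording, the six-axis sample layout, and the function name are assumptions made for illustration. The actual LLMTrack prompt is not given in the abstract.

```python
from typing import Sequence, Tuple

# Assumed layout of one raw reading: ax, ay, az, gx, gy, gz.
ImuSample = Tuple[float, float, float, float, float, float]


def build_trajectory_prompt(samples: Sequence[ImuSample],
                            candidate_labels: Sequence[str]) -> str:
    """Build one prompt: role-play line, raw data, step-by-step cue."""
    rows = "\n".join(", ".join(f"{v:.3f}" for v in s) for s in samples)
    labels = ", ".join(candidate_labels)
    return (
        # Role-play component (hypothetical wording).
        "You are an expert in inertial sensor analysis.\n"
        "Each row below is one raw IMU reading: ax, ay, az, gx, gy, gz.\n"
        f"{rows}\n"
        f"Which of these trajectories does the data describe: {labels}?\n"
        # Step-by-step component (hypothetical wording).
        "Let's think step by step, then give the final label on the last line."
    )
```

Because the data is passed through unprocessed, the only preparation is formatting the numbers as text. The candidate labels are supplied by the caller and are not taken from the paper.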
