Ross Harper

End-To-End Prediction of Emotion From Heartbeat Data Collected by a Consumer Fitness Tracker

Jul 16, 2019

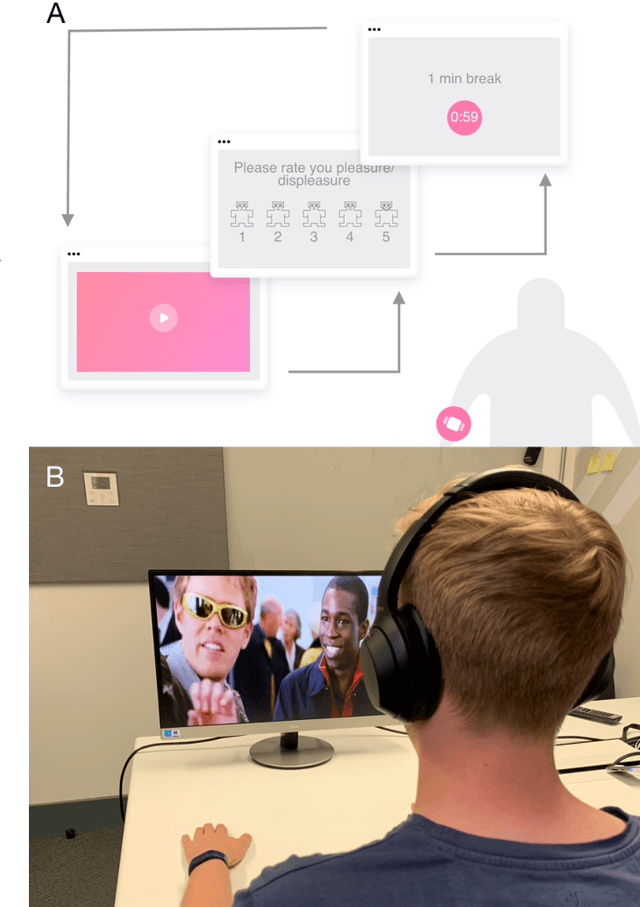

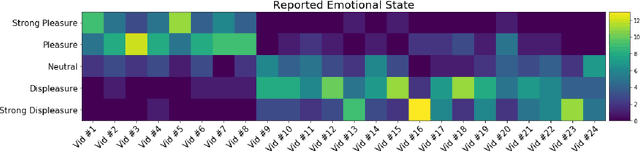

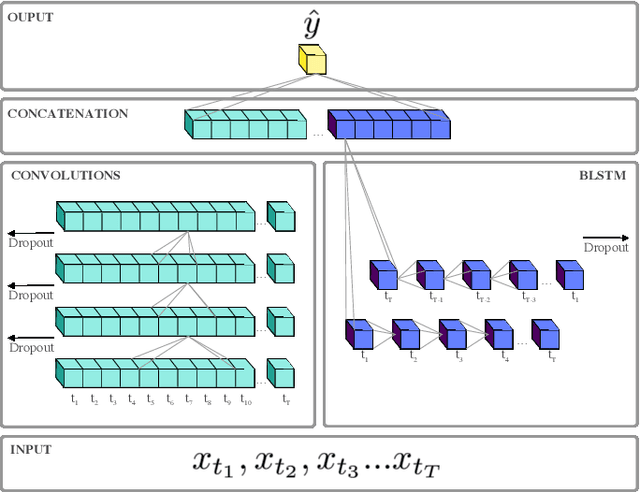

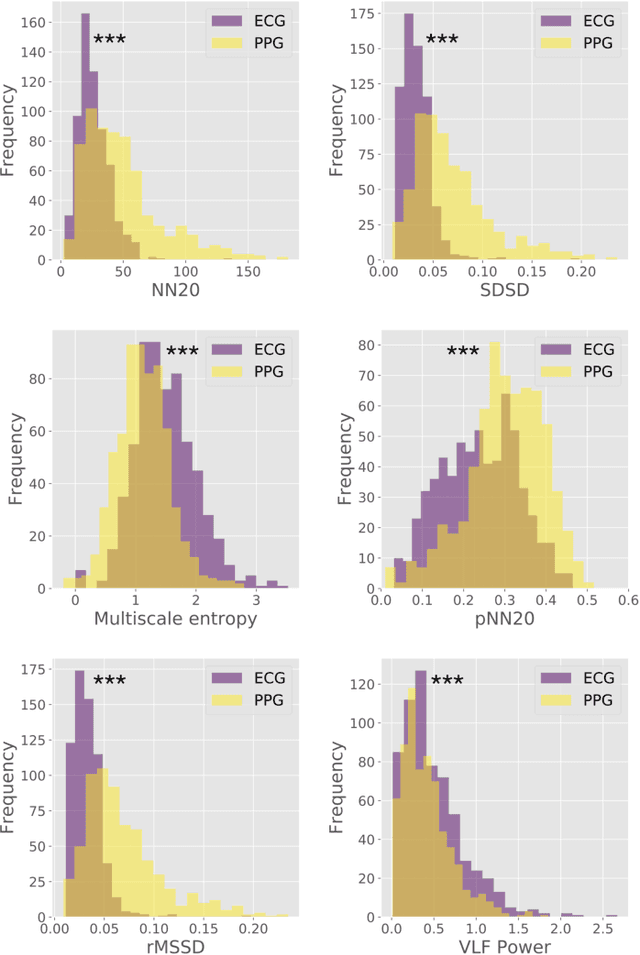

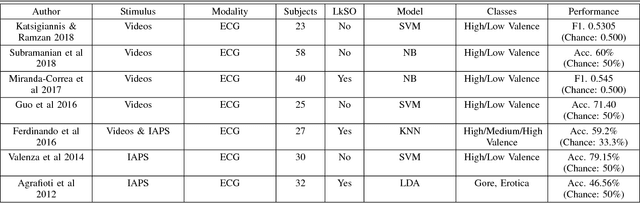

Abstract: Automatic detection of emotion has the potential to revolutionize mental health and wellbeing. Recent work has been successful in predicting affect from unimodal electrocardiogram (ECG) data. However, to be immediately relevant for real-world applications, physiology-based emotion detection must make use of ubiquitous photoplethysmogram (PPG) data collected by affordable consumer fitness trackers. Additionally, applications of emotion detection in healthcare settings will require some measure of uncertainty over model predictions. We present here a Bayesian deep learning model for end-to-end classification of emotional valence, using only the unimodal heartbeat time series collected by a consumer fitness tracker (Garmin Vívosmart 3). We collected a new dataset for this task, and report a peak F1 score of 0.7. This demonstrates the practical relevance of physiology-based emotion detection 'in the wild' today.

A Bayesian Deep Learning Framework for End-To-End Prediction of Emotion from Heartbeat

Feb 08, 2019

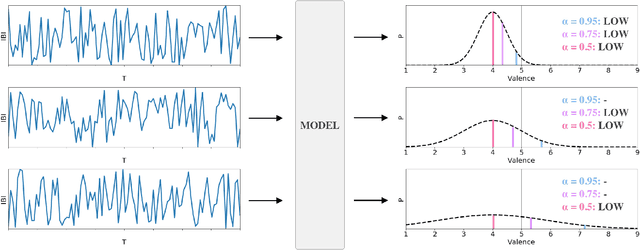

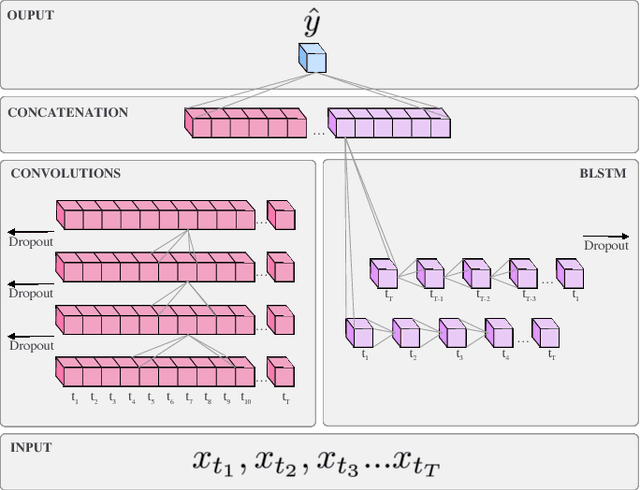

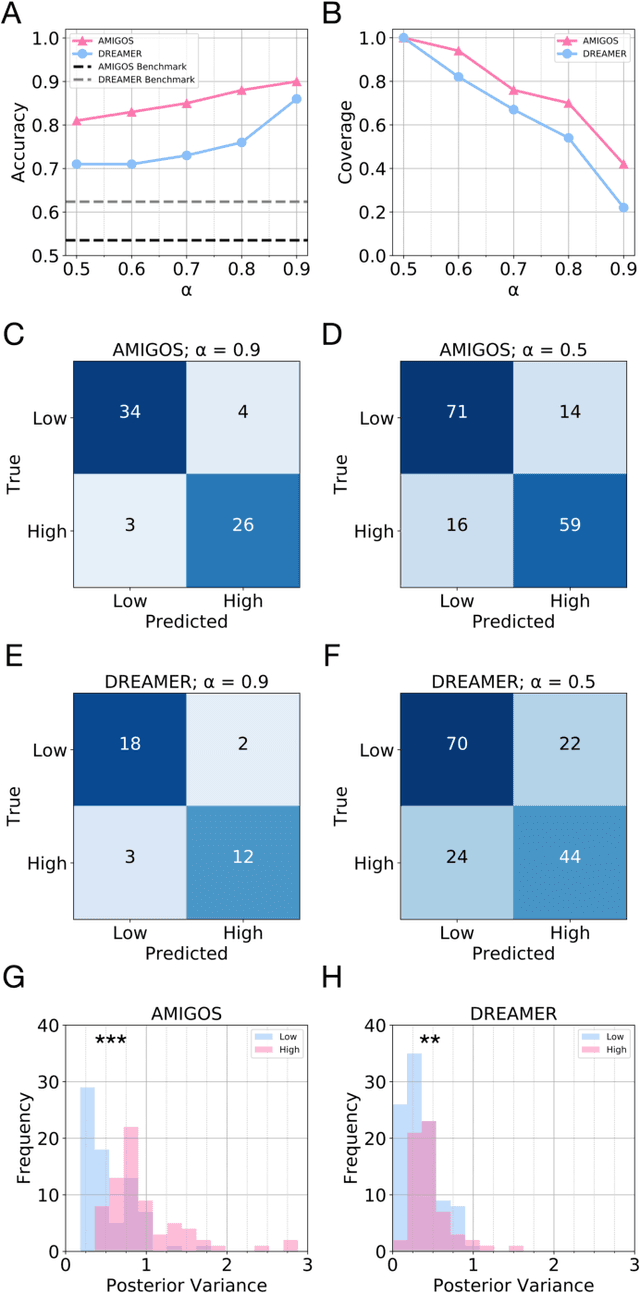

Abstract: Automatic prediction of emotion promises to revolutionise human-computer interaction. Recent trends involve fusion of multiple modalities - audio, visual, and physiological - to classify emotional state. However, practical considerations 'in the wild' limit collection of this physiological data to commoditised heartbeat sensors. Furthermore, real-world applications often require some measure of uncertainty over model output. We present here an end-to-end deep learning model for classifying emotional valence from unimodal heartbeat data. We further propose a Bayesian framework for modelling uncertainty over valence predictions, and describe a procedure for tuning output according to varying demands on confidence. We benchmarked our framework against two established datasets within the field and achieved peak classification accuracy of 90%. These results lay the foundation for applications of affective computing in real-world domains such as healthcare, where a high premium is placed on non-invasive collection of data, and predictive certainty.
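
The abstract does not spell out how output is tuned to a required confidence level, so the following is only a rough sketch of one common way such a step could look, not the paper's procedure. It assumes a Bayesian model has already produced several sampled probabilities of positive valence per input; the function name `predict_with_abstention` and the parameter `min_confidence` are hypothetical.

```python
import numpy as np

def predict_with_abstention(prob_samples, min_confidence=0.8):
    """Classify valence from sampled probabilities, flagging uncertain cases.

    prob_samples: array of shape (n_samples, n_items), where each row is one
    stochastic draw of P(positive valence) for every item (an assumption
    about how the Bayesian model exposes its uncertainty).
    """
    prob_samples = np.asarray(prob_samples, dtype=float)
    # Fraction of sampled predictions that vote for the positive class.
    frac_positive = (prob_samples > 0.5).mean(axis=0)
    # Majority vote across samples gives the predicted label (ties -> 1).
    labels = (frac_positive >= 0.5).astype(int)
    # Confidence is the share of samples agreeing with the majority label.
    confidence = np.maximum(frac_positive, 1.0 - frac_positive)
    # Only predictions meeting the confidence demand are accepted.
    accepted = confidence >= min_confidence
    return labels, confidence, accepted
```

Raising `min_confidence` would accept fewer predictions but keep only those on which the sampled outputs agree more strongly, which is one way to trade coverage against certainty.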
