A Bayesian Deep Learning Framework for End-To-End Prediction of Emotion from Heartbeat

Feb 08, 2019

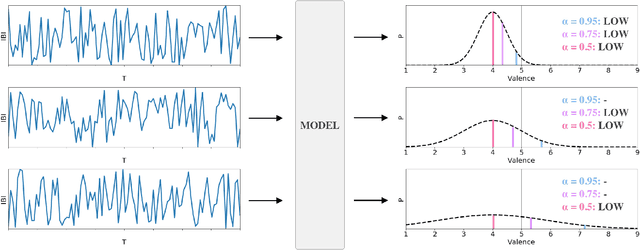

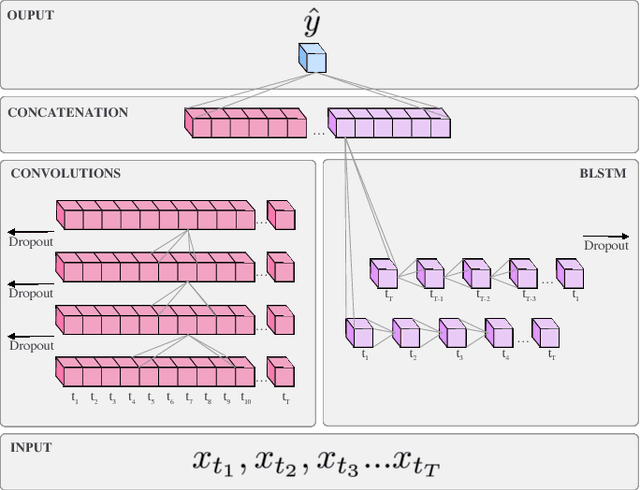

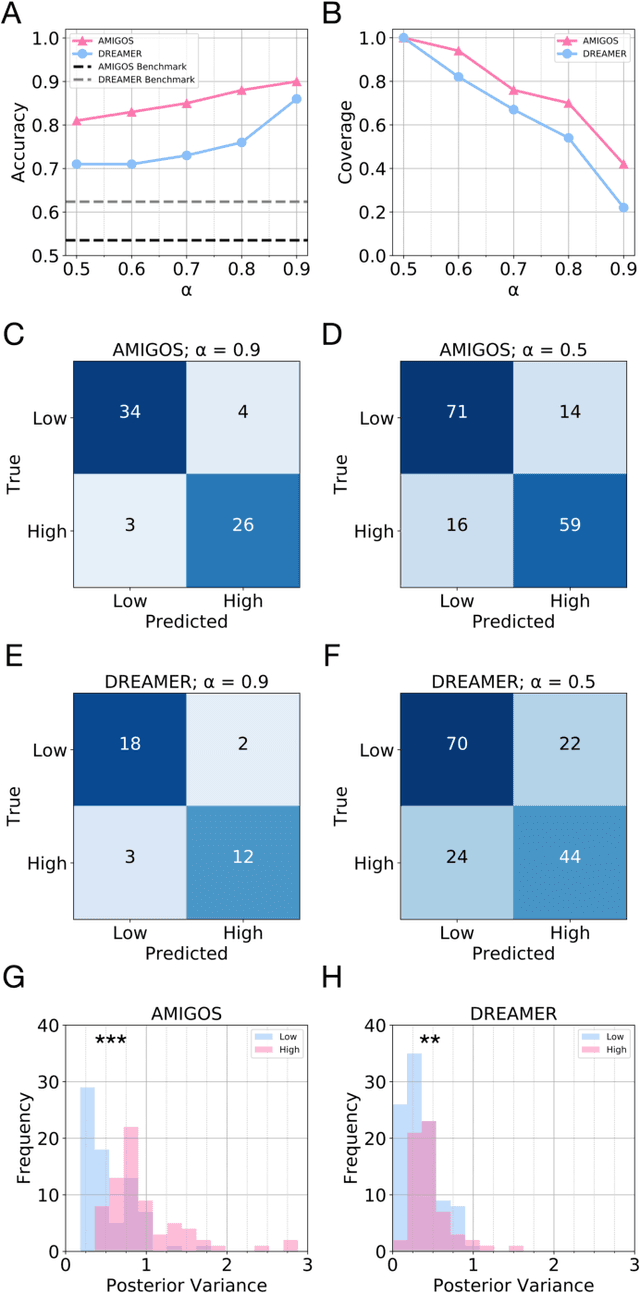

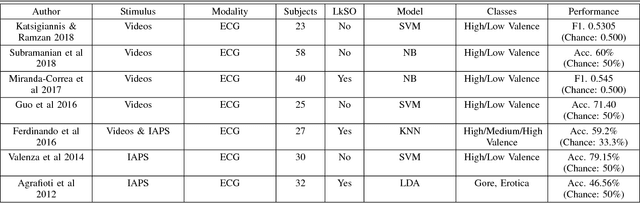

Automatic prediction of emotion promises to revolutionise human-computer interaction. Recent work fuses multiple modalities (audio, visual, and physiological) to classify emotional state. In practice, however, collecting physiological data 'in the wild' is often limited to commodity heartbeat sensors. Real-world applications also frequently require some measure of uncertainty over model output. We present an end-to-end deep learning model that classifies emotional valence from unimodal heartbeat data. We further propose a Bayesian framework for modelling uncertainty over valence predictions, and describe a procedure for tuning the model's output to varying demands on confidence. We benchmarked the framework on two established datasets in the field and achieved a peak classification accuracy of 90%. These results lay the foundation for applying affective computing in real-world domains such as healthcare, where non-invasive data collection and predictive certainty are both at a premium.
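The abstract does not say how the posterior over valence predictions is approximated or how output is tuned to confidence demands. The following is a minimal sketch under stated assumptions, not the authors' method. It assumes binary valence (positive vs. negative). It also assumes that T stochastic forward passes of the network each yield a probability of positive valence; Monte Carlo dropout is one common way to obtain such passes, and here it is our assumption. The sketch averages the samples, abstains when confidence falls below a threshold, and measures how coverage trades against accuracy as the threshold changes. The function names `predictive_summary`, `classify` and `coverage_and_accuracy` are hypothetical, and the toy data are made-up numbers, not results from the paper.

```python
import math
from statistics import mean
from typing import List, Optional, Sequence, Tuple


def predictive_summary(samples: Sequence[float]) -> Tuple[float, float]:
    """Return the mean P(positive valence) and its binary entropy in nats.

    `samples` holds one probability per stochastic forward pass
    (assumed input format, e.g. from Monte Carlo dropout; hypothetical).
    """
    p = mean(samples)
    entropy = 0.0
    for q in (p, 1.0 - p):
        if q > 0.0:
            entropy -= q * math.log(q)
    return p, entropy


def classify(samples: Sequence[float], threshold: float) -> Optional[int]:
    """Predict 1 (positive) or 0 (negative), or None to abstain.

    The model abstains when the averaged class probability is below
    `threshold` (a hypothetical confidence cut-off in [0.5, 1]).
    """
    p, _ = predictive_summary(samples)
    confidence = max(p, 1.0 - p)
    if confidence < threshold:
        return None
    return 1 if p >= 0.5 else 0


def coverage_and_accuracy(
    dataset: List[Tuple[Sequence[float], int]], threshold: float
) -> Tuple[float, float]:
    """Fraction of examples kept, and accuracy on the kept examples."""
    kept = correct = 0
    for samples, label in dataset:
        pred = classify(samples, threshold)
        if pred is None:
            continue
        kept += 1
        correct += int(pred == label)
    coverage = kept / len(dataset) if dataset else 0.0
    accuracy = correct / kept if kept else float("nan")
    return coverage, accuracy


# Made-up toy data for illustration: (per-pass probabilities, true label).
toy = [
    ([0.9, 0.95, 0.85], 1),
    ([0.55, 0.4, 0.6], 0),
    ([0.1, 0.2, 0.05], 0),
    ([0.7, 0.6, 0.65], 0),
]

if __name__ == "__main__":
    for t in (0.5, 0.6, 0.8):
        cov, acc = coverage_and_accuracy(toy, t)
        print(f"threshold={t:.1f} coverage={cov:.2f} accuracy={acc:.2f}")
```

Raising `threshold` makes the classifier abstain more often (lower coverage) in exchange for predictions it is more confident about. Where to set it would depend on the application; a clinical setting, for instance, might accept lower coverage for higher certainty.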