Rosario Messana

Mathematical Programming Algorithms for Convex Hull Approximation with a Hyperplane Budget

Jul 26, 2024

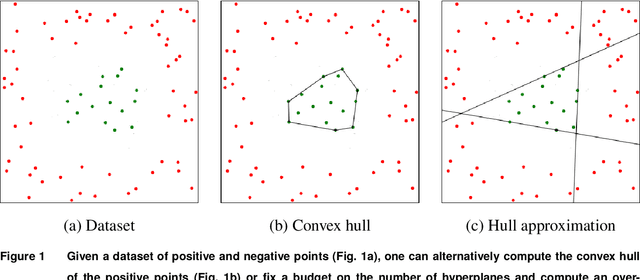

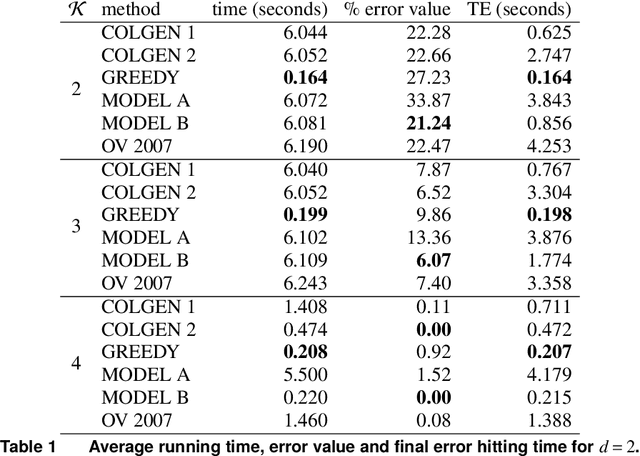

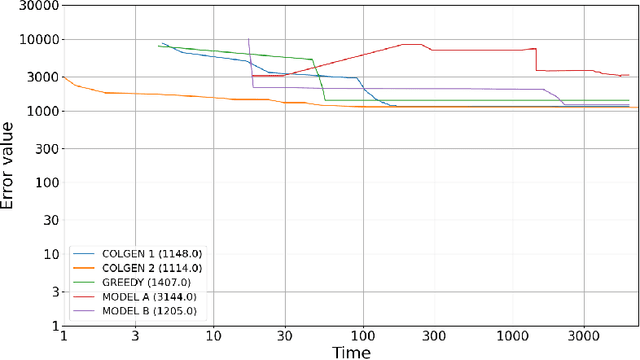

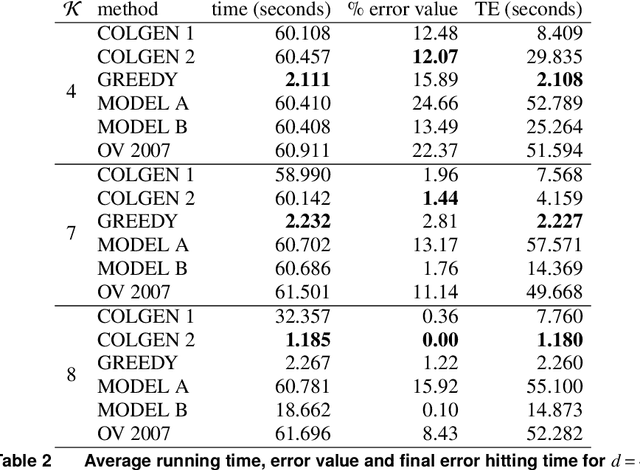

Abstract: We consider the following problem in computational geometry: given, in the d-dimensional real space, a set of points marked as positive and a set of points marked as negative, such that the convex hull of the positive set does not intersect the negative set, find K hyperplanes that separate, if possible, all the positive points from the negative ones. That is, we search for a convex polyhedron with at most K faces, containing all the positive points and no negative point. The problem is known in the literature for pure convex polyhedral approximation; our interest stems from its possible applications in constraint learning, where points are feasible or infeasible solutions of a Mixed Integer Program, and the K hyperplanes are linear constraints to be found. We cast the problem as an optimization problem, minimizing the number of negative points inside the convex polyhedron whenever exact separation cannot be achieved. We introduce models inspired by support vector machines and we design two mathematical programming formulations with binary variables. We exploit Dantzig-Wolfe decomposition to obtain extended formulations, and we devise column generation algorithms with ad-hoc pricing routines. We compare the computing time and separation error obtained by all our approaches on synthetic datasets, with the number of points ranging from hundreds to a few thousand, showing that our approaches perform better than existing ones from the literature. Furthermore, we observe that key computational differences arise depending on whether or not the budget K is sufficient to completely separate the positive points from the negative ones. On instances with 8 or more dimensions, existing convex hull algorithms become computationally inapplicable, while our algorithms make it possible to identify good convex hull approximations within minutes of computation.
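To make the objective in the abstract concrete, here is a minimal sketch of how the separation error of a candidate polyhedron could be evaluated. It is not the authors' formulation or code: the representation of each hyperplane as a pair `(w, b)` meaning `w . x <= b`, the tolerance `tol`, and the names `inside` and `separation_error` are all assumptions made for illustration.

```python
from typing import Sequence, Tuple

Point = Sequence[float]
# Assumed representation: a half-space (w, b) stands for the constraint w . x <= b.
HalfSpace = Tuple[Sequence[float], float]


def inside(point: Point, halfspaces: Sequence[HalfSpace], tol: float = 1e-9) -> bool:
    """Return True if the point satisfies every half-space constraint."""
    return all(
        sum(wi * xi for wi, xi in zip(w, point)) <= b + tol
        for w, b in halfspaces
    )


def separation_error(
    positives: Sequence[Point],
    negatives: Sequence[Point],
    halfspaces: Sequence[HalfSpace],
) -> Tuple[int, int]:
    """Return (positive points left outside, negative points left inside)."""
    missed = sum(1 for p in positives if not inside(p, halfspaces))
    errors = sum(1 for n in negatives if inside(n, halfspaces))
    return missed, errors


# Toy 2-dimensional instance (illustrative data, not from the paper).
positives = [(0.2, 0.2), (0.8, 0.2), (0.5, 0.8)]
negatives = [(1.5, 0.5), (-0.5, 0.5), (0.5, 1.5), (0.5, -0.5)]

# Unit square: x <= 1, -x <= 0, y <= 1, -y <= 0.
square = [((1, 0), 1), ((-1, 0), 0), ((0, 1), 1), ((0, -1), 0)]

full_budget = separation_error(positives, negatives, square)       # K = 4
small_budget = separation_error(positives, negatives, square[:2])  # K = 2
```

With all four hyperplanes every negative point is cut off, while keeping only the first two leaves two negative points inside the polyhedron. This is the quantity that is minimized when the budget K is too small for exact separation.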

Actively Learning Combinatorial Optimization Using a Membership Oracle

May 23, 2024

Abstract: We consider solving a combinatorial optimization problem with an unknown linear constraint using a membership oracle that, given a solution, determines whether it is feasible or infeasible with absolute certainty. The goal of the decision maker is to find the best possible solution subject to a budget on the number of oracle calls. Inspired by active learning based on Support Vector Machines (SVMs), we adapt a classical framework in order to solve the problem by learning and exploiting a surrogate linear constraint. The resulting framework consists of training a linear separator on the labeled points and selecting new points to be labeled, which is achieved by applying a sampling strategy and solving a 0-1 integer linear program. Following the active learning literature, one can use an SVM as the linear classifier together with the information-based sampling strategy known as Simple margin. We improve on both sides: we propose an alternative sampling strategy based on mixed-integer quadratic programming and a linear separation method inspired by an algorithm for convex optimization in the oracle model. We conduct experiments on the pure knapsack problem and on a college study plan problem from the literature to show how different linear separation methods and sampling strategies influence the quality of the results in terms of objective value.
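The train-then-sample loop described in the abstract can be sketched on a tiny knapsack instance as follows. This is only an illustration under several assumptions, not the authors' method: a plain perceptron stands in for the SVM, candidate points are enumerated from a small pool instead of being obtained by solving a 0-1 integer linear program, and all names (`train_perceptron`, `simple_margin_pick`, `active_learn`) and the instance data are hypothetical.

```python
from itertools import product


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def train_perceptron(labeled, dim, epochs=100):
    """Fit (w, b) so that feasible points tend to score w . x + b > 0.

    Stand-in for an SVM: it finds some separator, not a max-margin one.
    """
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        updated = False
        for x, y in labeled:  # y is +1 (feasible) or -1 (infeasible)
            if y * (dot(w, x) + b) <= 0:
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                updated = True
        if not updated:
            break
    return w, b


def simple_margin_pick(candidates, w, b):
    """Simple margin: choose the unlabeled point closest to the separator."""
    return min(candidates, key=lambda x: abs(dot(w, x) + b))


def active_learn(values, oracle, budget):
    """Spend at most `budget` oracle calls; return (best feasible point, calls)."""
    dim = len(values)
    pool = list(product((0, 1), repeat=dim))  # brute-force pool, tiny cases only
    labeled, best = [], None
    w, b = [0.0] * dim, 0.0
    for _ in range(budget):
        if not pool:
            break
        x = simple_margin_pick(pool, w, b)
        pool.remove(x)
        feasible = oracle(x)  # one membership-oracle call
        labeled.append((x, 1 if feasible else -1))
        if feasible and (best is None or dot(values, x) > dot(values, best)):
            best = x
        w, b = train_perceptron(labeled, dim)
    return best, len(labeled)


# Hypothetical instance: the weights and capacity are hidden behind the oracle.
hidden_weights, hidden_capacity = (3, 4, 5, 6), 9
values = (4, 5, 6, 7)


def oracle(x):
    return dot(hidden_weights, x) <= hidden_capacity


best, calls = active_learn(values, oracle, budget=8)
```

Note that pure Simple margin sampling ignores the objective function. The abstract's framework pairs the sampling strategy with an optimization step, which this sketch deliberately omits to stay short.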
