Ronja Strömsdörfer

Endo-Sim2Real: Consistency learning-based domain adaptation for instrument segmentation

Jul 22, 2020

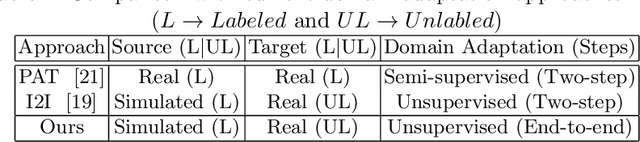

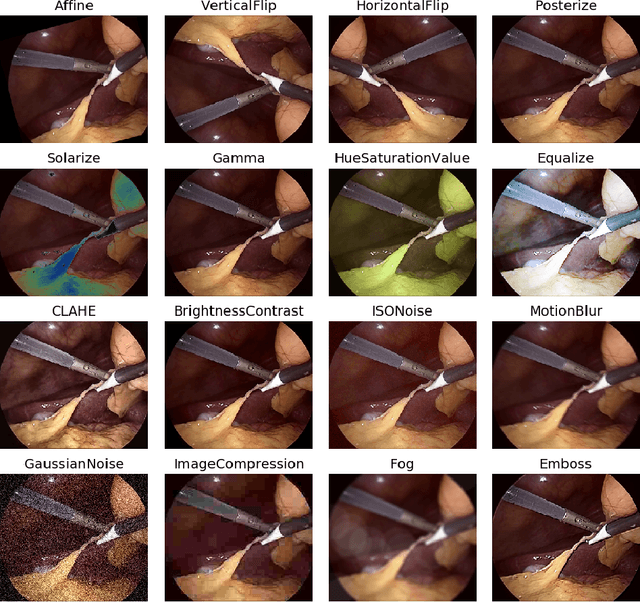

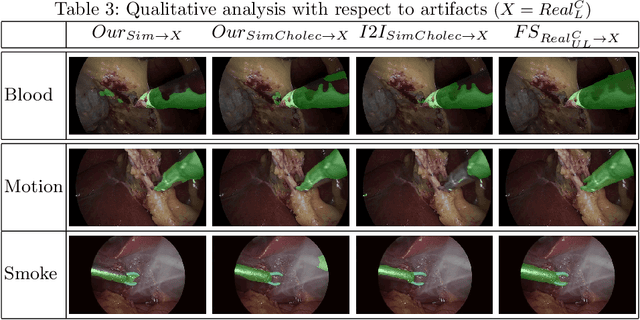

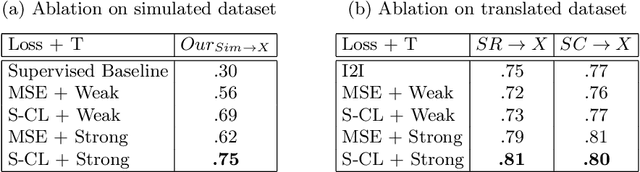

Abstract: Surgical tool segmentation in endoscopic videos is an important component of computer-assisted intervention systems. The recent success of image-based solutions using fully supervised deep learning approaches can be attributed to the collection of large labeled datasets. However, annotating a large dataset of real videos can be prohibitively expensive and time-consuming. Computer simulations could alleviate the manual labeling problem; however, models trained on simulated data do not generalize to real data. This work proposes a consistency-based framework for joint learning on simulated and real (unlabeled) endoscopic data to bridge this generalization gap. Empirical results on two datasets (15 videos of the Cholec80 and EndoVis'15 dataset) highlight the effectiveness of the proposed *Endo-Sim2Real* method for instrument segmentation. We compare the segmentation of the proposed approach with state-of-the-art solutions and show that our method improves segmentation both qualitatively and quantitatively.
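The joint-learning idea in the abstract can be illustrated with a minimal sketch. The abstract does not specify the loss functions, perturbations, or network, so everything below is an assumption made for illustration: a binary cross-entropy term on labeled simulated images, a mean-squared consistency term between predictions on a real unlabeled image and a perturbed copy of it, and hypothetical names (`joint_loss`, `toy_model`, `add_noise`, `weight`). It is not the authors' implementation.

```python
import numpy as np


def sigmoid(x):
    """Map raw scores to per-pixel foreground probabilities."""
    return 1.0 / (1.0 + np.exp(-x))


def bce(prob, target, eps=1e-7):
    """Binary cross-entropy averaged over all pixels."""
    prob = np.clip(prob, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(prob) + (1 - target) * np.log(1 - prob)))


def joint_loss(model, sim_img, sim_mask, real_img, perturb, weight=1.0):
    """Supervised loss on simulated data plus consistency loss on real data.

    Assumed form (not taken from the paper): BCE for the supervised term,
    mean squared difference between the two predictions for the
    consistency term, combined with a scalar weight.
    """
    # Supervised term: simulated images come with ground-truth masks.
    sup = bce(sigmoid(model(sim_img)), sim_mask)
    # Consistency term: real images have no labels, so the model is only
    # asked to predict the same mask for an image and its perturbed copy.
    p_clean = sigmoid(model(real_img))
    p_pert = sigmoid(model(perturb(real_img)))
    cons = float(np.mean((p_clean - p_pert) ** 2))
    return sup + weight * cons, sup, cons


# Toy stand-ins (hypothetical): a per-pixel "model" and a noise perturbation.
rng = np.random.default_rng(0)


def toy_model(img):
    return 4.0 * (img - 0.5)


def add_noise(img):
    return img + rng.normal(0.0, 0.05, img.shape)


sim_img = rng.random((8, 8))
sim_mask = (sim_img > 0.5).astype(float)
real_img = rng.random((8, 8))

total, sup, cons = joint_loss(toy_model, sim_img, sim_mask, real_img, add_noise, weight=0.5)
print(f"supervised={sup:.4f} consistency={cons:.4f} total={total:.4f}")
```

In this sketch the consistency term needs no annotations for the real images, which is what lets unlabeled real data take part in training alongside the labeled simulated data.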
