Rongsong Li

Multi-V2X: A Large Scale Multi-modal Multi-penetration-rate Dataset for Cooperative Perception

Sep 08, 2024

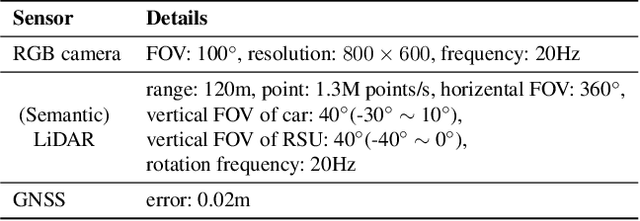

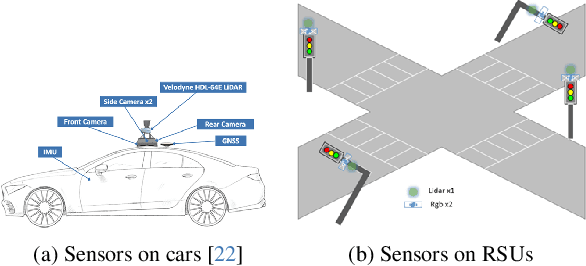

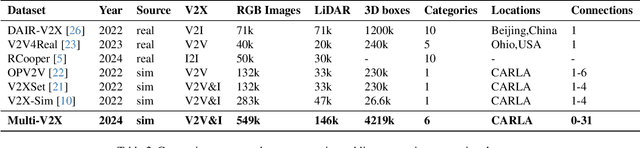

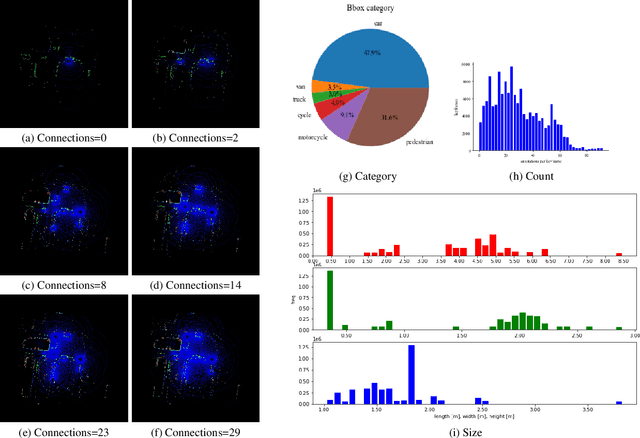

Abstract: Cooperative perception through vehicle-to-everything (V2X) has garnered significant attention in recent years due to its potential to overcome occlusions and enhance long-distance perception. Great achievements have been made in both datasets and algorithms. However, existing real-world datasets are limited by the presence of few communicable agents, while synthetic datasets typically cover only vehicles. More importantly, the penetration rate of connected and autonomous vehicles (CAVs), a critical factor for the deployment of cooperative perception technologies, has not been adequately addressed. To tackle these issues, we introduce Multi-V2X, a large-scale, multi-modal, multi-penetration-rate dataset for V2X perception. By co-simulating SUMO and CARLA, we equip a substantial number of cars and roadside units (RSUs) in simulated towns with sensor suites, and collect comprehensive sensing data. Datasets with specified CAV penetration rates can be obtained by masking some equipped cars as normal vehicles. In total, our Multi-V2X dataset comprises 549k RGB frames, 146k LiDAR frames, and 4,219k annotated 3D bounding boxes across six categories. The highest possible CAV penetration rate reaches 86.21%, with up to 31 agents in communication range, posing new challenges in selecting agents to collaborate with. We provide comprehensive benchmarks for cooperative 3D object detection tasks. Our data and code are available at https://github.com/RadetzkyLi/Multi-V2X.
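The masking idea in the abstract can be illustrated with a minimal sketch. It assumes the penetration rate is defined as the number of CAVs divided by the number of all vehicles in a scene; the function name `mask_to_penetration_rate`, its parameters, and the random selection policy are hypothetical and are not taken from the Multi-V2X code base.

```python
import math
import random


def mask_to_penetration_rate(equipped_ids, num_vehicles, target_rate, seed=0):
    """Split sensor-equipped cars into CAVs and cars masked as normal vehicles.

    Assumption (not from the paper's code): penetration rate is
    len(CAVs) / num_vehicles, and masked cars are chosen uniformly at random.
    """
    equipped = sorted(set(equipped_ids))
    if num_vehicles <= 0 or len(equipped) > num_vehicles:
        raise ValueError("num_vehicles must be positive and cover all equipped cars")
    max_rate = len(equipped) / num_vehicles  # highest rate this scene can reach
    if not 0.0 <= target_rate <= max_rate:
        raise ValueError(f"target_rate must lie in [0, {max_rate:.4f}]")

    # Round down so the realized rate never exceeds the requested one.
    num_cavs = math.floor(target_rate * num_vehicles + 1e-9)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    cavs = set(rng.sample(equipped, num_cavs))
    masked = set(equipped) - cavs  # still in the scene, but treated as normal vehicles
    return cavs, masked


# Illustrative numbers only: 25 equipped cars among 29 vehicles, 50% target.
cavs, masked = mask_to_penetration_rate(range(25), num_vehicles=29, target_rate=0.5)
print(len(cavs), len(masked), round(len(cavs) / 29, 4))
```

Masked cars remain in the scene as perception targets; under this sketch only their sensor data and communication would be withheld.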

Unify Change Point Detection and Segment Classification in a Regression Task for Transportation Mode Identification

Dec 08, 2023

Abstract: Identifying travelers' transportation modes is important in transportation science and location-based services. With the popularity of GPS-enabled devices such as smartphones, it is appealing for researchers to leverage GPS trajectory data to infer transportation modes. Existing studies frame this problem as a classification task. The dominant two-stage studies first divide the trip into single-mode segments and then categorize these segments. The over-segmentation strategy and inevitable error propagation make the classification stage difficult and the whole system hard to optimize. Recent one-stage works avoid these issues by discarding trajectory segmentation entirely and conducting point-wise classification directly on the trip, but this leaves the predictions discontinuous. To solve the above-mentioned problems, inspired by YOLO and SSD in object detection, we propose to reframe change point detection and segment classification as a unified regression task instead of the existing classification task. We directly regress the coordinates of change points and classify the associated segments. In this way, our method divides the trip into segments in a supervised manner and leverages more contextual information, obtaining predictions with high accuracy and continuity. Two frameworks, TrajYOLO and TrajSSD, are proposed to solve the regression task, and various feature extraction backbones are exploited. Exhaustive experiments on the GeoLife dataset show that the proposed method achieves a competitive overall identification accuracy of 0.853 when distinguishing five modes: walk, bike, bus, car, and train. As for change point detection, our method increases precision at the cost of a drop in recall. All code is available at https://github.com/RadetzkyLi/TrajYOLO-SSD.
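To show why regressing change points yields continuous predictions, the following minimal sketch expands a set of predicted change points and per-segment modes into point-wise labels. It assumes change points are expressed as normalized positions in [0, 1] along the trip; the function `decode_point_labels` and this output encoding are hypothetical and do not reproduce the TrajYOLO or TrajSSD decoding step.

```python
MODES = ["walk", "bike", "bus", "car", "train"]  # the five modes named in the abstract


def decode_point_labels(num_points, change_points, segment_modes):
    """Turn regressed change points plus segment classes into per-point labels.

    Assumption (illustrative only): change_points are normalized positions in
    [0, 1], given in increasing order, and segment_modes has one more entry
    than change_points.
    """
    if len(segment_modes) != len(change_points) + 1:
        raise ValueError("need exactly one mode per segment")
    if list(change_points) != sorted(change_points):
        raise ValueError("change points must be in increasing order")
    if any(mode not in MODES for mode in segment_modes):
        raise ValueError("unknown transportation mode")

    # Map normalized positions to point indices, clipped to the trip length.
    cuts = [min(max(round(c * num_points), 0), num_points) for c in change_points]
    bounds = [0] + cuts + [num_points]

    labels = []
    for mode, start, end in zip(segment_modes, bounds[:-1], bounds[1:]):
        # Every point between two change points shares one label, so the
        # output cannot flip mode from point to point inside a segment.
        labels.extend([mode] * (end - start))
    return labels


labels = decode_point_labels(10, [0.3, 0.7], ["walk", "bus", "walk"])
print(labels)
```

By construction, a trip with k predicted change points contains at most k mode switches, in contrast to point-wise classification, where each point may receive a different label.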
