Romain Zimmer

Encrypted Internet traffic classification using a supervised Spiking Neural Network

Jan 24, 2021

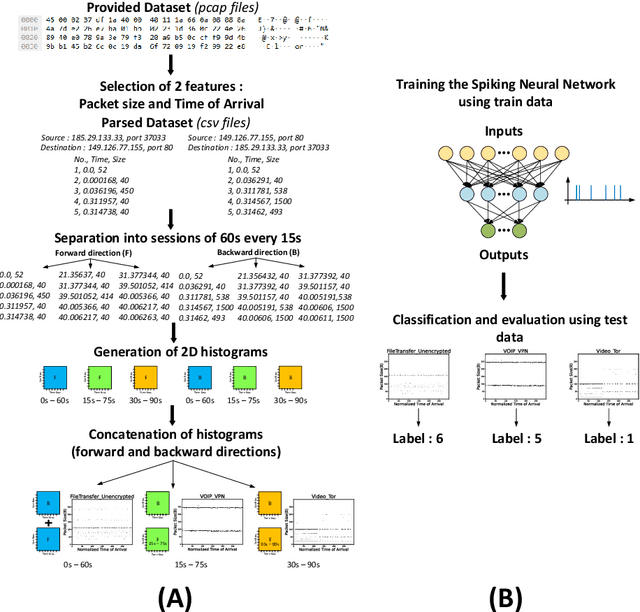

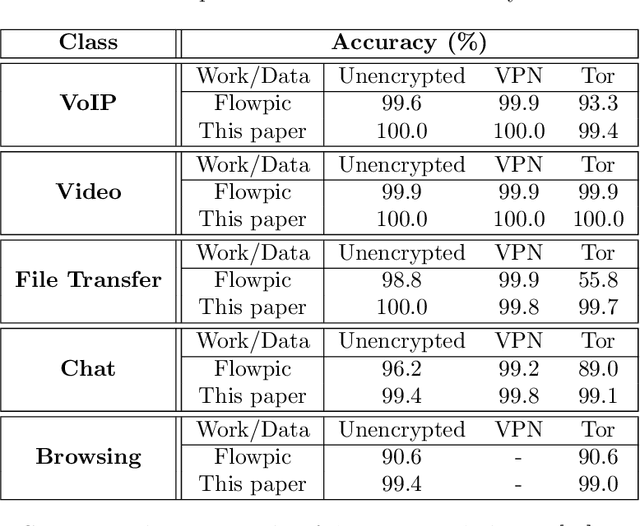

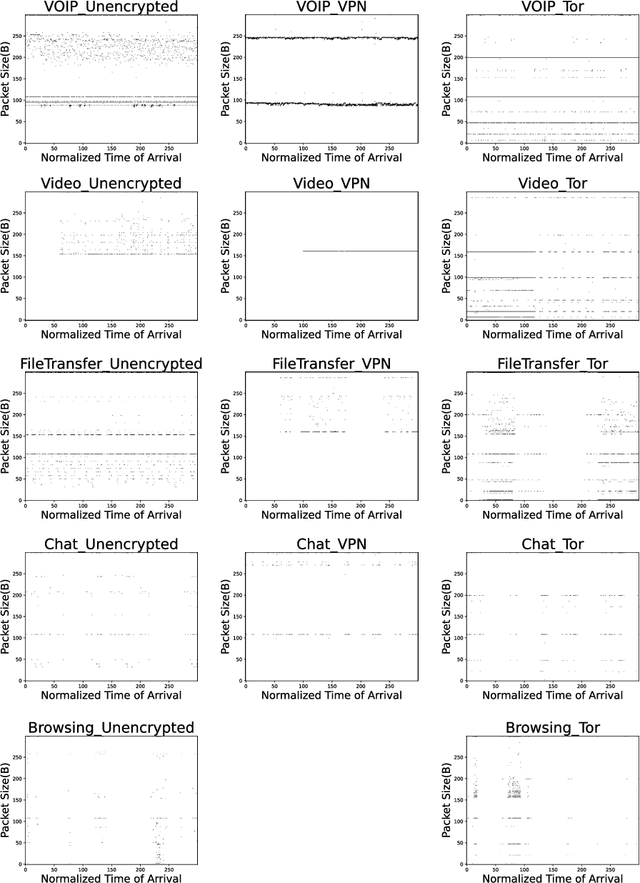

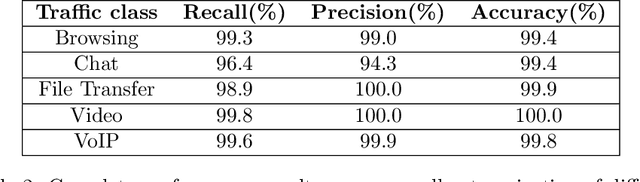

Abstract: Internet traffic recognition is an essential tool for access providers, since recognizing the traffic categories of the data packets transmitted on a network helps them define adapted priorities. That means, for instance, high priority for an audio conference and low priority for a file transfer, to enhance user experience. As internet traffic becomes increasingly encrypted, the mainstream classic traffic recognition technique, payload inspection, is rendered ineffective. This paper uses machine learning techniques for encrypted traffic classification, looking only at packet size and time of arrival. Spiking neural networks (SNNs), largely inspired by how biological neurons operate, were used for two reasons. Firstly, they are able to recognize time-related data packet features. Secondly, they can be implemented efficiently on neuromorphic hardware with a low energy footprint. Here we used a very simple feedforward SNN, with only one fully-connected hidden layer, trained in a supervised manner using the newly introduced method known as Surrogate Gradient Learning. Surprisingly, such a simple SNN reached an accuracy of 95.9% on ISCX datasets, outperforming previous approaches. Besides better accuracy, there is also a very significant improvement in simplicity: input size, number of neurons, and number of trainable parameters are all reduced by one to four orders of magnitude. Next, we analyzed the reasons for this good accuracy. It turns out that, beyond spatial (i.e. packet size) features, the SNN also exploits temporal ones, mostly the nearly synchronous (within a 200 ms range) arrival times of packets with certain sizes. Taken together, these results show that SNNs are an excellent fit for encrypted internet traffic classification: they can be more accurate than conventional artificial neural networks (ANNs), and they could be implemented efficiently on low-power embedded systems.
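To make the "packet size and time of arrival" input concrete, here is a minimal sketch of how a flow could be turned into a binary spike raster. The abstract does not describe the paper's actual encoding, so the function name `encode_flow`, the bin counts, the 1-second window, and the 1500-byte maximum size are all assumptions chosen for illustration.

```python
def encode_flow(packets, n_size_bins=16, n_time_bins=20, window_s=1.0, max_size=1500):
    """Turn (arrival_time_s, size_bytes) pairs into a binary spike raster.

    raster[s][t] == 1 means at least one packet whose size falls in bin s
    arrived during time bin t. All bin parameters are illustrative assumptions.
    """
    raster = [[0] * n_time_bins for _ in range(n_size_bins)]
    for arrival, size in packets:
        if not 0.0 <= arrival < window_s:
            continue  # ignore packets outside the observation window
        t = int(arrival / window_s * n_time_bins)
        # Clamp so that a packet of exactly max_size lands in the last bin.
        s = min(int(size / max_size * n_size_bins), n_size_bins - 1)
        raster[s][t] = 1
    return raster


# Hypothetical flow: (arrival time in seconds, packet size in bytes).
flow = [(0.00, 60), (0.01, 1500), (0.48, 1500), (0.92, 700)]
raster = encode_flow(flow)
```

In such a raster, each row can drive one input neuron, so two packets of different sizes arriving close together show up as spikes in the same column, which is the kind of near-synchrony the abstract reports the network exploiting.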

Low-activity supervised convolutional spiking neural networks applied to speech commands recognition

Nov 13, 2020

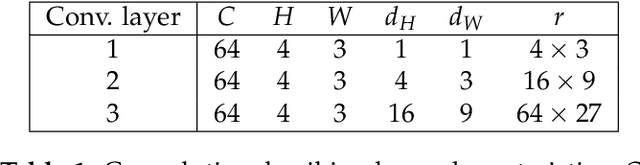

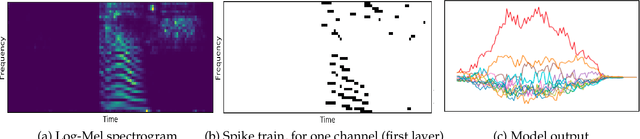

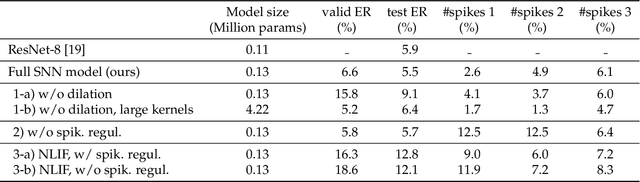

Abstract: Deep Neural Networks (DNNs) are the current state-of-the-art models in many speech-related tasks. There is a growing interest, though, in more biologically realistic, hardware-friendly and energy-efficient models, named Spiking Neural Networks (SNNs). Recently, it has been shown that SNNs can be trained efficiently, in a supervised manner, using backpropagation with a surrogate gradient trick. In this work, we report speech command (SC) recognition experiments using supervised SNNs. We explored the Leaky Integrate-and-Fire (LIF) neuron model for this task, and show that a model comprised of stacked dilated convolution spiking layers can reach an error rate very close to that of standard DNNs on the Google SC v1 dataset: 5.5%, while keeping a very sparse spiking activity, below 5%, thanks to a new regularization term. We also show that modeling the leakage of the neuron membrane potential is useful, since the LIF model significantly outperformed its non-leaky counterpart.
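The surrogate gradient trick mentioned above can be sketched as follows: the forward pass keeps the non-differentiable step function, while the backward pass substitutes a smooth derivative. The abstract does not say which surrogate the authors used, so the sigmoid derivative below, the names `spike` and `surrogate_grad`, and the `scale` value are assumptions.

```python
import math


def spike(u, threshold=1.0):
    """Forward pass: Heaviside step applied to the membrane potential u."""
    return 1.0 if u > threshold else 0.0


def surrogate_grad(u, threshold=1.0, scale=5.0):
    """Backward-pass stand-in for d(spike)/du.

    Uses the derivative of sigmoid(scale * (u - threshold)), one common
    choice of surrogate (assumed here, not taken from the paper).
    """
    s = 1.0 / (1.0 + math.exp(-scale * (u - threshold)))
    return scale * s * (1.0 - s)
```

The true derivative of the step is zero almost everywhere, which would block learning. The surrogate is largest at the threshold and decays on either side, so neurons whose potential is close to firing receive the strongest weight updates.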

Technical report: supervised training of convolutional spiking neural networks with PyTorch

Nov 22, 2019

Abstract: Recently, it has been shown that spiking neural networks (SNNs) can be trained efficiently, in a supervised manner, using backpropagation through time. Indeed, the most commonly used spiking neuron model, the leaky integrate-and-fire neuron, obeys a differential equation which can be approximated using discrete time steps, leading to a recurrence relation for the potential. The firing threshold causes optimization issues, but they can be overcome using a surrogate gradient. Here, we extend previous approaches in two ways. Firstly, we show that the approach can be used to train convolutional layers. Convolutions can be done in space, time (which simulates conduction delays), or both. Secondly, we include fast horizontal connections à la Denève: when a neuron N fires, we subtract from the potentials of all the neurons with the same receptive field the dot product between their weight vectors and that of neuron N. As Denève et al. showed, this is useful to represent a dynamic multidimensional analog signal in a population of spiking neurons. Here we demonstrate that, in addition, such connections also allow implementing a multidimensional send-on-delta coding scheme. We validate our approach on one speech classification benchmark: the Google speech command dataset. We managed to reach nearly state-of-the-art accuracy (94%) while maintaining low firing rates (about 5 Hz). Our code is based on PyTorch and is available in open source at http://github.com/romainzimmer/s2net
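As an illustration of the two mechanisms described in this abstract, the sketch below simulates a discrete-time leaky integrate-and-fire neuron and applies the horizontal-connection update. It is plain Python rather than the authors' PyTorch code. The names `lif_run` and `lateral_update`, the decay factor `beta`, the subtractive reset, and the inclusion of the firing neuron in the lateral update are assumptions, not details confirmed by the abstract.

```python
def lif_run(inputs, beta=0.9, threshold=1.0):
    """Simulate one LIF neuron with the recurrence u[t] = beta * u[t-1] + x[t].

    A spike is emitted when u[t] > threshold; the threshold is then
    subtracted from the potential (a soft reset, assumed here).
    """
    u, spikes, potentials = 0.0, [], []
    for x in inputs:
        u = beta * u + x          # leak, then integrate the input
        fired = u > threshold
        spikes.append(1 if fired else 0)
        potentials.append(u)      # potential before any reset
        if fired:
            u -= threshold
    return spikes, potentials


def lateral_update(potentials, weights, fired_idx):
    """When neuron N = fired_idx fires, subtract w_i . w_N from each potential."""
    w_n = weights[fired_idx]
    return [u - sum(a * b for a, b in zip(w, w_n))
            for u, w in zip(potentials, weights)]


spikes, trace = lif_run([0.6, 0.6, 0.0, 0.0])
updated = lateral_update([1.5, 0.2, 0.9], [[1, 0], [0, 1], [1, 1]], fired_idx=0)
```

Setting `beta=1.0` gives the non-leaky integrator. In `lateral_update`, a neuron whose weight vector is orthogonal to that of the firing neuron is unaffected, while the firing neuron's own potential drops by the squared norm of its weight vector, which acts as its reset in this sketch.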
