Rohit M. A.

Automatic Stroke Classification of Tabla Accompaniment in Hindustani Vocal Concert Audio

Apr 19, 2021

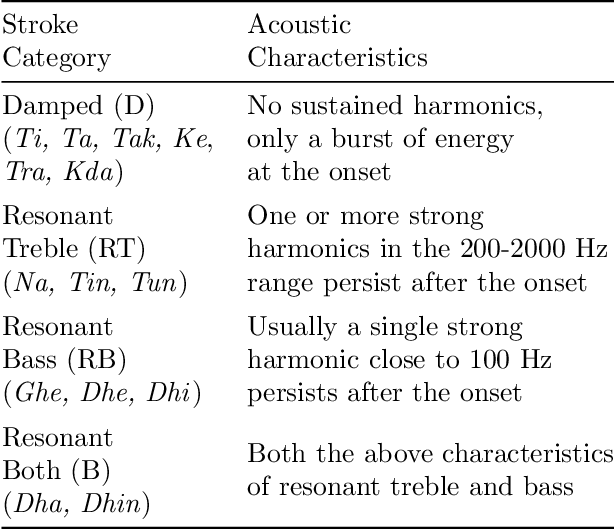

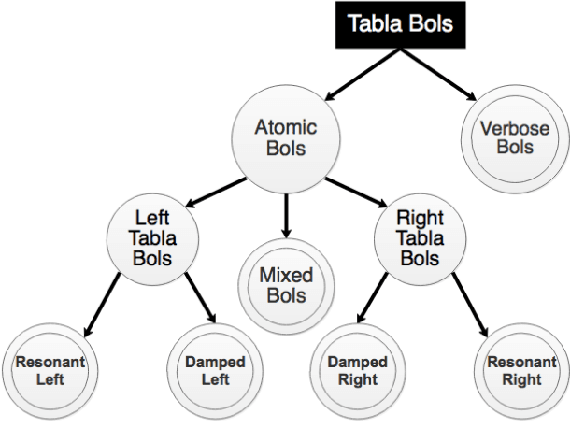

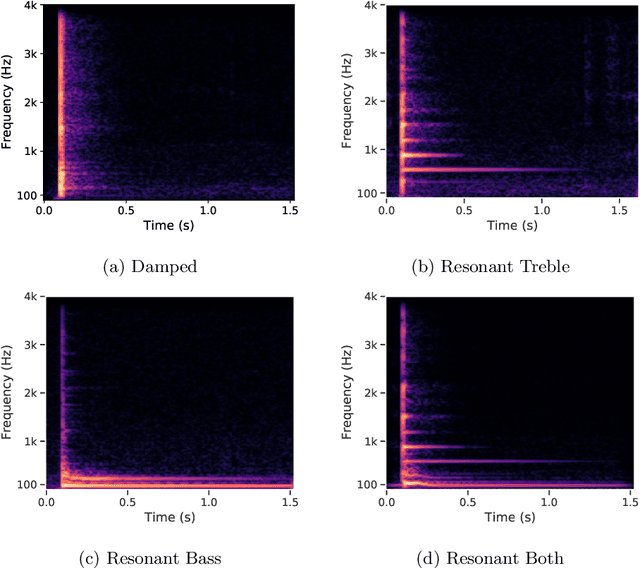

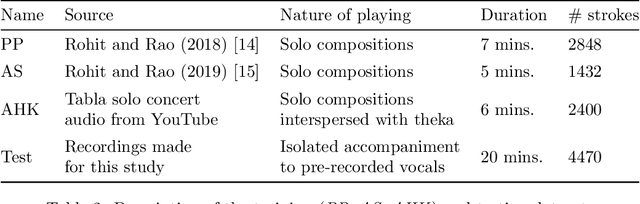

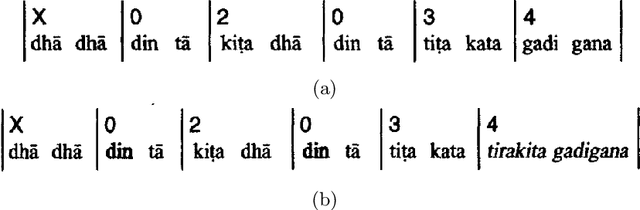

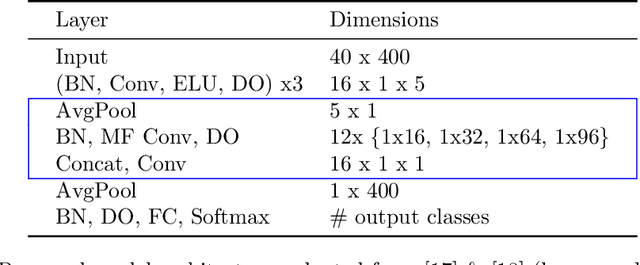

Abstract: The tabla is a unique percussion instrument due to the combined harmonic and percussive nature of its timbre, and the contrasting harmonic frequency ranges of its two drums. This allows a tabla player to uniquely emphasize parts of the rhythmic cycle (theka) in order to mark the salient positions. An analysis of the loudness dynamics and timing deviations at various cycle positions is an important part of musicological studies on the expressivity in tabla accompaniment. To achieve this at a corpus level, and not restrict it to the few recordings that manual annotation can afford, it is helpful to have access to an automatic tabla transcription system. Although a few systems have been built by training models on labeled tabla strokes, the achieved accuracy does not necessarily carry over to unseen instruments. In this article, we report our work towards building an instrument-independent stroke classification system for accompaniment tabla based on the more easily available tabla solo audio tracks. We present acoustic features that capture the distinctive characteristics of tabla strokes and build an automatic system to predict the label as one of a reduced, but musicologically motivated, target set of four stroke categories. To address the lack of sufficient labeled training data, we turn to common data augmentation methods and find the use of pitch-shifting based augmentation to be most promising. We then analyse the important features and highlight the problem of their instrument-dependence while motivating the use of more task-specific data augmentation strategies to improve the diversity of training data.
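As a rough illustration of the pitch-shifting augmentation mentioned in the abstract, the sketch below shifts a stroke's pitch by resampling with linear interpolation. This is not the authors' method: the function names, the semitone values, and the choice of plain resampling are all assumptions made for illustration. Note that resampling also changes the duration of the signal, whereas the pitch-shifting tools typically used for augmentation preserve it.

```python
import math


def pitch_shift_by_resampling(samples, semitones):
    """Shift pitch by reading the input at a scaled rate (linear interpolation).

    Hypothetical helper for illustration only. Unlike a duration-preserving
    pitch shifter, this shortens the signal when shifting up and lengthens
    it when shifting down.
    """
    if not samples:
        return []
    ratio = 2.0 ** (semitones / 12.0)  # frequency scaling factor
    n_in = len(samples)
    n_out = max(1, int(round(n_in / ratio)))
    shifted = []
    for i in range(n_out):
        pos = i * ratio                      # fractional read position in the input
        lo = min(int(pos), n_in - 1)         # sample at or before the read position
        hi = min(lo + 1, n_in - 1)           # next sample, clamped at the end
        frac = pos - lo
        shifted.append((1.0 - frac) * samples[lo] + frac * samples[hi])
    return shifted


def augment_with_pitch_shifts(samples, shifts=(-2, -1, 1, 2)):
    """Return one shifted copy per semitone offset (offsets are assumed values)."""
    return {s: pitch_shift_by_resampling(samples, s) for s in shifts}


# Example: a 100 Hz sine sampled at 8 kHz stands in for a labeled stroke.
sample_rate = 8000
stroke = [math.sin(2 * math.pi * 100 * n / sample_rate) for n in range(800)]
augmented = augment_with_pitch_shifts(stroke)
octave_up = pitch_shift_by_resampling(stroke, 12)
```

Each augmented copy keeps the label of the original stroke, so the training set gains examples that resemble differently tuned drums without additional annotation.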

Structure and Automatic Segmentation of Dhrupad Vocal Bandish Audio

Aug 03, 2020

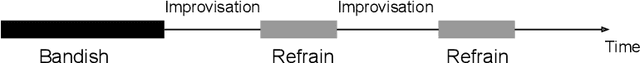

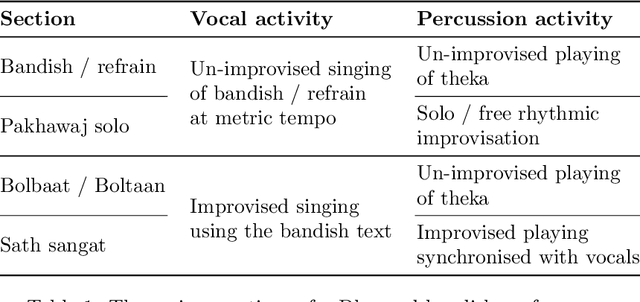

Abstract: A Dhrupad vocal concert comprises a composition section that is interspersed with improvised episodes of increased rhythmic activity involving the interaction between the vocals and the percussion. Tracking the changing rhythmic density, in relation to the underlying metric tempo of the piece, thus facilitates the detection and labeling of the improvised sections in the concert structure. This work concerns the automatic detection of the musically relevant rhythmic densities as they change in time across the bandish (composition) performance. An annotated dataset of Dhrupad bandish concert sections is presented. We investigate a CNN-based system, trained to detect local tempo relationships, and follow it with temporal smoothing. We also employ audio source separation as a pre-processing step to the detection of the individual surface densities of the vocals and the percussion. This helps us obtain the complete musical description of the concert sections in terms of capturing the changing rhythmic interaction of the two performers.
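The abstract does not say which temporal smoothing is applied to the frame-wise predictions. One common option, shown here purely as an assumption, is a sliding-window majority vote over the predicted labels. The function name, the window length, and the example labels (rhythmic density as a multiple of the metric tempo) are hypothetical.

```python
from collections import Counter


def majority_smooth(labels, window=5):
    """Replace each frame label by the most frequent label in a centered window.

    Hypothetical post-processing step, not taken from the paper. On a tie,
    the frame keeps its own label if that label is among the most frequent.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i, current in enumerate(labels):
        start = max(0, i - half)                 # window is truncated at the edges
        end = min(len(labels), i + half + 1)
        counts = Counter(labels[start:end])
        best = max(counts.values())
        winners = [label for label, count in counts.items() if count == best]
        smoothed.append(current if current in winners else winners[0])
    return smoothed


# Example: frame-wise density predictions with two isolated errors.
frame_predictions = [1, 1, 1, 2, 1, 1, 4, 4, 4, 4, 1, 4, 4]
section_labels = majority_smooth(frame_predictions, window=5)
```

A longer window removes more isolated errors but also blurs the boundaries between sections, so the window length would need to be chosen relative to the shortest section of interest.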
