Robin Halvfordsson

Automated Augmentation with Reinforcement Learning and GANs for Robust Identification of Traffic Signs using Front Camera Images

Nov 15, 2019

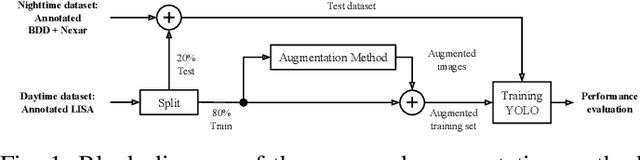

Abstract: Traffic sign identification using camera images from vehicles plays a critical role in autonomous driving and path planning. However, front camera images can be distorted by blur, lighting variations, and vandalism, which degrades detection performance. As a solution, machine learning models must be trained with data from multiple domains, but collecting and labeling more data in each new domain is time-consuming and expensive. In this work, we present an end-to-end framework that augments traffic sign training data using optimal reinforcement learning policies and a variety of Generative Adversarial Network (GAN) models; the augmented data can then be used to train traffic sign detector modules. Our automated augmenter enables learning from images transformed to nighttime, poor-lighting, and variously occluded conditions, using the LISA Traffic Sign and BDD-Nexar datasets. The proposed method enables mapping training data from one domain to another, thereby improving traffic sign detection precision/recall from 0.70/0.66 to 0.83/0.71 for nighttime images.
