Roberto Prevete

IMPACTX: Improving Model Performance by Appropriately predicting CorrecT eXplanations

Feb 17, 2025

Abstract: eXplainable Artificial Intelligence (XAI) research predominantly concentrates on providing explanations about AI model decisions, especially those of Deep Learning (DL) models. However, there is a growing interest in using XAI techniques to automatically improve the performance of the AI systems themselves. This paper proposes IMPACTX, a novel approach that leverages XAI as a fully automated attention mechanism, without requiring external knowledge or human feedback. Experimental results show that IMPACTX improves performance with respect to the standalone ML model by integrating an attention mechanism based on the outputs of an XAI method during model training. Furthermore, IMPACTX directly provides proper feature attribution maps for the model's decisions, without relying on external XAI methods during the inference process. Our proposal is evaluated using three widely recognized DL models (EfficientNet-B2, MobileNet, and LeNet-5) along with three standard image datasets: CIFAR-10, CIFAR-100, and STL-10. The results show that IMPACTX consistently improves the performance of all the inspected DL models across all evaluated datasets, and it directly provides appropriate explanations for its responses.

SincVAE: a New Approach to Improve Anomaly Detection on EEG Data Using SincNet and Variational Autoencoder

Jun 25, 2024

Abstract: Over the past few decades, electroencephalography (EEG) monitoring has become a pivotal tool for diagnosing neurological disorders, particularly for detecting seizures. Epilepsy, one of the most prevalent neurological diseases worldwide, affects approximately 1% of the population. These patients face significant risks, underscoring the need for reliable, continuous seizure monitoring in daily life. Most of the techniques discussed in the literature rely on supervised Machine Learning (ML) methods. However, the challenge of accurately labeling variations in epileptic EEG waveforms complicates the use of these approaches. Additionally, the rarity of ictal events introduces a high imbalance in the data, which could lead to poor prediction performance in supervised learning approaches. By contrast, a semi-supervised approach allows the model to be trained only on data that contain no seizures, thus avoiding the issues related to data imbalance. This work proposes a semi-supervised approach for detecting epileptic seizures from EEG data, utilizing a novel Deep Learning-based method called SincVAE. This proposal incorporates the learning of an ad-hoc array of bandpass filters as the first layer of a Variational Autoencoder (VAE), potentially eliminating the preprocessing stage where informative frequency bands are identified and isolated. Results indicate that SincVAE improves seizure detection in EEG data and is capable of identifying early seizures during the preictal stage as well as monitoring patients throughout the postictal stage.
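To make the idea of a band-pass filter described only by its two cutoff frequencies more concrete, here is a minimal sketch in plain Python. It assumes the SincNet-style parametrisation (the difference of two windowed sinc low-pass filters); the abstract does not state the exact form used in SincVAE, and the function names, the Hamming window, the 8-13 Hz band, and the 256 Hz sampling rate are all illustrative assumptions. In a learned first layer, the two cutoffs would be trainable parameters instead of fixed numbers.

```python
import math


def sinc(x: float) -> float:
    """Unnormalised sinc, sin(x)/x, with the limit value 1 at x = 0."""
    return 1.0 if x == 0.0 else math.sin(x) / x


def sinc_bandpass_kernel(f_low, f_high, kernel_size, sample_rate):
    """Hamming-windowed band-pass FIR kernel defined only by two cutoffs in Hz."""
    if kernel_size < 3 or kernel_size % 2 == 0:
        raise ValueError("kernel_size must be an odd number >= 3")
    if not 0.0 <= f_low < f_high <= sample_rate / 2:
        raise ValueError("need 0 <= f_low < f_high <= Nyquist frequency")
    f1 = f_low / sample_rate   # normalised cutoffs (cycles per sample)
    f2 = f_high / sample_rate
    half = kernel_size // 2
    kernel = []
    for i in range(kernel_size):
        n = i - half
        # Band-pass response = low-pass at f2 minus low-pass at f1.
        ideal = (2 * f2 * sinc(2 * math.pi * f2 * n)
                 - 2 * f1 * sinc(2 * math.pi * f1 * n))
        window = 0.54 - 0.46 * math.cos(2 * math.pi * i / (kernel_size - 1))
        kernel.append(ideal * window)
    return kernel


def gain_at(kernel, freq, sample_rate):
    """Magnitude of the kernel's frequency response at `freq` (Hz)."""
    half = len(kernel) // 2
    re = sum(k * math.cos(2 * math.pi * freq * (i - half) / sample_rate)
             for i, k in enumerate(kernel))
    im = sum(k * math.sin(2 * math.pi * freq * (i - half) / sample_rate)
             for i, k in enumerate(kernel))
    return math.hypot(re, im)


# Hypothetical example: an 8-13 Hz band on a signal sampled at 256 Hz.
alpha_kernel = sinc_bandpass_kernel(8.0, 13.0, kernel_size=65, sample_rate=256.0)
```

Because each filter is described by two numbers rather than by every tap, a bank of such filters has very few parameters, which is the property that makes learning the informative bands directly from data plausible.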

Towards a general framework for improving the performance of classifiers using XAI methods

Mar 15, 2024

Abstract: Modern Artificial Intelligence (AI) systems, especially Deep Learning (DL) models, pose challenges for AI researchers trying to understand their inner workings. eXplainable Artificial Intelligence (XAI) inspects the internal mechanisms of AI models, providing explanations about their decisions. While current XAI research predominantly concentrates on explaining AI systems, there is a growing interest in using XAI techniques to automatically improve the performance of AI systems themselves. This paper proposes a general framework for automatically improving the performance of pre-trained DL classifiers using XAI methods, avoiding the computational overhead associated with retraining complex models from scratch. In particular, we outline the possibility of two different learning strategies for implementing this architecture, which we will call auto-encoder-based and encoder-decoder-based, and discuss their key aspects.

Don't Push the Button! Exploring Data Leakage Risks in Machine Learning and Transfer Learning

Jan 24, 2024

Abstract: Machine Learning (ML) has revolutionized various domains, offering predictive capabilities in several areas. However, with the increasing accessibility of ML tools, many practitioners, lacking deep ML expertise, adopt a "push the button" approach, utilizing user-friendly interfaces without a thorough understanding of underlying algorithms. While this approach provides convenience, it raises concerns about the reliability of outcomes, leading to challenges such as incorrect performance evaluation. This paper addresses a critical issue in ML, known as data leakage, where unintended information contaminates the training data, impacting model performance evaluation. Users, due to a lack of understanding, may inadvertently overlook crucial steps, leading to optimistic performance estimates that may not hold in real-world scenarios. The discrepancy between evaluated and actual performance on new data is a significant concern. In particular, this paper categorizes data leakage in ML, discussing how certain conditions can propagate through the ML workflow. Furthermore, it explores the connection between data leakage and the specific task being addressed, investigates its occurrence in Transfer Learning, and compares standard inductive ML with transductive ML frameworks. The conclusion summarizes key findings, emphasizing the importance of addressing data leakage for robust and reliable ML applications.
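One common way such leakage arises is preprocessing before the train/test split. The sketch below, in plain Python, is only an illustration of that single case and is not taken from the paper: the toy data, the helper names, and the choice of standardisation as the preprocessing step are all assumptions.

```python
from statistics import mean, pstdev


def fit_standardizer(values):
    """Return (mean, std) estimated from `values`; std falls back to 1.0 if zero."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        sigma = 1.0
    return mu, sigma


def transform(values, params):
    """Apply previously fitted statistics without re-estimating them."""
    mu, sigma = params
    return [(v - mu) / sigma for v in values]


# Toy data: the last two values play the role of unseen test samples.
data = [1.0, 2.0, 3.0, 4.0, 100.0, 120.0]
train, test = data[:4], data[4:]

# Leaky: statistics are computed before the split, so the test samples
# influence the features the model is trained on.
leaky_params = fit_standardizer(data)
leaky_train = transform(train, leaky_params)

# Leak-free: statistics come from the training portion only and are then
# reused, unchanged, on the test portion.
clean_params = fit_standardizer(train)
clean_train = transform(train, clean_params)
clean_test = transform(test, clean_params)
```

In the leaky variant the training features already encode the mean and spread of the test samples, so any performance measured on those samples is no longer an estimate for genuinely new data.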

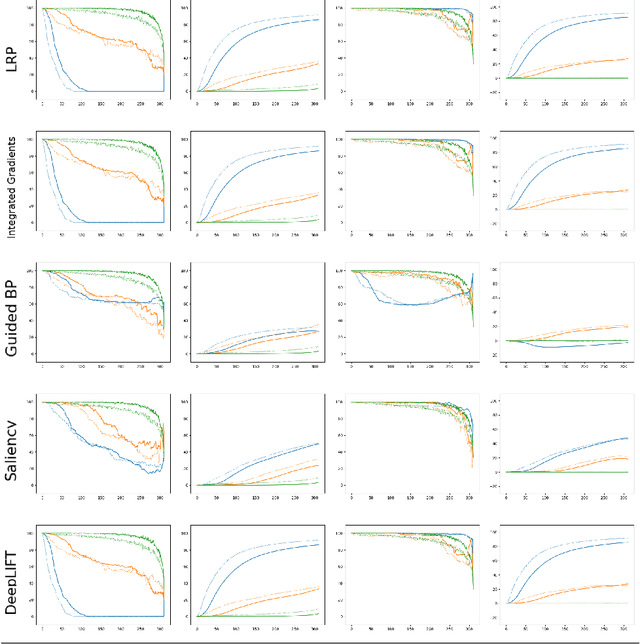

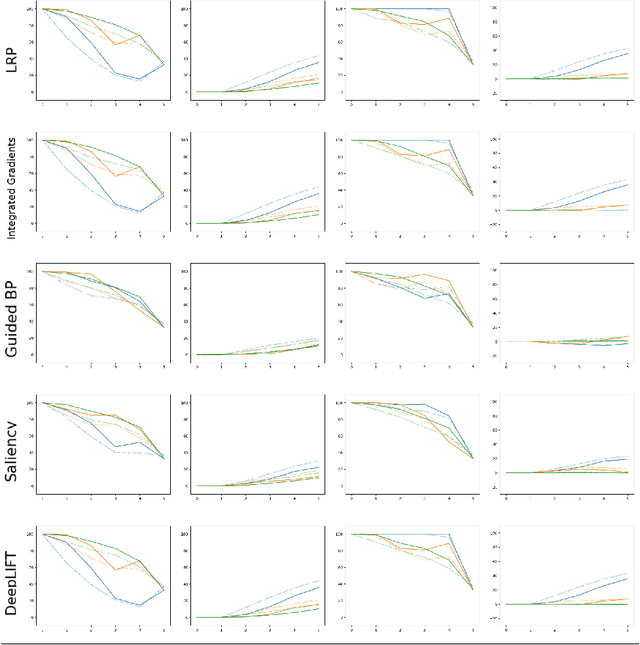

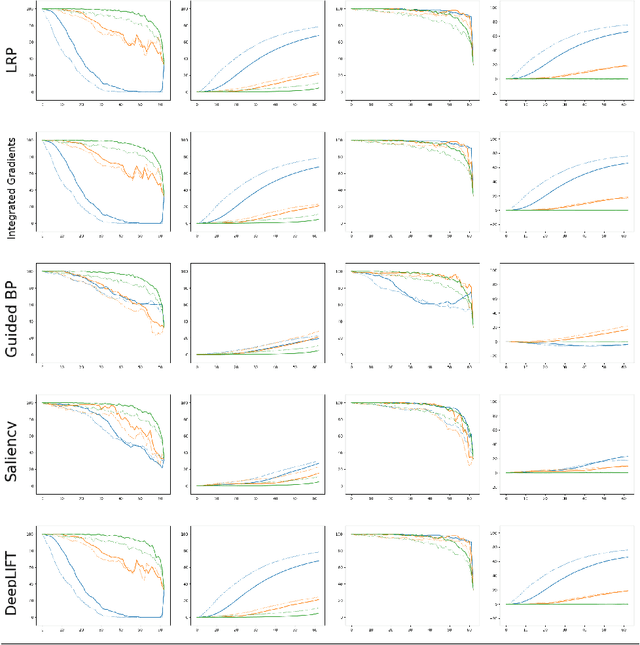

Strategies to exploit XAI to improve classification systems

Jun 09, 2023

Abstract: Explainable Artificial Intelligence (XAI) aims to provide insights into the decision-making process of AI models, allowing users to understand their results beyond their decisions. A significant goal of XAI is to improve the performance of AI models by providing explanations for their decision-making processes. However, most XAI literature focuses on how to explain an AI system, while less attention has been given to how XAI methods can be exploited to improve an AI system. In this work, a set of well-known XAI methods typically used with Machine Learning (ML) classification tasks are investigated to verify if they can be exploited, not just to provide explanations but also to improve the performance of the model itself. To this aim, two strategies to use the explanation to improve a classification system are reported and empirically evaluated on three datasets: Fashion-MNIST, CIFAR10, and STL10. Results suggest that explanations built by Integrated Gradients highlight input features that can be effectively used to improve classification performance.
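For readers unfamiliar with Integrated Gradients, the following plain-Python sketch shows how the attributions are computed for a small differentiable function. It is an illustration only: the toy function, the all-zero baseline, the finite-difference gradient, and the midpoint Riemann sum are assumptions made for the example, and the sketch does not reproduce the two strategies evaluated in the paper.

```python
def numerical_gradient(f, x, eps=1e-5):
    """Central-difference estimate of the gradient of f at x."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def integrated_gradients(f, x, baseline, steps=50):
    """Attribute f(x) - f(baseline) to each input feature.

    Gradients are averaged along the straight path from `baseline` to `x`
    (midpoint rule) and scaled by the per-feature displacement.
    """
    avg_grad = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(numerical_gradient(f, point)):
            avg_grad[i] += g / steps
    return [(xi - b) * g for xi, b, g in zip(x, baseline, avg_grad)]


def toy_model(x):
    """Hypothetical scalar 'model output' used only for this example."""
    return x[0] * x[1] + x[2] ** 2


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(toy_model, x, baseline)
```

A useful sanity check is the completeness property: the attributions sum, up to numerical error, to `toy_model(x) - toy_model(baseline)`. Here the third feature receives most of the attribution because the squared term dominates the output.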

Hidden Classification Layers: a study on Data Hidden Representations with a Higher Degree of Linear Separability between the Classes

Jun 09, 2023

Abstract: In the context of classification problems, Deep Learning (DL) approaches represent the state of the art. Many DL approaches are based on variations of standard multi-layer feed-forward neural networks. These are also referred to as deep networks. The basic idea is that each hidden neural layer accomplishes a data transformation which is expected to make the data representation "somewhat more linearly separable" than the previous one to obtain a final data representation which is as linearly separable as possible. However, determining the appropriate neural network parameters that can perform these transformations is a critical problem. In this paper, we investigate the impact on deep network classifier performance of a training approach favouring solutions where data representations at the hidden layers have a higher degree of linear separability between the classes with respect to standard methods. To this aim, we propose a neural network architecture which induces an error function involving the outputs of all the network layers. Although similar approaches have already been partially discussed in the past literature, here we propose a new architecture with a novel error function and an extensive experimental analysis. This experimental analysis was made in the context of image classification tasks considering four widely used datasets. The results show that our approach improves the accuracy on the test set in all the considered cases.

Machine Learning Strategies to Improve Generalization in EEG-based Emotion Assessment: a Systematic Review

Dec 16, 2022

Abstract: A systematic review of machine-learning strategies for improving generalizability (cross-subject and cross-session) in electroencephalography (EEG)-based emotion classification was carried out. In this context, the non-stationarity of EEG signals is a critical issue and can lead to the Dataset Shift problem. Several architectures and methods have been proposed to address this issue, mainly based on transfer learning methods. 418 papers were retrieved from the Scopus, IEEE Xplore and PubMed databases through a search query focusing on modern machine learning techniques for generalization in EEG-based emotion assessment. Among these papers, 75 were found eligible based on their relevance to the problem. Studies lacking a specific cross-subject and cross-session validation strategy and making use of other biosignals as support were excluded. On the basis of the selected papers' analysis, a taxonomy of the studies employing Machine Learning (ML) methods was proposed, together with a brief discussion on the different ML approaches involved. The studies with the best results in terms of average classification accuracy were identified, supporting that transfer learning methods seem to perform better than other approaches. A discussion is provided on the impact of (i) the emotion theoretical models and (ii) psychological screening of the experimental sample on the classifier performance.

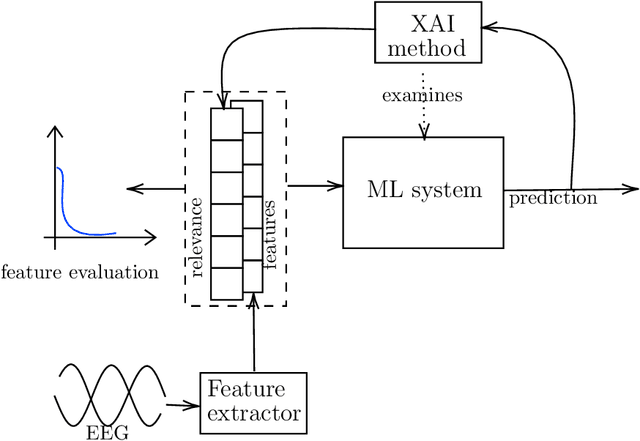

Toward the application of XAI methods in EEG-based systems

Oct 12, 2022

Abstract: An interesting case of the well-known Dataset Shift Problem is the classification of Electroencephalogram (EEG) signals in the context of Brain-Computer Interface (BCI). The non-stationarity of EEG signals can lead to poor generalisation performance in BCI classification systems used in different sessions, even with the same subject. In this paper, we start from the hypothesis that the Dataset Shift problem can be alleviated by exploiting suitable eXplainable Artificial Intelligence (XAI) methods to locate and transform the relevant characteristics of the input for the goal of classification. In particular, we focus on an experimental analysis of explanations produced by several XAI methods on an ML system trained on a typical EEG dataset for emotion recognition. Results show that many relevant components found by XAI methods are shared across the sessions and can be used to build a system able to generalise better. However, relevant components of the input signal also appear to be highly dependent on the input itself.

Unsupervised detection of structural damage using Variational Autoencoder and a One-Class Support Vector Machine

Oct 11, 2022

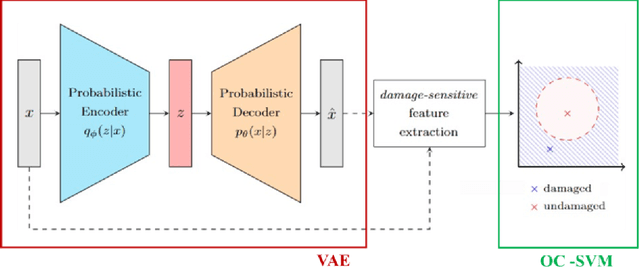

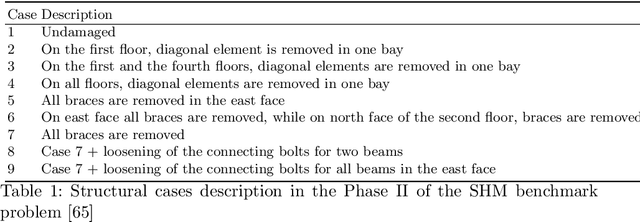

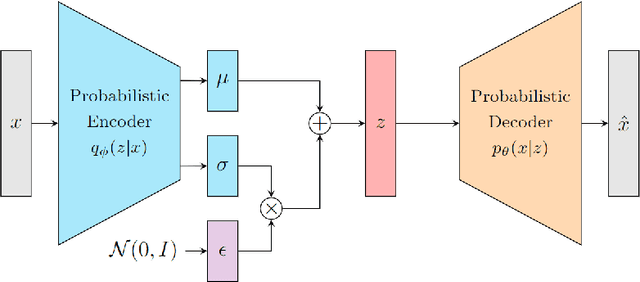

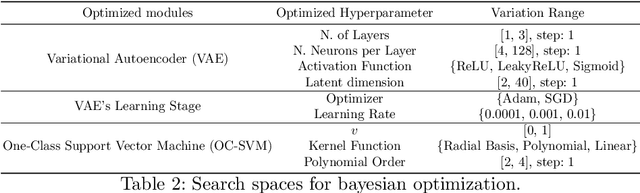

Abstract: In recent years, Artificial Neural Networks (ANNs) have been introduced in Structural Health Monitoring (SHM) systems. An unsupervised, data-driven approach allows the ANN to be trained on data acquired from the undamaged structure in order to detect structural damage. In standard approaches, after the training stage, a decision rule is manually defined to detect anomalous data. However, this process could be made automatic using machine learning methods, whose performance is maximised using hyperparameter optimization techniques. The paper proposes an unsupervised method with a data-driven approach to detect structural anomalies. The methodology consists of: (i) a Variational Autoencoder (VAE) to approximate the undamaged data distribution and (ii) a One-Class Support Vector Machine (OC-SVM) to discriminate different health conditions using damage-sensitive features extracted from the VAE's signal reconstruction. The method is applied to a scale steel structure that was tested in nine damage scenarios by the IASC-ASCE Structural Health Monitoring Task Group.

On The Effects Of Data Normalisation For Domain Adaptation On EEG Data

Oct 03, 2022

Abstract: In the Machine Learning (ML) literature, a well-known problem is the Dataset Shift problem where, contrary to the standard ML hypothesis, the data in the training and test sets can follow different probability distributions, leading ML systems to poor generalisation performance. This problem is intensely felt in the Brain-Computer Interface (BCI) context, where bio-signals such as electroencephalographic (EEG) signals are often used. In fact, EEG signals are highly non-stationary both over time and between different subjects. To overcome this problem, several proposed solutions are based on recent transfer learning approaches such as Domain Adaptation (DA). In several cases, however, the actual causes of the improvements remain ambiguous. This paper focuses on the impact of data normalisation, or standardisation, strategies applied together with DA methods. In particular, using the SEED, DEAP, and BCI Competition IV 2a EEG datasets, we experimentally evaluated the impact of different normalisation strategies applied with and without several well-known DA methods, comparing the obtained performance. The results show that the choice of the normalisation strategy plays a key role in classifier performance in DA scenarios and, interestingly, in several cases, the use of only an appropriate normalisation scheme outperforms the DA technique.
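To illustrate why the normalisation strategy can matter so much under dataset shift, the sketch below contrasts two simple z-score strategies in plain Python. It is a toy example: the two strategies, the function names, and the made-up single-feature "recordings" are assumptions for illustration and are not necessarily the strategies compared in the paper.

```python
from statistics import mean, pstdev


def zscore(values, mu=None, sigma=None):
    """Standardise `values`; statistics are estimated from them unless given."""
    mu = mean(values) if mu is None else mu
    sigma = pstdev(values) if sigma is None else sigma
    if sigma == 0:
        sigma = 1.0
    return [(v - mu) / sigma for v in values]


def normalise_global(recordings):
    """One mean/std estimated from all subjects pooled together."""
    pooled = [v for values in recordings.values() for v in values]
    mu, sigma = mean(pooled), pstdev(pooled)
    return {s: zscore(values, mu, sigma) for s, values in recordings.items()}


def normalise_per_subject(recordings):
    """Separate mean/std for every subject (or session)."""
    return {s: zscore(values) for s, values in recordings.items()}


# Hypothetical feature values: same pattern, different offset and scale.
recordings = {"subject_A": [1.0, 2.0, 3.0], "subject_B": [10.0, 20.0, 30.0]}

global_norm = normalise_global(recordings)
subject_norm = normalise_per_subject(recordings)
```

With per-subject statistics the two toy subjects end up with identical normalised features, so the subject-specific offset and scale are removed before any classifier or DA method sees the data; with pooled statistics the two subjects remain in clearly different ranges.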
