Robert E Blackwell

Can Large Language Models Reason about the Region Connection Calculus?

Nov 29, 2024

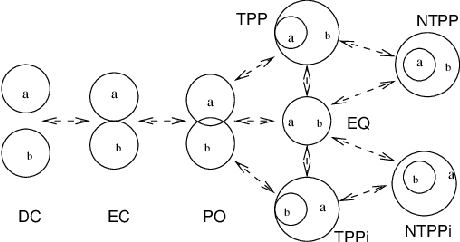

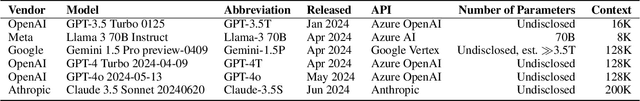

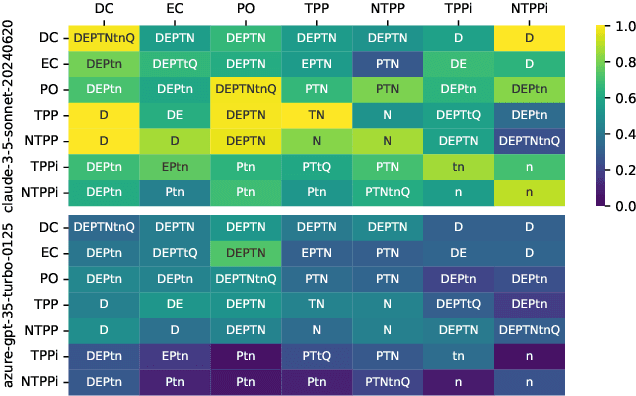

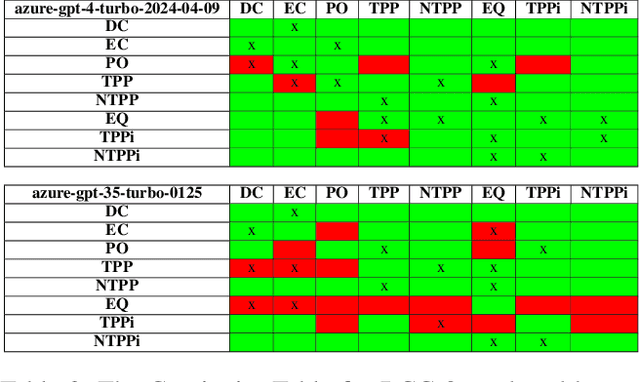

Abstract:Qualitative Spatial Reasoning is a well explored area of Knowledge Representation and Reasoning and has multiple applications ranging from Geographical Information Systems to Robotics and Computer Vision. Recently, many claims have been made for the reasoning capabilities of Large Language Models (LLMs). Here, we investigate the extent to which a set of representative LLMs can perform classical qualitative spatial reasoning tasks on the mereotopological Region Connection Calculus, RCC-8. We conduct three pairs of experiments (reconstruction of composition tables, alignment to human composition preferences, conceptual neighbourhood reconstruction) using state-of-the-art LLMs; in each pair one experiment uses eponymous relations and one, anonymous relations (to test the extent to which the LLM relies on knowledge about the relation names obtained during training). All instances are repeated 30 times to measure the stochasticity of the LLMs.

Evaluating the Ability of Large Language Models to Reason about Cardinal Directions

Jun 24, 2024

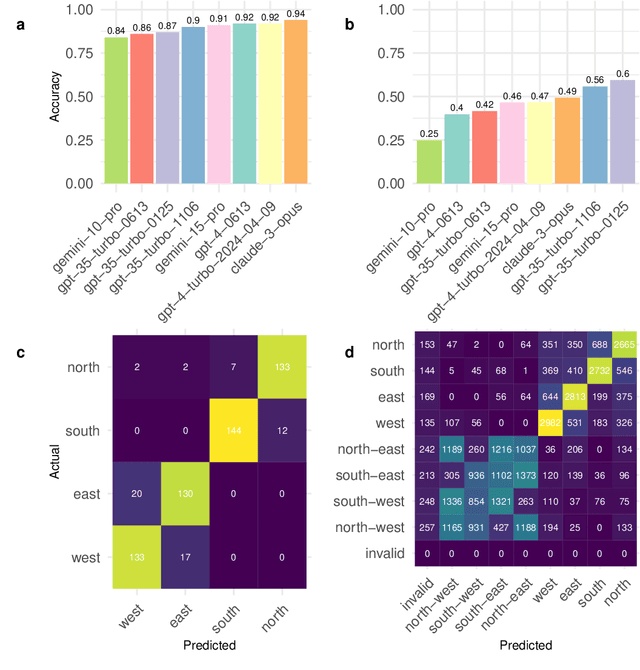

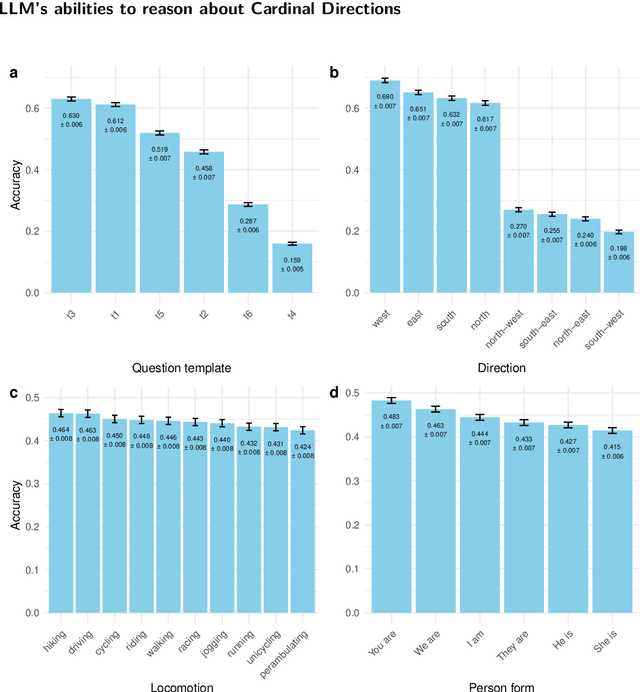

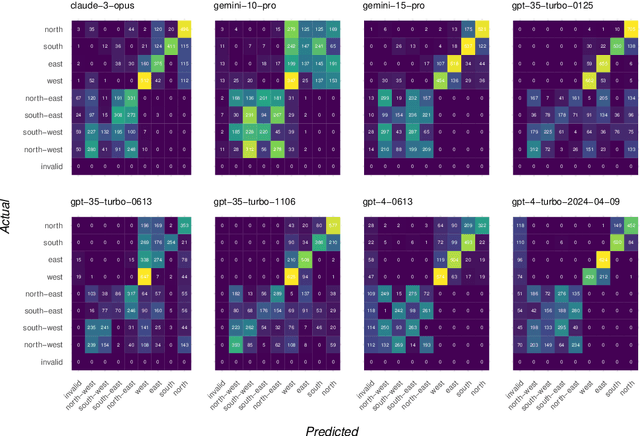

Abstract:We investigate the abilities of a representative set of Large language Models (LLMs) to reason about cardinal directions (CDs). To do so, we create two datasets: the first, co-created with ChatGPT, focuses largely on recall of world knowledge about CDs; the second is generated from a set of templates, comprehensively testing an LLM's ability to determine the correct CD given a particular scenario. The templates allow for a number of degrees of variation such as means of locomotion of the agent involved, and whether set in the first , second or third person. Even with a temperature setting of zero, Our experiments show that although LLMs are able to perform well in the simpler dataset, in the second more complex dataset no LLM is able to reliably determine the correct CD, even with a temperature setting of zero.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge