Rida Miraj

KdeHumor at SemEval-2020 Task 7: A Neural Network Model for Detecting Funniness in Dataset Humicroedit

May 11, 2021

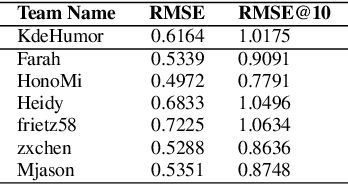

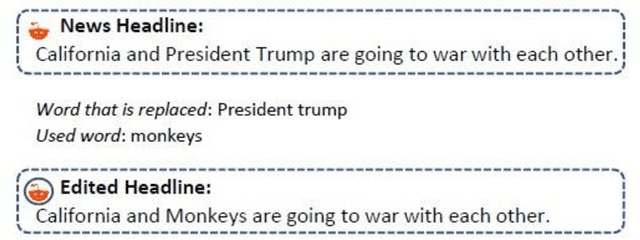

Abstract: This paper describes our contribution to SemEval-2020 Task 7: Assessing Humor in Edited News Headlines. We present a method based on a deep neural network. In recent years, considerable attention has been devoted to humor production and perception. Our team, KdeHumor, employs recurrent neural network models, including bidirectional LSTMs (BiLSTMs). Moreover, we utilize state-of-the-art pre-trained sentence embedding techniques. We analyze the performance of our method and demonstrate the contribution of each component of our architecture.

Integrating Extracted Information from BERT and Multiple Embedding Methods with the Deep Neural Network for Humour Detection

May 11, 2021

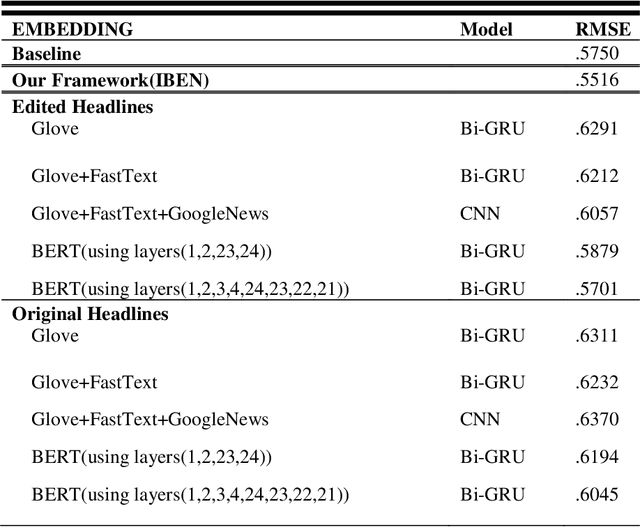

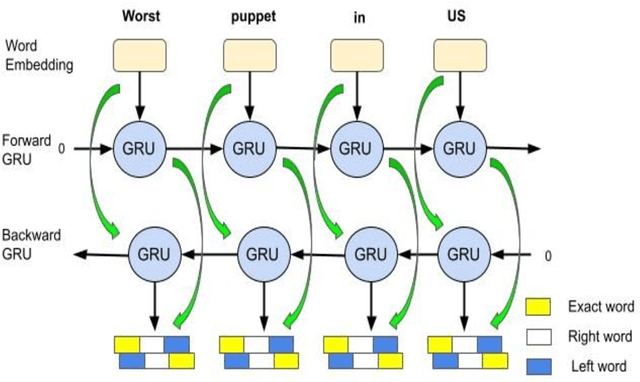

Abstract: Humour detection in sentences has been an interesting and challenging task in recent years. Most prior work on humour detection has relied on traditional embedding approaches such as Word2Vec or GloVe; more recently, BERT sentence embeddings have also been used for this task. In this paper, we propose a framework for humour detection in short texts taken from news headlines. Our proposed framework (IBEN) extracts information from written text using different layers of BERT. After several trials, weights were assigned to the different layers of the BERT model. The extracted information was then passed to a Bi-GRU neural network as an embedding matrix. We also utilized the properties of some external embedding models. A multi-kernel convolution in our neural network was also employed to extract higher-level sentence representations. This framework performed very well on the task of humour detection.
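
The two ideas named in the abstract, weighting BERT layers and applying convolutions with several kernel widths, can be illustrated with a minimal pure-Python sketch. This is not the authors' implementation: the function names, the softmax normalisation of the layer weights, the kernel widths (2, 3, 4), and the random stand-in data are all assumptions made for illustration, and the Bi-GRU stage is omitted for brevity.

```python
import math
import random


def combine_layers(layer_outputs, layer_scores):
    """Softmax-weighted sum over layers (the normalisation is an assumption).

    layer_outputs[l][t] is the hidden vector of token t at layer l.
    Returns one vector per token: a seq_len x hidden embedding matrix.
    """
    top = max(layer_scores)
    exps = [math.exp(s - top) for s in layer_scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    seq_len = len(layer_outputs[0])
    hidden = len(layer_outputs[0][0])
    return [
        [sum(w * layer[t][d] for w, layer in zip(weights, layer_outputs))
         for d in range(hidden)]
        for t in range(seq_len)
    ]


def conv_max_pool(x, kernel):
    """One 1-D convolution filter over token windows, ReLU, then max over time.

    kernel[j][d] weights dimension d of the j-th token in each window.
    """
    width = len(kernel)
    best = 0.0  # ReLU floor: negative activations never exceed this
    for start in range(len(x) - width + 1):
        activation = sum(
            kernel[j][d] * x[start + j][d]
            for j in range(width)
            for d in range(len(x[0]))
        )
        best = max(best, activation)
    return best


def multi_kernel_features(x, kernels):
    """Apply filters of different widths and collect one feature per filter."""
    return [conv_max_pool(x, kernel) for kernel in kernels]


# Random stand-ins for real BERT layer outputs (hypothetical sizes).
random.seed(0)
NUM_LAYERS, SEQ_LEN, HIDDEN = 4, 10, 8
layers = [
    [[random.gauss(0, 1) for _ in range(HIDDEN)] for _ in range(SEQ_LEN)]
    for _ in range(NUM_LAYERS)
]
layer_scores = [0.1, 0.2, 0.3, 0.4]  # hypothetical per-layer weights

embedding = combine_layers(layers, layer_scores)
kernels = [
    [[random.gauss(0, 1) for _ in range(HIDDEN)] for _ in range(width)]
    for width in (2, 3, 4)
]
features = multi_kernel_features(embedding, kernels)
print(len(embedding), len(embedding[0]), len(features))
```

In a trained model the layer scores and kernel values would be learned or tuned rather than fixed by hand, and each kernel width would typically have many filters rather than one.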
