Rene Vossen

Learning Robust Manipulation Skills with Guided Policy Search via Generative Motor Reflexes

Feb 19, 2019

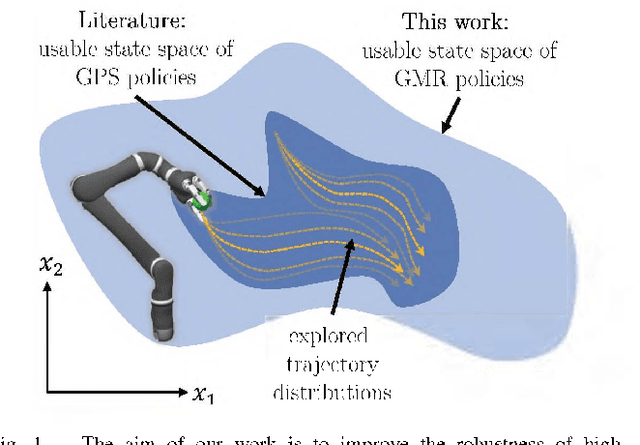

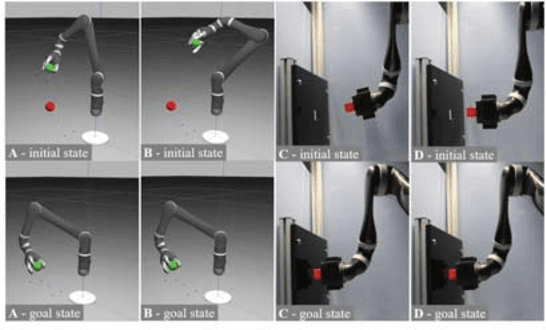

Abstract:Guided Policy Search enables robots to learn control policies for complex manipulation tasks efficiently. Therein, the control policies are represented as high-dimensional neural networks which derive robot actions based on states. However, due to the small number of real-world trajectory samples in Guided Policy Search, the resulting neural networks are only robust in the neighbourhood of the trajectory distribution explored by real-world interactions. In this paper, we present a new policy representation called Generative Motor Reflexes, which is able to generate robust actions over a broader state space compared to previous methods. In contrast to prior state-action policies, Generative Motor Reflexes map states to parameters for a state-dependent motor reflex, which is then used to derive actions. Robustness is achieved by generating similar motor reflexes for many states. We evaluate the presented method in simulated and real-world manipulation tasks, including contact-rich peg-in-hole tasks. Using these evaluation tasks, we show that policies represented as Generative Motor Reflexes lead to robust manipulation skills also outside the explored trajectory distribution with less training needs compared to previous methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge