Rebecca Pelke

Mixed-Precision Training and Compilation for RRAM-based Computing-in-Memory Accelerators

Jan 29, 2026

Abstract:Computing-in-Memory (CIM) accelerators are a promising solution for accelerating Machine Learning (ML) workloads, as they perform Matrix-Vector Multiplications (MVMs) on crossbar arrays directly in memory. Although the bit widths of the crossbar inputs and cells are very limited, most CIM compilers do not support quantization below 8 bit. As a result, a single MVM requires many compute cycles, and weights cannot be efficiently stored in a single crossbar cell. To address this problem, we propose a mixed-precision training and compilation framework for CIM architectures. The biggest challenge is the massive search space, which makes it difficult to find good quantization parameters. We therefore introduce a reinforcement learning-based strategy to find suitable quantization configurations that balance latency and accuracy. In the best case, our approach achieves up to a 2.48x speedup over existing state-of-the-art solutions, with an accuracy loss of only 0.086 %.
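The link between bit width and MVM cost can be illustrated with a small sketch. It assumes bit-serial input streaming and bit-sliced weights, a scheme commonly used on crossbars; the abstract does not state which scheme the framework uses. The function `mvm_cost` and the parameters `cell_bits` and `dac_bits` are hypothetical names chosen for illustration.

```python
from math import ceil


def mvm_cost(weight_bits, input_bits, cell_bits=1, dac_bits=1):
    """Return (input_cycles, cells_per_weight) for one MVM.

    Assumption: inputs are streamed dac_bits at a time and each weight
    is sliced across cells that hold cell_bits each.
    """
    input_cycles = ceil(input_bits / dac_bits)
    cells_per_weight = ceil(weight_bits / cell_bits)
    return input_cycles, cells_per_weight


# 8-bit weights and inputs on binary cells with 1-bit inputs
baseline = mvm_cost(weight_bits=8, input_bits=8)
# a layer quantized to 2-bit weights and 4-bit inputs
mixed = mvm_cost(weight_bits=2, input_bits=4)
print(baseline, mixed)
```

Under these assumptions, lowering the precision of a layer reduces both the number of input cycles and the number of cells per weight, which is the trade-off against accuracy that a per-layer search has to balance.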

Optimizing Binary and Ternary Neural Network Inference on RRAM Crossbars using CIM-Explorer

May 20, 2025

Abstract:Using Resistive Random Access Memory (RRAM) crossbars in Computing-in-Memory (CIM) architectures offers a promising solution to overcome the von Neumann bottleneck. Due to non-idealities like cell variability, RRAM crossbars are often operated in binary mode, utilizing only two states: Low Resistive State (LRS) and High Resistive State (HRS). Binary Neural Networks (BNNs) and Ternary Neural Networks (TNNs) are well-suited for this hardware due to their efficient mapping. Existing software projects for RRAM-based CIM typically focus on only one aspect: compilation, simulation, or Design Space Exploration (DSE). Moreover, they often rely on classical 8 bit quantization. To address these limitations, we introduce CIM-Explorer, a modular toolkit for optimizing BNN and TNN inference on RRAM crossbars. CIM-Explorer includes an end-to-end compiler stack, multiple mapping options, and simulators, enabling a DSE flow for accuracy estimation across different crossbar parameters and mappings. CIM-Explorer can accompany the entire design process, from early accuracy estimation for specific crossbar parameters, to selecting an appropriate mapping, and compiling BNNs and TNNs for a finalized crossbar chip. In DSE case studies, we demonstrate the expected accuracy for various mappings and crossbar parameters. CIM-Explorer can be found on GitHub.
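As an illustration of how ternary weights can be placed on binary cells, the sketch below uses a differential mapping: each weight is split into a "positive" and a "negative" binary cell, and the two column results are subtracted. This is one commonly used option and is an assumption here; the abstract does not list which mappings CIM-Explorer provides. Cells are idealized (LRS = 1, HRS = 0), and all function names are hypothetical.

```python
def split_ternary(weights):
    """Split a ternary matrix (-1, 0, 1) into two binary matrices."""
    pos = [[1 if w == 1 else 0 for w in row] for row in weights]
    neg = [[1 if w == -1 else 0 for w in row] for row in weights]
    return pos, neg


def mvm(x, matrix):
    """Ideal crossbar MVM: y[j] = sum over rows i of x[i] * matrix[i][j]."""
    cols = len(matrix[0])
    return [sum(x[i] * matrix[i][j] for i in range(len(x))) for j in range(cols)]


def ternary_mvm(x, weights):
    """Compute x @ W using only binary cells and a digital subtraction."""
    pos, neg = split_ternary(weights)
    return [p - n for p, n in zip(mvm(x, pos), mvm(x, neg))]


W = [[1, -1], [0, 1], [-1, -1]]
x = [1, 1, 1]
print(ternary_mvm(x, W), mvm(x, W))
```

With ideal cells, the differential result matches the direct ternary product. Non-idealities such as cell variability break this equality, which is what accuracy simulation across mappings is meant to quantify.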

Introducing Instruction-Accurate Simulators for Performance Estimation of Autotuning Workloads

May 19, 2025

Abstract:Accelerating Machine Learning (ML) workloads requires efficient methods due to their large optimization space. Autotuning has emerged as an effective approach for systematically evaluating variations of implementations. Traditionally, autotuning requires the workloads to be executed on the target hardware (HW). We present an interface that allows executing autotuning workloads on simulators. This approach offers high scalability when the availability of the target HW is limited, as many simulations can be run in parallel on any accessible HW. Additionally, we evaluate the feasibility of using fast instruction-accurate simulators for autotuning. We train various predictors to forecast the performance of ML workload implementations on the target HW based on simulation statistics. Our results demonstrate that the tuned predictors are highly effective. The best workload implementation in terms of actual run time on the target HW is always within the top 3 % of predictions for the tested x86, ARM, and RISC-V-based architectures. In the best case, this approach outperforms native execution on the target HW for embedded architectures when running as few as three samples on three simulators in parallel.

Evaluating the Scalability of Binary and Ternary CNN Workloads on RRAM-based Compute-in-Memory Accelerators

May 12, 2025

Abstract:The increasing computational demand of Convolutional Neural Networks (CNNs) necessitates energy-efficient acceleration strategies. Compute-in-Memory (CIM) architectures based on Resistive Random Access Memory (RRAM) offer a promising solution by reducing data movement and enabling low-power in-situ computations. However, their efficiency is limited by the high cost of peripheral circuits, particularly Analog-to-Digital Converters (ADCs). Large crossbars and low ADC resolutions are often used to mitigate this, potentially compromising accuracy. This work introduces novel simulation methods to model the impact of resistive wire parasitics and limited ADC resolution on RRAM crossbars. Our parasitics model employs a vectorised algorithm to compute crossbar output currents with errors below 0.15% compared to SPICE. Additionally, we propose a variable step-size ADC and a calibration methodology that significantly reduces ADC resolution requirements. These accuracy models are integrated with a statistics-based energy model. Using our framework, we conduct a comparative analysis of binary and ternary CNNs. Experimental results demonstrate that the ternary CNNs exhibit greater resilience to wire parasitics and lower ADC resolution but suffer a 40% reduction in energy efficiency. These findings provide valuable insights for optimising RRAM-based CIM accelerators for energy-efficient deep learning.
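To make the effect of limited ADC resolution concrete, the following sketch models a plain uniform ADC. It is not the variable step-size ADC or the calibration method proposed in the paper, whose details are not given in the abstract; it only shows how fewer bits coarsen the read-out of a column current. The name `adc_quantize` and its parameters are hypothetical.

```python
def adc_quantize(current, full_scale, bits):
    """Quantize a column current with an ideal uniform ADC.

    Assumption: the input range is [0, full_scale] and values outside
    that range are clipped before conversion.
    """
    levels = 2 ** bits - 1
    clipped = min(max(current, 0.0), full_scale)
    code = round(clipped / full_scale * levels)
    return code * full_scale / levels


# The same current read with decreasing resolution
for bits in (8, 4, 2):
    print(bits, adc_quantize(0.37, 1.0, bits))
```

In this uniform model the worst-case error is half a step, `full_scale / (2 * (2**bits - 1))`, so every bit removed roughly doubles the error. A non-uniform step size can spend the available levels where output currents actually occur, which is the kind of saving the abstract refers to.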

A Calibratable Model for Fast Energy Estimation of MVM Operations on RRAM Crossbars

May 07, 2024

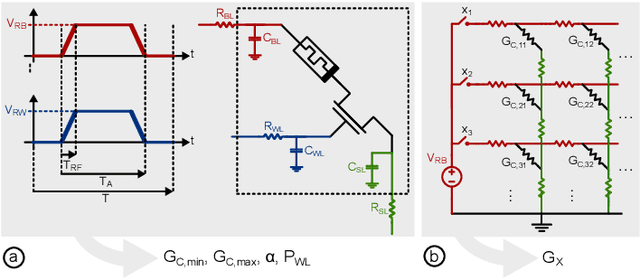

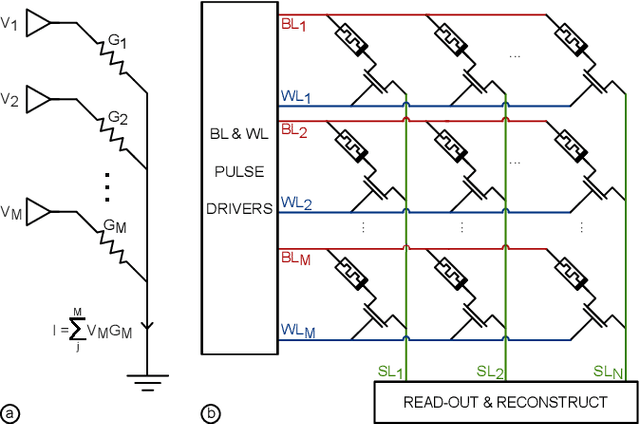

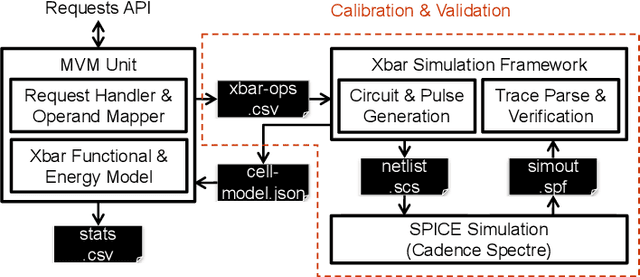

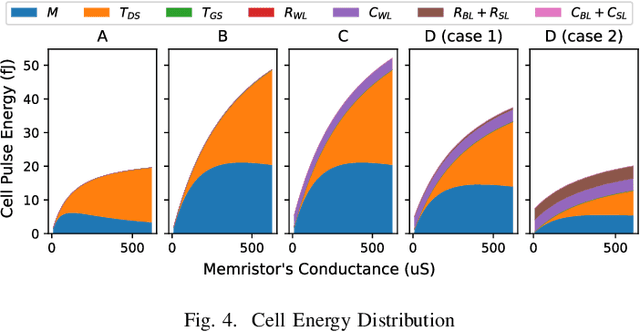

Abstract:The surge in AI usage demands innovative power reduction strategies. Novel Compute-in-Memory (CIM) architectures, leveraging advanced memory technologies, hold the potential for significantly lowering energy consumption by integrating storage with parallel Matrix-Vector-Multiplications (MVMs). This study addresses the 1T1R RRAM crossbar, a core component in numerous CIM architectures. We introduce an abstract model and a calibration methodology for estimating operational energy. Our tool condenses circuit-level behaviour into a few parameters, facilitating energy assessments for DNN workloads. Validation against low-level SPICE simulations demonstrates speedups of up to 1000x and energy estimations with errors below 1%.

CLSA-CIM: A Cross-Layer Scheduling Approach for Computing-in-Memory Architectures

Jan 17, 2024

Abstract:The demand for efficient machine learning (ML) accelerators is growing rapidly, driving the development of novel computing concepts such as resistive random access memory (RRAM)-based tiled computing-in-memory (CIM) architectures. CIM allows computation within the memory unit, resulting in faster data processing and reduced power consumption. Efficient compiler algorithms are essential to exploit the potential of tiled CIM architectures. While conventional ML compilers focus on code generation for CPUs, GPUs, and other von Neumann architectures, adaptations are needed to cover CIM architectures. Cross-layer scheduling is a promising approach, as it enhances the utilization of CIM cores, thereby accelerating computations. Although similar concepts are implicitly used in previous work, there is a lack of clear and quantifiable algorithmic definitions for cross-layer scheduling for tiled CIM architectures. To close this gap, we present CLSA-CIM, a cross-layer scheduling algorithm for tiled CIM architectures. We integrate CLSA-CIM with existing weight-mapping strategies and compare performance against state-of-the-art (SOTA) scheduling algorithms. CLSA-CIM improves the utilization by up to 17.9x, resulting in an overall speedup of up to 29.2x compared to SOTA.
