Rasika Karkare

A Pre-training Oracle for Predicting Distances in Social Networks

Jun 06, 2021

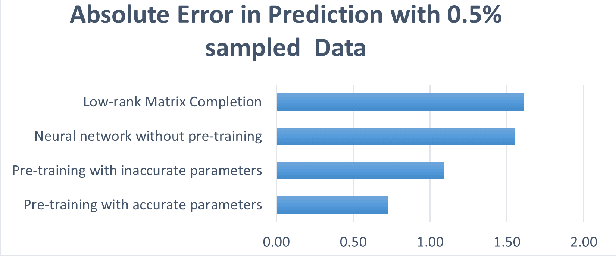

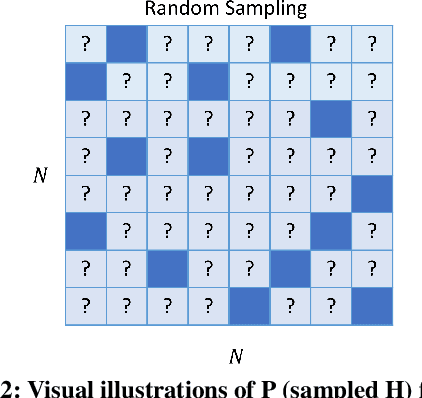

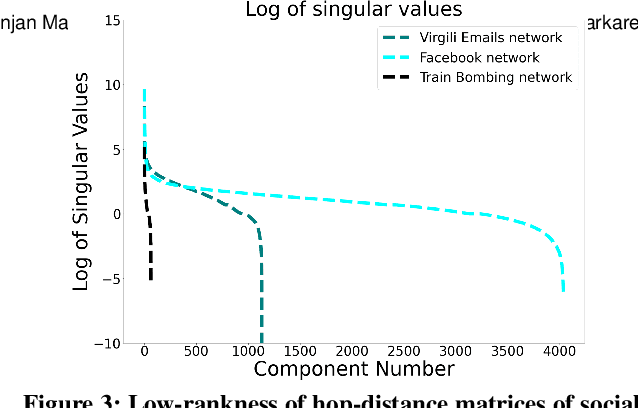

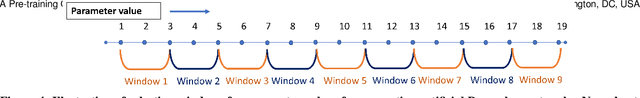

Abstract: In this paper, we propose a novel method to make distance predictions in real-world social networks. As predicting missing distances is a difficult problem, we take a two-stage approach. Structural parameters for families of synthetic networks are first estimated from a small set of measurements of a real-world network, and these synthetic networks are then used to pre-train the predictive neural networks. Since our model first searches for the most suitable synthetic graph parameters, which can be used as an "oracle" to create arbitrarily large training data sets, we call our approach "Oracle Search Pre-training" (OSP). For example, many real-world networks exhibit a power-law structure in their node degree distribution, so a power-law model can provide a foundation for the desired oracle to generate synthetic pre-training networks, provided the appropriate power-law graph parameters can be estimated. Accordingly, we conduct experiments on real-world Facebook, Email, and Train Bombing networks and show that OSP outperforms models without pre-training, models pre-trained with inaccurate parameters, and other distance prediction schemes such as Low-rank Matrix Completion. In particular, we achieve a prediction error of less than one hop with only 1% of sampled distances from the social network. OSP can be easily extended to other domains, such as random networks, by choosing an appropriate model to generate synthetic training data, and therefore promises to impact many different network learning problems.
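To make the "oracle" idea more concrete, the following is a minimal sketch of generating a synthetic network with a heavy-tailed degree distribution and observing only a small fraction of its pairwise hop distances. The abstract does not say which generator or sampling scheme is used, so preferential attachment is an assumed stand-in for a power-law model, and the function names and the parameters `n`, `m`, and `fraction` are hypothetical.

```python
import random
from collections import deque


def preferential_attachment_graph(n, m, seed=0):
    """Grow an undirected graph on n nodes (requires n > m >= 1).

    Assumption: preferential attachment stands in for a power-law generator.
    Each new node links to m distinct existing nodes, chosen with probability
    proportional to their current degree.
    """
    rng = random.Random(seed)
    adj = {i: set() for i in range(n)}
    # Seed graph: a clique on the first m + 1 nodes.
    for u in range(m + 1):
        for v in range(u + 1, m + 1):
            adj[u].add(v)
            adj[v].add(u)
    # Each node appears in this list once per incident edge, so a uniform
    # draw from it is a degree-proportional draw over nodes.
    endpoints = [u for u in range(m + 1) for _ in adj[u]]
    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(endpoints))
        for t in sorted(targets):
            adj[new].add(t)
            adj[t].add(new)
            endpoints.extend((new, t))
    return adj


def bfs_distances(adj, source):
    """Hop distances from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def sample_distances(adj, fraction, seed=0):
    """Return hop distances for a random fraction of unordered node pairs."""
    rng = random.Random(seed)
    nodes = sorted(adj)
    all_dist = {u: bfs_distances(adj, u) for u in nodes}
    pairs = [(u, v) for i, u in enumerate(nodes) for v in nodes[i + 1:]]
    k = max(1, int(fraction * len(pairs)))
    return {(u, v): all_dist[u][v] for u, v in rng.sample(pairs, k)}
```

Calling `sample_distances(preferential_attachment_graph(200, 2), 0.01)` yields the kind of sparse distance observations that a predictive model could be pre-trained on; the parameter search and the neural network described in the abstract are not shown here.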

Blind Image Denoising and Inpainting Using Robust Hadamard Autoencoders

Jan 26, 2021

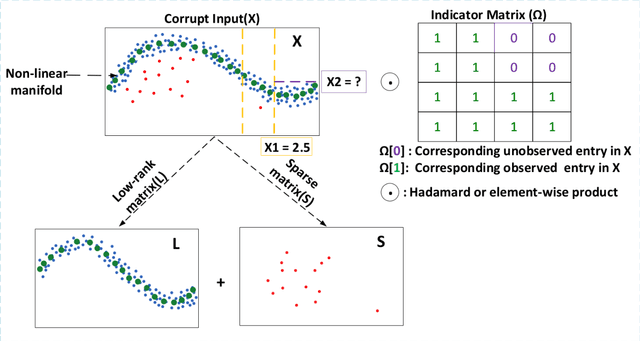

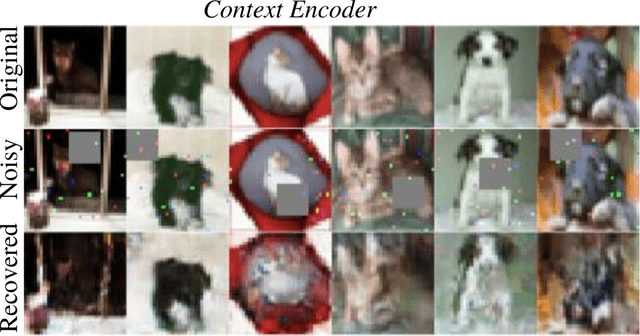

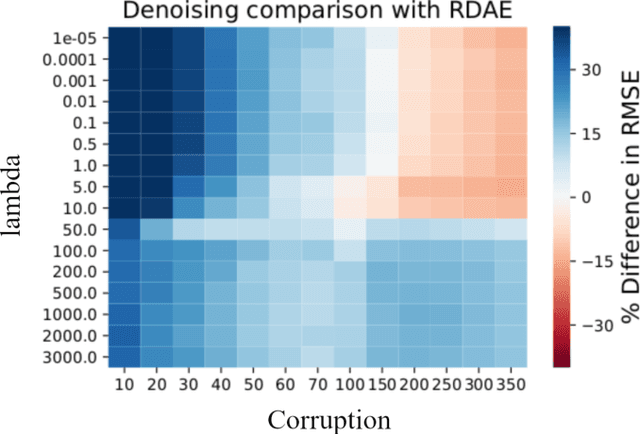

Abstract: In this paper, we demonstrate how deep autoencoders can be generalized to the case of inpainting and denoising, even when no clean training data is available. In particular, we show how neural networks can be trained to perform all of these tasks simultaneously. While deep autoencoders implemented by way of neural networks have demonstrated potential for denoising and anomaly detection, standard autoencoders have the drawback that they require access to clean data for training. However, recent work on Robust Deep Autoencoders (RDAEs) shows how autoencoders can be trained to eliminate outliers and noise in a dataset without access to any clean training data. Inspired by this work, we extend RDAEs to the case where data are not only noisy and have outliers, but are also only partially observed. Moreover, the dataset we train the neural network on has the properties that all entries have noise, some entries are corrupted by large mistakes, and many entries are not even known. Given such an algorithm, many standard tasks, such as denoising, image inpainting, and unobserved entry imputation, can all be accomplished simultaneously within the same framework. Herein we demonstrate these techniques on standard machine learning tasks, such as image inpainting and denoising for the MNIST and CIFAR10 datasets. However, these approaches are not only applicable to image processing problems, but also have wide-ranging impacts on datasets arising from real-world problems, such as manufacturing and network processing, where noisy, partially observed data naturally arise.
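As an illustration of how partially observed data might enter a training objective, the sketch below evaluates a reconstruction loss only on observed entries by multiplying each term elementwise with a binary mask. The abstract does not give the loss, so the form used here (squared error plus an L1 penalty on a separate outlier term) is an assumption, and `masked_robust_loss` and its parameters are hypothetical names.

```python
def masked_robust_loss(x, recon, outliers, mask, lam):
    """Loss restricted to observed entries (an assumed form, not the paper's).

    x, recon, outliers, mask are equal-length flat lists of numbers.
    mask[i] is 1 if x[i] was observed and 0 otherwise; unobserved entries
    of x may hold any numeric placeholder because they are multiplied by 0.
    """
    # Squared error between the data and (reconstruction + outlier term),
    # counted only where the mask is 1.
    fit = sum(
        m * (xi - ri - si) ** 2
        for xi, ri, si, m in zip(x, recon, outliers, mask)
    )
    # L1 penalty that encourages the outlier term to be sparse.
    sparsity = lam * sum(m * abs(si) for si, m in zip(outliers, mask))
    return fit + sparsity
```

Because unobserved entries contribute nothing to the loss, the reconstruction at those positions is unconstrained by the data and can serve as an imputed value.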
