Ramkumar Raghu

Performance of Queueing Models for MISO Content-Centric Networks

Nov 15, 2021

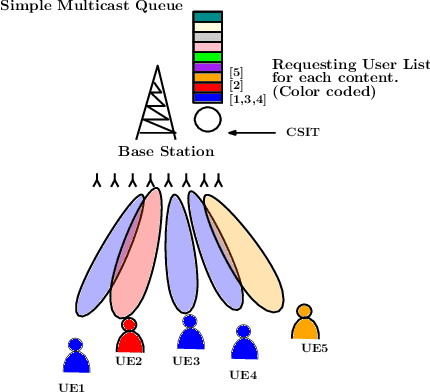

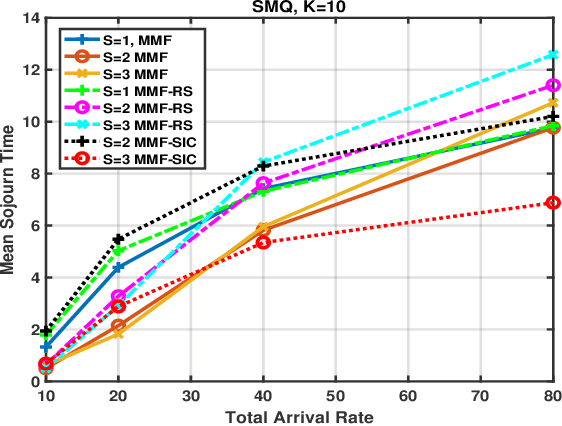

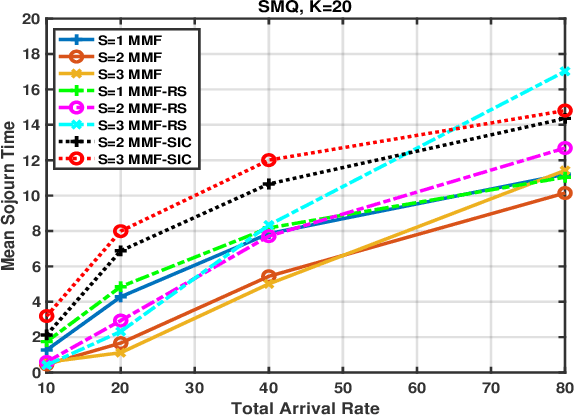

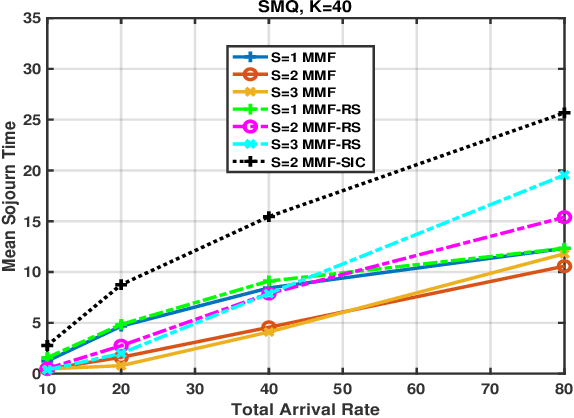

Abstract: MISO networks have garnered attention in wireless content-centric networks because of the additional degrees of freedom they provide. Several beamforming techniques, such as NOMA, OMA, SDMA and rate splitting, have been proposed for such networks. These techniques utilise the redundancy in content requests across users and leverage the spatial multicast and multiplexing gains of multi-antenna transmit beamforming to improve the content delivery rate. However, queueing delays and user traffic dynamics, which significantly affect the performance of these schemes, have generally been ignored. We study downlink queueing delays in a content-centric network with a single base station equipped with multiple transmit antennas, under several scheduling and beamforming schemes. These schemes are studied in combination with a recently proposed Simple Multicast Queue, which is used to improve the delay performance of the network. This work is particularly relevant for content delivery in 5G and eMBB networks.
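
The abstract does not spell out how the Simple Multicast Queue operates. As a rough illustration of why exploiting request redundancy can reduce delay, the sketch below assumes that a request for a file already waiting in the queue is merged into that file's entry and served by the same multicast transmission, and compares this with serving every request separately. Beamforming, channel conditions and rates are ignored entirely; the traffic model (uniform file popularity, exactly one transmission per slot), the parameters and the function name `simulate_multicast_queue` are hypothetical.

```python
import random
from collections import OrderedDict, deque


def simulate_multicast_queue(num_users, num_files, req_prob, num_slots, merge, seed=0):
    """Toy discrete-time model of a downlink request queue (illustrative only).

    Assumed model: in every slot each user independently requests a uniformly
    chosen file with probability req_prob, and the base station sends exactly
    one file per slot. With merge=True, a request for a file that is already
    queued joins that entry and is served by the same multicast transmission;
    with merge=False, every request is queued and served separately (FIFO).
    Returns (mean delay in slots, number of requests still waiting).
    """
    rng = random.Random(seed)
    merged = OrderedDict()  # file id -> arrival slots of its waiting requests
    fifo = deque()          # (file id, arrival slot) for each waiting request
    total_delay = 0
    served = 0
    for t in range(num_slots):
        # Arrivals for this slot.
        for _ in range(num_users):
            if rng.random() < req_prob:
                f = rng.randrange(num_files)
                if merge:
                    # setdefault keeps an existing entry in place (ordered by first request).
                    merged.setdefault(f, []).append(t)
                else:
                    fifo.append((f, t))
        # One transmission per slot: the head-of-line file (merged) or request (FIFO).
        if merge and merged:
            _, arrivals = merged.popitem(last=False)
        elif not merge and fifo:
            arrivals = [fifo.popleft()[1]]
        else:
            arrivals = []
        for a in arrivals:
            total_delay += t - a + 1  # delay includes the transmission slot itself
            served += 1
    waiting = sum(len(v) for v in merged.values()) if merge else len(fifo)
    mean_delay = total_delay / served if served else 0.0
    return mean_delay, waiting


if __name__ == "__main__":
    for merge in (True, False):
        delay, waiting = simulate_multicast_queue(10, 5, 0.3, 2000, merge=merge)
        print(f"merge={merge}: mean delay {delay:.2f} slots, {waiting} requests waiting")
```

With 10 users, 5 files and a request probability of 0.3, about three requests arrive per slot but only one transmission is possible, so the per-request FIFO backlog keeps growing, whereas the merged queue never holds more than 5 distinct files and each request is served within 5 slots.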

Deep Reinforcement Learning Based Power control for Wireless Multicast Systems

Oct 24, 2019

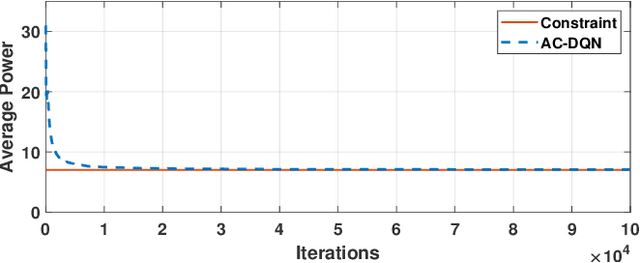

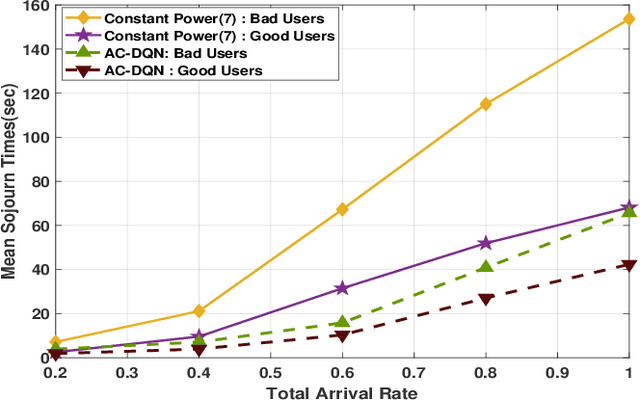

Abstract: We consider a multicast scheme recently proposed for a wireless downlink in [1]. It was shown earlier that power control can significantly improve its performance. However, obtaining the optimal power control for this system is intractable because of its very large state space. Therefore, in this paper we use deep reinforcement learning, approximating the Q-function with a deep neural network. We show that optimal power control can be learnt for reasonably large systems via this approach. The average power constraint is enforced via a Lagrange multiplier, which is also learnt. Finally, we demonstrate that a slight modification of the learning algorithm allows the optimal control to track time-varying system statistics.
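
The abstract states that the average power constraint is enforced through a Lagrange multiplier that is learnt alongside the policy. The sketch below isolates only that mechanism in a deliberately simplified single-slot setting and is not the paper's algorithm: there are no queue dynamics and no deep Q-network (greedy minimisation of the per-slot Lagrangian cost stands in for acting on a learnt Q-function), and the multiplier follows a projected stochastic subgradient step, a standard primal-dual choice assumed here. The power levels, channel gains, threshold, step size and the names `lagrangian_cost` and `learn_multiplier` are all hypothetical.

```python
import random

# Hypothetical toy parameters (not taken from the paper).
POWER_LEVELS = [0.0, 1.0, 2.0, 4.0]   # discrete transmit power choices
CHANNEL_GAINS = [0.25, 0.5, 1.0]      # equally likely fading states
SNR_THRESHOLD = 1.0                   # delivery succeeds iff gain * power >= threshold


def lagrangian_cost(gain, power, lam):
    """Per-slot Lagrangian cost: delivery-failure penalty plus lam * power."""
    failure = 0.0 if gain * power >= SNR_THRESHOLD else 1.0
    return failure + lam * power


def learn_multiplier(avg_power_budget, num_slots=100_000, step=1e-3, seed=0):
    """Primal-dual loop: greedy power choice plus a projected dual subgradient step.

    Returns (final multiplier, time-averaged power).
    """
    rng = random.Random(seed)
    lam = 0.0
    total_power = 0.0
    for _ in range(num_slots):
        gain = rng.choice(CHANNEL_GAINS)
        # Greedy action on the Lagrangian cost; in a DQN setting this would instead
        # be the argmin over actions of the learnt Q-function.
        power = min(POWER_LEVELS, key=lambda p: lagrangian_cost(gain, p, lam))
        total_power += power
        # Raise lam when this slot's power exceeds the budget, lower it otherwise,
        # and project back onto lam >= 0.
        lam = max(0.0, lam + step * (power - avg_power_budget))
    return lam, total_power / num_slots


if __name__ == "__main__":
    lam, avg_power = learn_multiplier(avg_power_budget=1.5)
    print(f"multiplier {lam:.3f}, average power {avg_power:.3f}")
```

With a budget of 1.5, the multiplier should settle near 0.25, the price at which transmitting in the weakest channel state (which needs power 4) stops paying off, and the long-run average power should come out close to 1.5 as the policy alternates between the two regimes. With a budget above the unconstrained average of 7/3, the multiplier instead stays near zero.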