Ramesh Kumar Sah

CUDLE: Learning Under Label Scarcity to Detect Cannabis Use in Uncontrolled Environments

Oct 04, 2024

Abstract: Wearable sensor systems have demonstrated great potential for real-time, objective monitoring of physiological health to support behavioral interventions. However, obtaining accurate labels in free-living environments remains difficult due to limited human supervision and the reliance on self-labeling by patients, making data collection and supervised learning particularly challenging. To address this issue, we introduce CUDLE (Cannabis Use Detection with Label Efficiency), a novel framework that leverages self-supervised learning with real-world wearable sensor data to tackle a pressing healthcare challenge: the automatic detection of cannabis consumption in free-living environments. CUDLE identifies cannabis consumption moments from sensor-derived data through a contrastive learning framework. It first learns robust representations via a self-supervised pretext task with data augmentation. These representations are then fine-tuned in a downstream task with a shallow classifier, enabling CUDLE to outperform traditional supervised methods, especially with limited labeled data. To evaluate our approach, we conducted a clinical study with 20 cannabis users, collecting over 500 hours of wearable sensor data alongside user-reported cannabis use moments through EMA (Ecological Momentary Assessment) methods. Our extensive analysis of the collected data shows that CUDLE achieves an accuracy of 73.4%, compared with 71.1% for the supervised approach, with the performance gap widening as the number of labels decreases. Notably, CUDLE not only surpasses the supervised model while using 75% fewer labels, but also reaches peak performance with far fewer subjects.
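The contrastive pretext stage described above can be illustrated with a small sketch. The abstract does not name the loss or the augmentations, so this assumes a SimCLR-style NT-Xent loss and Gaussian jitter as the augmentation, and it takes embeddings as plain lists (the encoder that would produce them is omitted). The names `jitter`, `cosine`, and `nt_xent` are hypothetical and are not CUDLE's code.

```python
import math
import random


def jitter(window, rng, sigma=0.05):
    """Assumed augmentation: add Gaussian noise to every sample of a 1-D sensor window."""
    return [x + rng.gauss(0.0, sigma) for x in window]


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nt_xent(z_a, z_b, tau=0.5):
    """SimCLR-style NT-Xent loss over N positive pairs (z_a[i], z_b[i]).

    Every other embedding in the batch of 2N acts as a negative.
    """
    z = z_a + z_b  # 2N embeddings; the positive of index i is (i + N) mod 2N
    n = len(z_a)
    total = 0.0
    for i in range(2 * n):
        pos = (i + n) % (2 * n)
        # Denominator: similarities to all other embeddings (positive included).
        denom = sum(math.exp(cosine(z[i], z[k]) / tau) for k in range(2 * n) if k != i)
        total -= math.log(math.exp(cosine(z[i], z[pos]) / tau) / denom)
    return total / (2 * n)


if __name__ == "__main__":
    rng = random.Random(0)
    window = [0.1, 0.4, 0.3, 0.8]
    view_a, view_b = jitter(window, rng), jitter(window, rng)  # two augmented views
    print(len(view_a), len(view_b))  # 4 4
    anchors = [[1.0, 0.0], [0.0, 1.0]]
    aligned = [[0.9, 0.1], [0.1, 0.9]]  # positives point the same way as their anchors
    swapped = [[0.1, 0.9], [0.9, 0.1]]  # positives point away from their anchors
    print(nt_xent(anchors, aligned) < nt_xent(anchors, swapped))  # True
```

Minimizing this loss pulls the two augmented views of the same window together and pushes other windows apart; in a setup like the one described, the trained encoder would then be reused with a shallow classifier on the small labeled subset.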

Stress Classification and Personalization: Getting the most out of the least

Jul 12, 2021

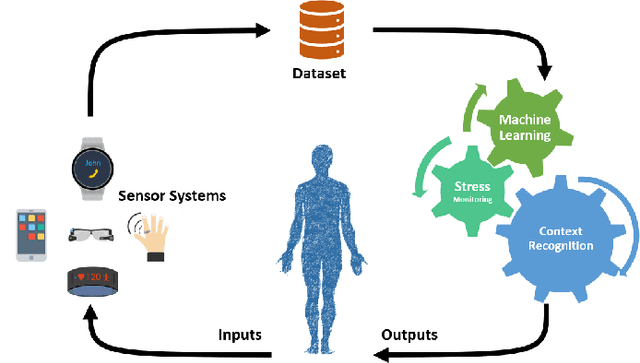

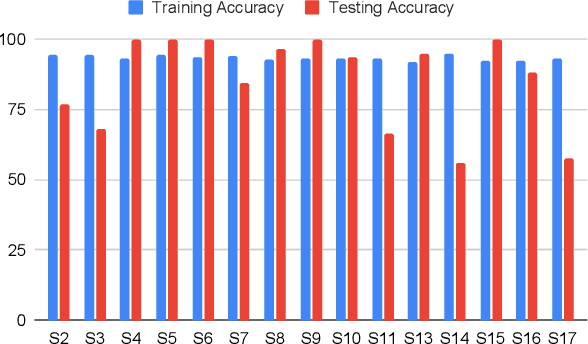

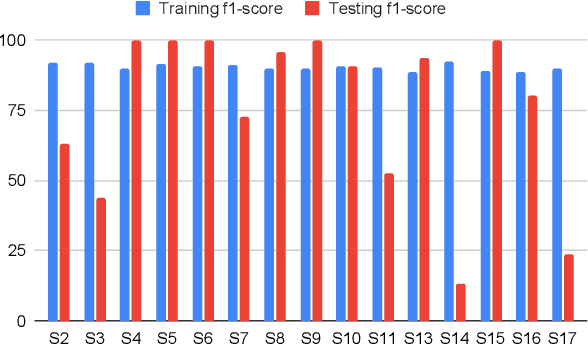

Abstract: Stress detection and monitoring is an active area of research with important implications for the personal, professional, and social health of an individual. Current approaches for affective state classification use traditional machine learning algorithms with features computed from multiple sensor modalities. These methods are data-intensive and rely on hand-crafted features, which limits the practical applicability of these sensor systems in daily life. To overcome these shortcomings, we propose a novel Convolutional Neural Network (CNN)-based stress detection and classification framework that requires no feature computation and uses data from only one sensor modality. Our method outperforms current state-of-the-art techniques, achieving a classification accuracy of 92.85% and an F1 score of 0.89. Through our leave-one-subject-out analysis, we also show the importance of personalizing stress models.
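A leave-one-subject-out analysis trains on all subjects but one, tests on the held-out subject, and rotates through every subject. The minimal sketch below shows only the fold construction; the `(subject_id, features, label)` tuple layout and the function name `leave_one_subject_out` are assumptions for illustration, and the CNN itself is omitted.

```python
from collections import defaultdict


def leave_one_subject_out(samples):
    """Yield (held_out_subject, train, test) folds.

    Each sample is an assumed (subject_id, features, label) tuple. No sample
    from the held-out subject ever appears in that fold's training set.
    """
    by_subject = defaultdict(list)
    for sample in samples:
        by_subject[sample[0]].append(sample)
    for held_out in sorted(by_subject):
        train = [s for s in samples if s[0] != held_out]
        yield held_out, train, by_subject[held_out]


if __name__ == "__main__":
    samples = [("S1", [0.2], 0), ("S1", [0.9], 1), ("S2", [0.4], 0), ("S3", [0.8], 1)]
    for subject, train, test in leave_one_subject_out(samples):
        print(subject, len(train), len(test))
    # S1 2 2
    # S2 3 1
    # S3 3 1
```

Averaging a metric across these folds estimates performance on an unseen person; comparing it with models trained on each subject's own data is one way to examine the personalization effect the abstract reports.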

Adversarial Transferability in Wearable Sensor Systems

Mar 17, 2020

Abstract: Machine learning has increasingly become the most widely used approach for inference and decision making in wearable sensor systems. However, recent studies have found that machine learning systems are easily fooled by the addition of adversarial perturbations to their inputs. More interestingly, adversarial examples generated for one machine learning system can also degrade the performance of another. This property of adversarial examples is called transferability. In this work, we take the first strides in studying adversarial transferability in wearable sensor systems from the following perspectives: 1) transferability between machine learning models, 2) transferability across subjects, 3) transferability across sensor locations, and 4) transferability across datasets. Using Human Activity Recognition (HAR) as an example sensor system, we found strong untargeted transferability in all cases. Specifically, gradient-based attacks achieved higher misclassification rates than non-gradient attacks. The misclassification rate of untargeted adversarial examples ranged from 20% to 98%. For targeted transferability between machine learning models, the success rate of adversarial examples was 100% for iterative attack methods. However, the success rate for other types of targeted transferability ranged from 0% to 20%. Our findings strongly suggest that adversarial transferability has serious consequences not only in sensor systems but also across the broad spectrum of ubiquitous computing.
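To make transferability concrete: an adversarial example is crafted against one (source) model and then fed to a different (target) model. The abstract does not list its specific attacks, so this minimal sketch assumes the fast gradient sign method (FGSM), one common gradient-based attack, and uses two hand-set logistic-regression classifiers as hypothetical stand-ins for HAR models. All function names, weights, and data points are illustrative.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def prob(model, x):
    """P(y = 1 | x) for a logistic-regression model given as (weights, bias)."""
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g):
    return (g > 0) - (g < 0)


def fgsm(model, x, y, eps):
    """Untargeted FGSM: step eps along the sign of d(BCE loss)/dx = (p - y) * w."""
    w, _ = model
    p = prob(model, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def transfer_misclassification_rate(source, target, data, eps):
    """Fraction of examples crafted on `source` that `target` misclassifies."""
    wrong = 0
    for x, y in data:
        x_adv = fgsm(source, x, y, eps)  # attacker only uses the source model's gradient
        predicted = 1 if prob(target, x_adv) >= 0.5 else 0
        wrong += predicted != y
    return wrong / len(data)


if __name__ == "__main__":
    source = ([1.0, 1.0], 0.0)  # model the attacker has gradients for
    target = ([1.0, 0.5], 0.0)  # a different model the attacker never queries
    data = [([0.3, 0.2], 1), ([-0.3, -0.2], 0)]
    print(transfer_misclassification_rate(source, target, data, eps=0.0))  # 0.0
    print(transfer_misclassification_rate(source, target, data, eps=0.5))  # 1.0
```

With `eps=0.0` the target classifies both clean points correctly; with `eps=0.5` the perturbations computed on the source model flip both of the target's predictions, which is the untargeted transfer effect the abstract measures at much larger scale.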