Rahul Harsha Cheppally

Axis-Aligned 3D Stalk Diameter Estimation from RGB-D Imagery

Sep 15, 2025

Abstract: Accurate, high-throughput phenotyping is a critical component of modern crop breeding programs, especially for improving traits such as mechanical stability, biomass production, and disease resistance. Stalk diameter is a key structural trait, but traditional measurement methods are labor-intensive, error-prone, and unsuitable for scalable phenotyping. In this paper, we present a geometry-aware computer vision pipeline for estimating stalk diameter from RGB-D imagery. Our method integrates deep learning-based instance segmentation, 3D point cloud reconstruction, and axis-aligned slicing via Principal Component Analysis (PCA) to perform robust diameter estimation. By mitigating the effects of curvature, occlusion, and image noise, this approach offers a scalable and reliable solution to support high-throughput phenotyping in breeding and agronomic research.
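The abstract does not spell out the slicing step, so the following is only a minimal sketch of what PCA-based axis-aligned slicing could look like, not the authors' implementation. It assumes the stalk has already been segmented and back-projected into an `(N, 3)` point array; the function name `estimate_stalk_diameter`, the slice count, and the choice of the median as the aggregate are all hypothetical.

```python
import numpy as np

def estimate_stalk_diameter(points, n_slices=10, min_points=3):
    """Estimate diameter of an elongated point cloud (hypothetical helper).

    points: (N, 3) array of stalk points in metric units.
    Returns the median width over slices taken along the main axis.
    """
    centered = points - points.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    main_axis, cross_axis = vt[0], vt[1]

    t = centered @ main_axis    # position of each point along the stalk
    w = centered @ cross_axis   # position across the stalk

    edges = np.linspace(t.min(), t.max(), n_slices + 1)
    widths = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_slice = (t >= lo) & (t <= hi)
        if in_slice.sum() < min_points:
            continue  # skip sparse slices, e.g. under occlusion
        widths.append(w[in_slice].max() - w[in_slice].min())
    # The median is used here as one way to damp outlier slices.
    return float(np.median(widths))
```

Slicing along the first principal axis rather than the image vertical means a tilted stalk is measured perpendicular to its own direction, which is the point of aligning to the axis before measuring.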

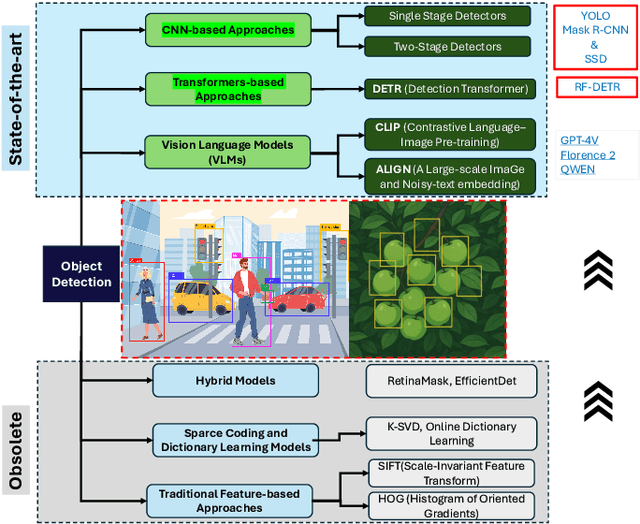

A Review of 3D Object Detection with Vision-Language Models

Apr 25, 2025

Abstract: This review provides a comprehensive survey of 3D object detection with vision-language models (VLMs), a rapidly advancing area at the intersection of 3D vision and multimodal AI. By examining over 100 research papers, we provide the first systematic analysis dedicated to this topic. We begin by outlining the unique challenges of 3D object detection with vision-language models, emphasizing differences from 2D detection in spatial reasoning and data complexity. Traditional approaches using point clouds and voxel grids are compared to modern vision-language frameworks like CLIP and 3D LLMs, which enable open-vocabulary detection and zero-shot generalization. We review key architectures, pretraining strategies, and prompt engineering methods that align textual and 3D features for effective detection. Visualization examples and evaluation benchmarks are discussed to illustrate performance and behavior. Finally, we highlight current challenges, such as limited 3D-language datasets and computational demands, and propose future research directions to advance the field.

Keywords: Object Detection, Vision-Language Models, Agents, VLMs, LLMs, AI

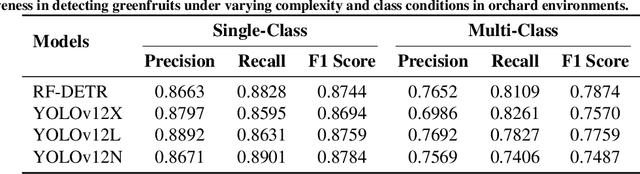

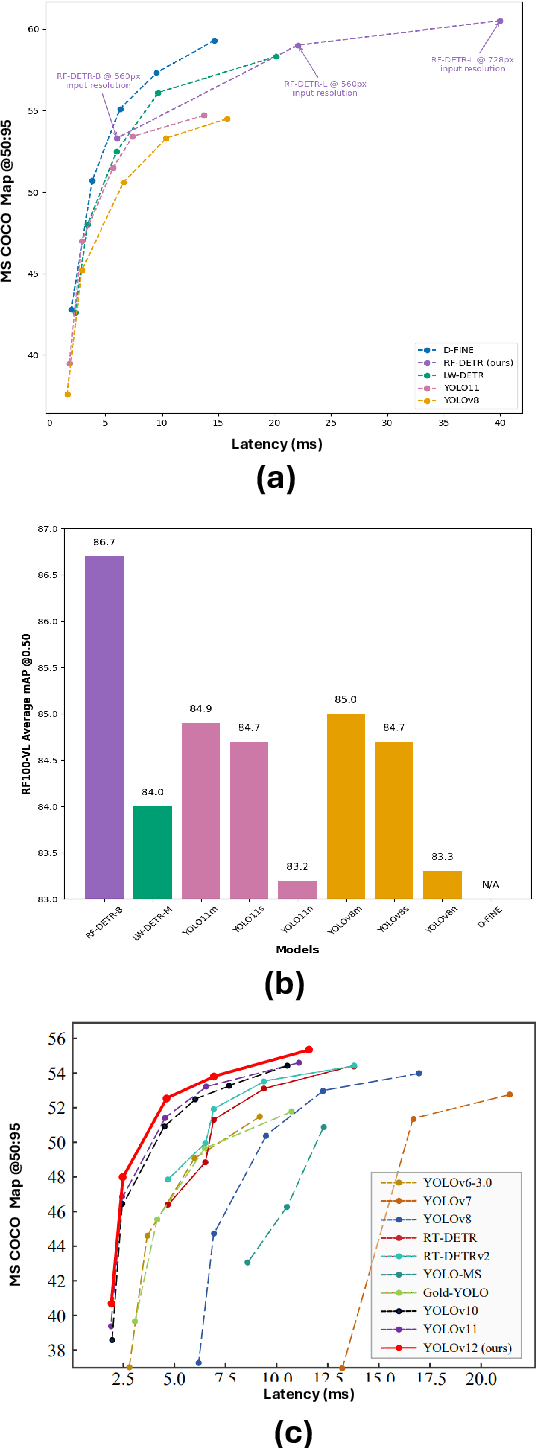

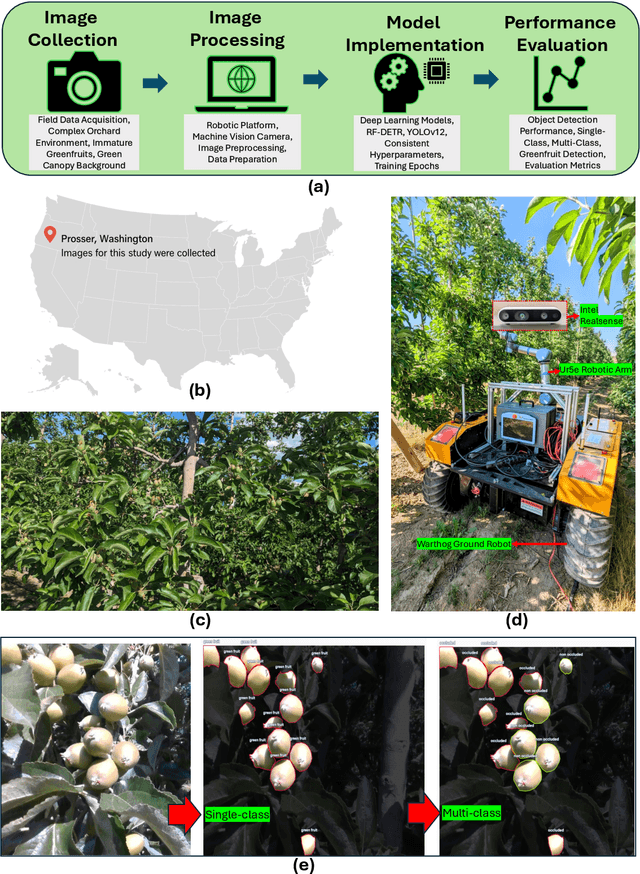

RF-DETR Object Detection vs YOLOv12 : A Study of Transformer-based and CNN-based Architectures for Single-Class and Multi-Class Greenfruit Detection in Complex Orchard Environments Under Label Ambiguity

Apr 17, 2025

Abstract: This study conducts a detailed comparison of the RF-DETR object detection base model and YOLOv12 object detection model configurations for detecting greenfruits in a complex orchard environment marked by label ambiguity, occlusions, and background blending. A custom dataset was developed featuring both single-class (greenfruit) and multi-class (occluded and non-occluded greenfruits) annotations to assess model performance under dynamic real-world conditions. The RF-DETR object detection model, utilizing a DINOv2 backbone and deformable attention, excelled in global context modeling, effectively identifying partially occluded or ambiguous greenfruits. In contrast, YOLOv12 leveraged CNN-based attention for enhanced local feature extraction, optimizing it for computational efficiency and edge deployment. RF-DETR achieved the highest mean Average Precision (mAP@50) of 0.9464 in single-class detection, indicating a superior ability to localize greenfruits in cluttered scenes. Although YOLOv12N recorded the highest mAP@50:95 of 0.7620, RF-DETR consistently outperformed it in complex spatial scenarios. For multi-class detection, RF-DETR led with an mAP@50 of 0.8298, showing its capability to differentiate between occluded and non-occluded fruits, while YOLOv12L scored highest in mAP@50:95 with 0.6622, indicating better classification in detailed occlusion contexts. Training dynamics analysis highlighted RF-DETR's swift convergence, particularly in single-class settings where it plateaued within 10 epochs, demonstrating the efficiency of transformer-based architectures in adapting to dynamic visual data. These findings validate RF-DETR's effectiveness for precision agricultural applications, with YOLOv12 suited for fast-response scenarios.

Index Terms: RF-DETR object detection, YOLOv12, YOLOv13, YOLOv14, YOLOv15, YOLOE, YOLO World, YOLO, You Only Look Once, Roboflow, Detection Transformers, CNNs
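The abstract reports both mAP@50 and mAP@50:95 without defining them. Assuming the usual COCO-style convention (a prediction matches a ground-truth box when their IoU meets a threshold, with mAP@50 using 0.50 and mAP@50:95 averaging over 0.50 to 0.95 in steps of 0.05), the difference can be sketched as below. The helper names are hypothetical, and this shows only the matching criterion, not a full mAP computation.

```python
def iou(box_a, box_b):
    """Intersection over union for boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# IoU thresholds 0.50, 0.55, ..., 0.95 (assumed COCO-style range).
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

def thresholds_passed(pred_box, gt_box):
    """Return the thresholds at which the prediction counts as a match."""
    value = iou(pred_box, gt_box)
    return [t for t in IOU_THRESHOLDS if value >= t]
```

A box with an IoU of 0.77 counts as correct at the 0.50 threshold but fails the stricter ones from 0.80 upward. Because the two metrics weight localization tightness differently, a model can lead on mAP@50 while another leads on mAP@50:95, as in the results reported above.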

RowDetr: End-to-End Row Detection Using Polynomials

Dec 13, 2024

Abstract: Crop row detection has garnered significant interest due to its critical role in enabling navigation in GPS-denied environments, such as under-canopy agricultural settings. To address this challenge, we propose RowDetr, an end-to-end neural network that utilizes smooth polynomial functions to delineate crop boundaries in image space. A novel energy-based loss function, PolyOptLoss, is introduced to enhance learning robustness, even with noisy labels. The proposed model demonstrates a 3% improvement over Agronav in key performance metrics while being six times faster, making it well-suited for real-time applications. Additionally, metrics from lane detection studies were adapted to comprehensively evaluate the system, showcasing its accuracy and adaptability in various scenarios.
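As a rough illustration of what a polynomial row representation in image space means, the sketch below fits a curve of the form x = p(y) to sampled boundary pixels with ordinary least squares. This is not RowDetr or PolyOptLoss: the network predicts its polynomials end to end, and the parameterization, degree, and function names here are assumptions made only for illustration.

```python
import numpy as np

def fit_row_polynomial(ys, xs, degree=2):
    """Least-squares fit of x = p(y) to row-boundary pixels (hypothetical helper).

    ys, xs: 1-D arrays of normalized image coordinates along one boundary.
    Returns polynomial coefficients, highest power first.
    """
    return np.polyfit(ys, xs, degree)

def sample_row(coeffs, y_values):
    """Evaluate the fitted boundary at the given image rows."""
    return np.polyval(coeffs, y_values)
```

Parameterizing by image row (y) rather than column keeps near-vertical crop rows single-valued, and a handful of coefficients describes the whole boundary as a smooth curve.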
