Qingxian Luo

Effects of quantum resources on the statistical complexity of quantum circuits

Feb 05, 2021

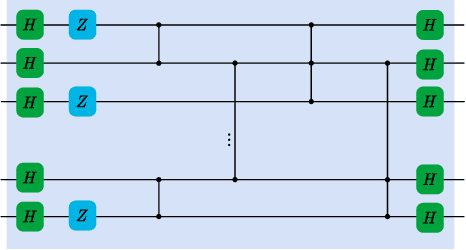

Abstract: We investigate how the addition of quantum resources changes the statistical complexity of quantum circuits by utilizing the framework of quantum resource theories. Measures of statistical complexity that we consider include the Rademacher complexity and the Gaussian complexity, which are well-known measures in computational learning theory that quantify the richness of classes of real-valued functions. We derive bounds for the statistical complexities of quantum circuits that have limited access to certain resources and apply our results to two special cases: (1) stabilizer circuits that are supplemented with a limited number of T gates and (2) instantaneous quantum polynomial-time Clifford circuits that are supplemented with a limited number of CCZ gates. We show that the increase in the statistical complexity of a quantum circuit when an additional quantum channel is added to it is upper bounded by the free robustness of the added channel. Finally, we derive bounds for the generalization error associated with learning from training data arising from quantum circuits.
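The Rademacher and Gaussian complexities named in the abstract above can be illustrated with a small Monte Carlo estimate. This is a minimal sketch for a finite class of real-valued functions evaluated on a fixed sample, not the construction used in the paper: the function name `empirical_complexity`, its parameters, and the convention of taking a supremum without absolute values are assumptions made for illustration, and the paper's own definitions may differ in normalization.

```python
import numpy as np

def empirical_complexity(outputs, kind="rademacher", n_draws=2000, seed=0):
    """Monte Carlo estimate of an empirical complexity of a finite function class.

    outputs: array of shape (num_functions, m); row k holds f_k(x_1), ..., f_k(x_m).
    kind:    "rademacher" uses uniform +/-1 noise, "gaussian" uses standard normal noise.
    Returns the average over noise draws of max_k (1/m) * sum_i noise_i * f_k(x_i).
    """
    rng = np.random.default_rng(seed)
    outputs = np.asarray(outputs, dtype=float)
    m = outputs.shape[1]
    if kind == "rademacher":
        noise = rng.choice([-1.0, 1.0], size=(n_draws, m))
    elif kind == "gaussian":
        noise = rng.standard_normal((n_draws, m))
    else:
        raise ValueError("kind must be 'rademacher' or 'gaussian'")
    # correlations[d, k] = (1/m) * sum_i noise[d, i] * outputs[k, i]
    correlations = noise @ outputs.T / m
    # Supremum over the class for each draw, then average over draws.
    return float(correlations.max(axis=1).mean())

# Hypothetical example: all 8 sign patterns on m = 3 sample points.
all_signs = np.array([[a, b, c] for a in (-1, 1) for b in (-1, 1) for c in (-1, 1)])
rich = empirical_complexity(all_signs)       # class fits any sign noise perfectly
poor = empirical_complexity(all_signs[:2])   # a two-function subclass
```

A richer class correlates better with random noise, so its estimate is larger; this is the sense in which these quantities measure the richness of a function class.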

On the statistical complexity of quantum circuits

Jan 15, 2021

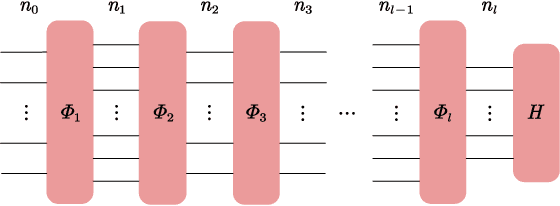

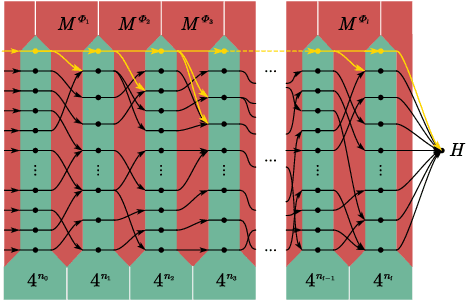

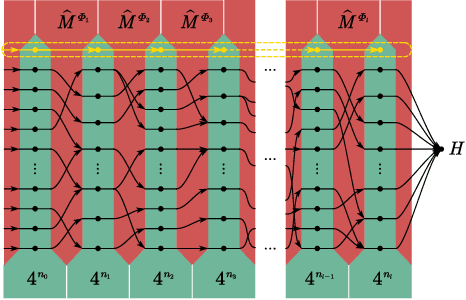

Abstract: In theoretical machine learning, the statistical complexity is a notion that measures the richness of a hypothesis space. In this work, we apply a particular measure of statistical complexity, namely the Rademacher complexity, to the quantum circuit model in quantum computation and study how the statistical complexity depends on various quantum circuit parameters. In particular, we investigate the dependence of the statistical complexity on the resources, depth, width, and the number of input and output registers of a quantum circuit. To study how the statistical complexity scales with resources in the circuit, we introduce a resource measure of magic based on the $(p,q)$ group norm, which quantifies the amount of magic in the quantum channels associated with the circuit. These dependencies are investigated in the following two settings: (i) where the entire quantum circuit is treated as a single quantum channel, and (ii) where each layer of the quantum circuit is treated as a separate quantum channel. The bounds we obtain can be used to constrain the capacity of quantum neural networks in terms of their depths and widths as well as the resources in the network.

Depth-Width Trade-offs for Neural Networks via Topological Entropy

Oct 15, 2020

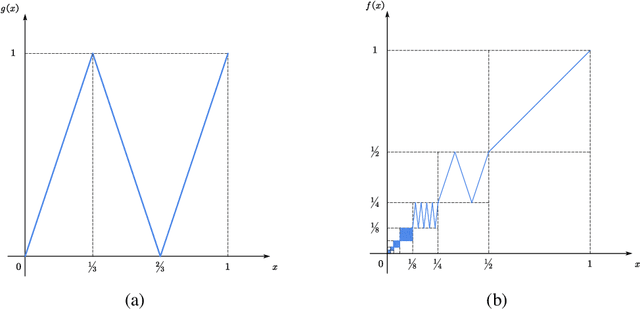

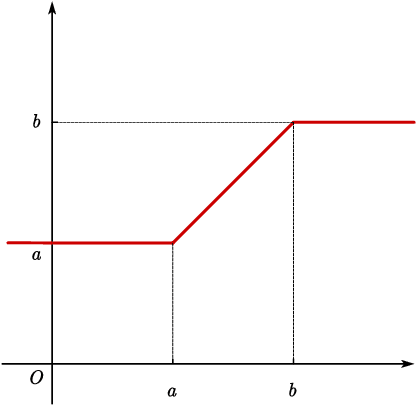

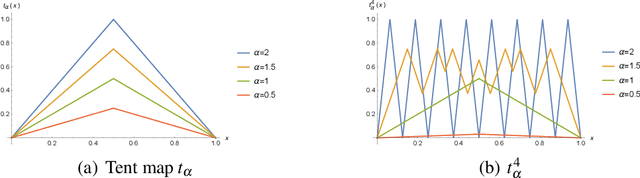

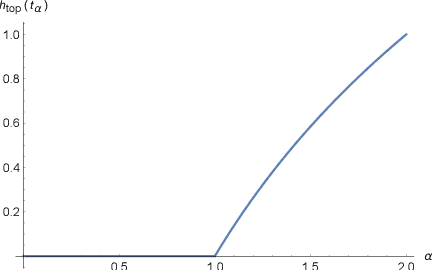

Abstract: One of the central problems in the study of deep learning theory is to understand how structural properties, such as depth, width, and the number of nodes, affect the expressivity of deep neural networks. In this work, we show a new connection between the expressivity of deep neural networks and topological entropy from dynamical systems, which can be used to characterize depth-width trade-offs of neural networks. We provide an upper bound on the topological entropy of neural networks with continuous semi-algebraic units in terms of their structural parameters. Specifically, the topological entropy of a ReLU network with $l$ layers and $m$ nodes per layer is upper bounded by $O(l\log m)$. Furthermore, if the neural network is a good approximation of some function $f$, then the size of the neural network has an exponential lower bound with respect to the topological entropy of $f$. Moreover, we discuss the relationship between topological entropy, the number of oscillations, periods, and the Lipschitz constant.
