Qing-Hao Meng

SAD-TIME: a Spatiotemporal-fused network for depression detection with Automated multi-scale Depth-wise and TIME-interval-related common feature extractor

Nov 13, 2024

Abstract: Background and Objective: Depression is a severe mental disorder, and accurate diagnosis is pivotal to the cure and rehabilitation of people with depression. However, current questionnaire-based diagnostic methods can introduce subjective bias and may be rejected by subjects. In search of a more objective means of diagnosis, researchers have in recent years begun to experiment with deep learning-based methods for identifying depressive disorders. Methods: In this study, a novel Spatiotemporal-fused network with Automated multi-scale Depth-wise and TIME-interval-related common feature extractor (SAD-TIME) is proposed. SAD-TIME incorporates an automated common feature extractor (CFE) for the nodes, a spatial sector (SpS), a modified temporal sector (TeS), and a domain adversarial learner (DAL). The CFE includes a multi-scale depth-wise 1D convolutional neural network and a time-interval embedding generator, so that the unique information of each channel is preserved. The SpS fuses the functional connectivity with a distance-based connectivity that encodes the spatial positions of the EEG electrodes. A multi-head-attention graph convolutional network is also applied in the SpS to fuse the features from different EEG channels. The TeS is based on long short-term memory and graph transformer networks, and fuses the temporal information of different time windows. Moreover, the DAL is used after the SpS to obtain domain-invariant features. Results: Experimental results under tenfold cross-validation show that the proposed SAD-TIME method achieves depression classification accuracies of 92.00% and 94.00% on two datasets, respectively, in cross-subject mode. Conclusion: SAD-TIME is a robust depression detection model, in which the automatically generated features, the SpS, and the TeS improve classification performance by fusing the innate spatiotemporal information in the EEG signals.
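To make the idea of a multi-scale depth-wise 1D convolution concrete, here is a minimal pure-Python sketch. It is not the authors' CFE: the function names, kernel sizes, and kernel values are all hypothetical, and a real model would learn the kernels. The point it illustrates is that each channel is filtered only by its own kernels, so no information is mixed across channels at this stage.

```python
def depthwise_conv1d(signal, kernels):
    """Filter each channel with its own kernel ('same' zero padding).

    signal  : list of channels, each a list of samples
    kernels : one odd-length kernel per channel (hypothetical values)
    Uses cross-correlation (no kernel flip), as deep learning libraries do.
    """
    out = []
    for channel, kernel in zip(signal, kernels):
        half = len(kernel) // 2
        padded = [0.0] * half + list(channel) + [0.0] * half
        out.append([
            sum(k * padded[t + i] for i, k in enumerate(kernel))
            for t in range(len(channel))
        ])
    return out


def multi_scale_depthwise(signal, kernel_bank):
    """Apply several kernel sizes and group the results per channel.

    kernel_bank : dict mapping kernel size -> list of per-channel kernels
    Returns, for each channel, one filtered sequence per scale
    (scales ordered by kernel size).
    """
    per_scale = [
        depthwise_conv1d(signal, kernels)
        for _, kernels in sorted(kernel_bank.items())
    ]
    return [[scale[c] for scale in per_scale] for c in range(len(signal))]


# Two toy "EEG channels" with four samples each.
signal = [[1, 2, 3, 4], [0, 0, 1, 0]]
kernel_bank = {
    1: [[1.0], [2.0]],                   # scale 1: per-channel gain
    3: [[1.0, 1.0, 1.0], [0.0, 1.0, 0.0]],  # scale 3: sum window / identity
}
features = multi_scale_depthwise(signal, kernel_bank)
```

In a framework such as PyTorch, the same channel-wise behaviour is usually obtained with a grouped convolution whose number of groups equals the number of input channels.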

Heatmap-based Vanishing Point boosts Lane Detection

Jul 30, 2020

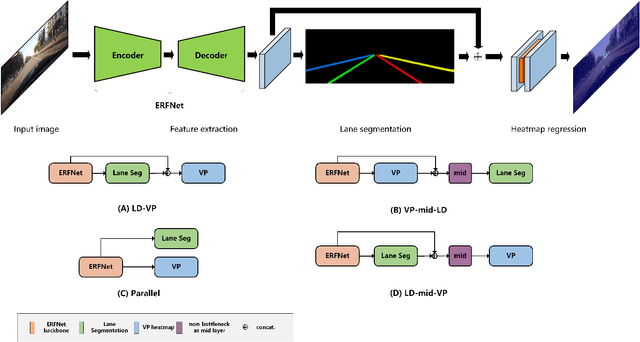

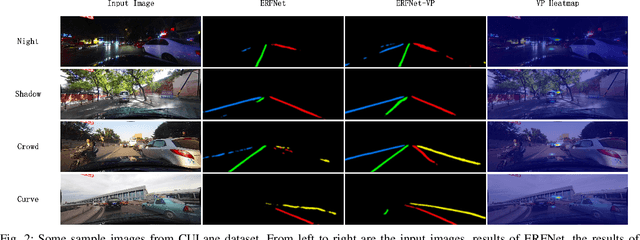

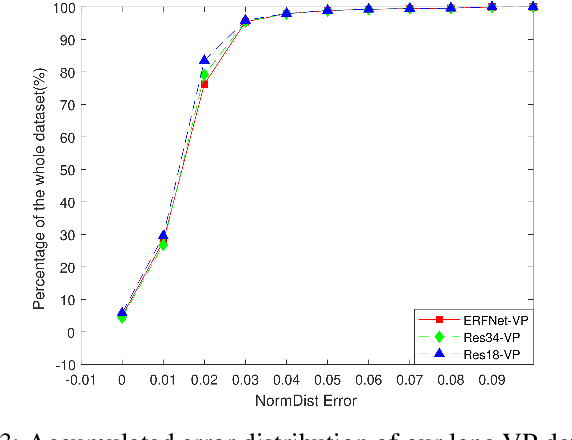

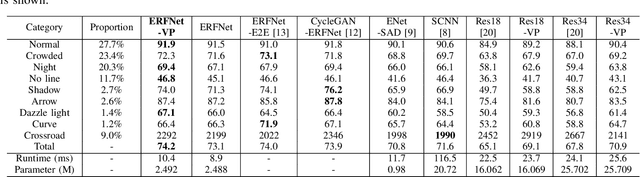

Abstract: Vision-based lane detection (LD) is a key part of autonomous driving technology, and it is also a challenging problem. As one of the important constraints of scene composition, the vanishing point (VP) may provide a useful clue for lane detection. In this paper, we propose a new multi-task fusion network architecture for high-precision lane detection. First, ERFNet is used as the backbone to extract the hierarchical features of the road image. Then, the lanes are detected using image segmentation. Finally, combining the output of lane detection with the hierarchical features extracted by the backbone, the lane VP is predicted using heatmap regression. The proposed fusion strategy was tested on the public CULane dataset. The experimental results suggest that the lane detection accuracy of our method exceeds that of state-of-the-art (SOTA) methods.

D-VPnet: A Network for Real-time Dominant Vanishing Point Detection in Natural Scenes

Jun 09, 2020

Abstract: As an important part of linear perspective, vanishing points (VPs) provide useful clues for mapping objects from 2D photos to 3D space. Existing methods mainly focus on extracting structural features such as lines or contours and then clustering these features to detect VPs. However, these techniques suffer from ambiguous information due to the large number of line segments and contours detected in outdoor environments. In this paper, we present a new convolutional neural network (CNN) to detect dominant VPs in natural scenes, i.e., the Dominant Vanishing Point detection Network (D-VPnet). The key component of our method is the feature line-segment proposal unit (FLPU), which can be directly utilized to predict the location of the dominant VP. Moreover, the model also uses the two main parallel lines as an aid in determining the position of the dominant VP. The proposed method was tested on a public dataset and a Parallel Line based Vanishing Point (PLVP) dataset. The experimental results suggest that the detection accuracy of our approach exceeds that of state-of-the-art methods under various conditions while running in real time at 115 fps.
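The geometric fact behind using two parallel lines is that lines parallel in 3D meet at the vanishing point in the image. The sketch below shows only that textbook relation, using homogeneous coordinates; it is not D-VPnet or the FLPU, and the function names and sample points are hypothetical.

```python
def line_through(p, q):
    """Homogeneous line (a, b, c) with a*x + b*y + c = 0 through two image points."""
    (x1, y1), (x2, y2) = p, q
    # Cross product of (x1, y1, 1) and (x2, y2, 1).
    return (y1 - y2, x2 - x1, x1 * y2 - x2 * y1)


def intersect(l1, l2, eps=1e-9):
    """Intersection of two homogeneous lines, or None if they are parallel in the image."""
    a1, b1, c1 = l1
    a2, b2, c2 = l2
    w = a1 * b2 - a2 * b1          # third component of l1 x l2
    if abs(w) < eps:
        return None                # intersection lies at infinity
    x = (b1 * c2 - b2 * c1) / w
    y = (c1 * a2 - c2 * a1) / w
    return (x, y)


# Hypothetical left and right road edges, each given by two pixel positions.
left_edge = line_through((0, 100), (25, 75))
right_edge = line_through((100, 100), (75, 75))
vanishing_point = intersect(left_edge, right_edge)
```

For these sample edges the two lines are x + y = 100 and x = y, so they meet at pixel (50, 50).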

Unstructured Road Vanishing Point Detection Using the Convolutional Neural Network and Heatmap Regression

Jun 08, 2020

Abstract: Unstructured road vanishing point (VP) detection is a challenging problem, especially in the field of autonomous driving. In this paper, we propose a novel solution that combines a convolutional neural network (CNN) with heatmap regression to detect the unstructured road VP. The proposed algorithm first adopts a lightweight backbone, i.e., an HRNet modified with depthwise convolution, to extract hierarchical features of the unstructured road image. Then, three advanced strategies, i.e., multi-scale supervised learning, heatmap super-resolution, and coordinate regression, are used to achieve fast and high-precision unstructured road VP detection. The empirical results on Kong's dataset show that our proposed approach achieves the highest detection accuracy among state-of-the-art methods under various conditions while running in real time, reaching a top speed of 33 fps.
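Heatmap regression represents a point such as the VP as a 2D response map that peaks at the point's location. The following minimal sketch illustrates the general idea only, under stated assumptions: a Gaussian target, an argmax decoder, and an intensity-weighted centroid decoder for sub-pixel coordinates. These are common generic choices, not the paper's network, its heatmap super-resolution, or its coordinate regression module, and all names and parameters are hypothetical.

```python
import math


def gaussian_heatmap(width, height, cx, cy, sigma=2.0):
    """Target heatmap: a Gaussian bump centred on the point (cx, cy)."""
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def decode_argmax(heatmap):
    """Integer pixel with the largest response; limited to grid resolution."""
    best_value, best_xy = -1.0, (0, 0)
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            if value > best_value:
                best_value, best_xy = value, (x, y)
    return best_xy


def decode_centroid(heatmap):
    """Intensity-weighted centroid; gives a sub-pixel estimate of the peak."""
    total = sum(sum(row) for row in heatmap)
    x = sum(v * x for row in heatmap for x, v in enumerate(row)) / total
    y = sum(v * y for y, row in enumerate(heatmap) for v in row) / total
    return (x, y)


# A 32 x 24 heatmap for a hypothetical point at the sub-pixel position (10.3, 7.6).
heatmap = gaussian_heatmap(32, 24, 10.3, 7.6)
coarse = decode_argmax(heatmap)     # nearest grid cell
fine = decode_centroid(heatmap)     # close to the true sub-pixel location
```

The gap between the two decoders shows why low-resolution heatmaps cap accuracy when only the argmax is used, which is one motivation for adding a sub-pixel refinement step.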
