Puranjay Mohan

A Tiny CNN Architecture for Medical Face Mask Detection for Resource-Constrained Endpoints

Dec 22, 2020

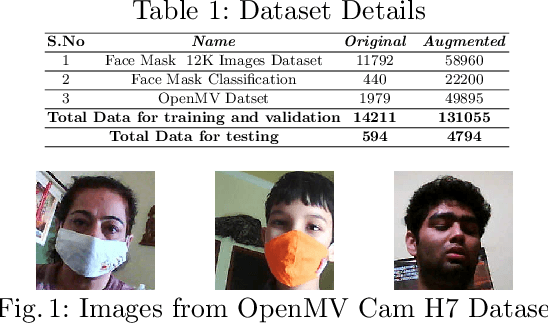

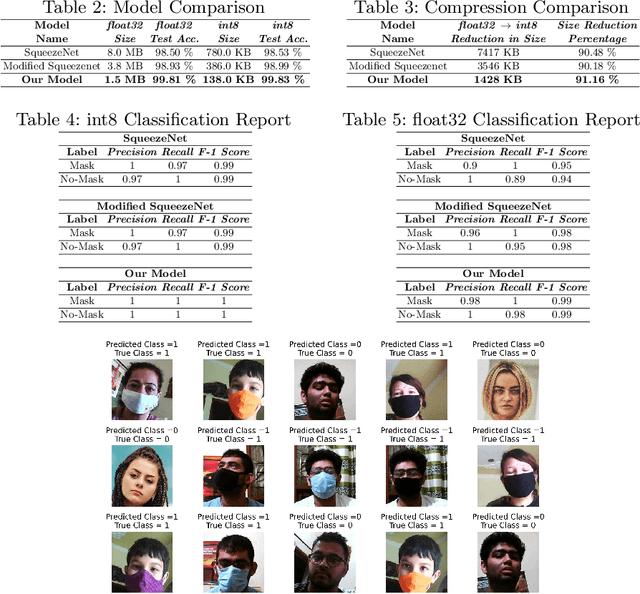

Abstract: The world is going through one of the most dangerous pandemics of all time with the rapid spread of the novel coronavirus (COVID-19). According to the World Health Organization, the most effective way to thwart the transmission of coronavirus is to wear medical face masks. Monitoring the use of face masks in public places has been a challenge because manual monitoring could be unsafe. This paper proposes an architecture for detecting medical face masks that can be deployed on resource-constrained endpoints with extremely low memory footprints. The model is deployed on a small development board with an ARM Cortex-M7 microcontroller clocked at 480 MHz and having just 496 KB of framebuffer RAM. The model is quantized using the TensorFlow Lite framework to further reduce its size. The proposed model is 138 KB after quantization and runs at an inference speed of 30 FPS.
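To make the size reduction from quantization concrete, here is a minimal sketch of affine 8-bit quantization in plain Python. It is not the paper's code and not TensorFlow Lite's implementation; the function names `quantize_int8` and `dequantize` and the sample weights are hypothetical, chosen only for illustration.

```python
# Illustrative sketch only: affine (asymmetric) 8-bit quantization of a weight list.
# Assumption: this mirrors the general idea behind post-training quantization,
# not the exact procedure used by TensorFlow Lite or by the paper.

def quantize_int8(weights):
    """Map floats to int8 using a scale and zero point derived from the value range."""
    lo, hi = min(weights), max(weights)
    lo, hi = min(lo, 0.0), max(hi, 0.0)   # include 0 so that zero is exactly representable
    scale = (hi - lo) / 255.0 or 1.0      # fall back to 1.0 for an all-zero tensor
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    """Recover approximate float values from the int8 codes."""
    return [(v - zero_point) * scale for v in q]

weights = [-0.51, -0.2, 0.0, 0.13, 0.77]   # hypothetical sample weights
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)

# Rounding error per weight, and storage cost before and after.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
float32_bytes = 4 * len(weights)
int8_bytes = 1 * len(weights)
```

Storing one byte per weight instead of four is where the roughly fourfold size reduction comes from, at the cost of a small rounding error per weight.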

Rethinking Generalization in American Sign Language Prediction for Edge Devices with Extremely Low Memory Footprint

Nov 27, 2020

Abstract: Due to the boom in technical compute in the last few years, the world has seen massive advances in artificially intelligent systems solving diverse real-world problems. But a major roadblock to the ubiquitous acceptance of these models is their enormous computational complexity and memory footprint. Hence, efficient architectures and training techniques are required for deployment on extremely low-resource inference endpoints. This paper proposes an architecture for detecting letters of the American Sign Language alphabet on an ARM Cortex-M7 microcontroller having just 496 KB of framebuffer RAM. Parameter quantization is a common technique for shrinking models, but it can cause varying drops in test accuracy. This paper proposes using interpolation as augmentation, amongst other techniques, as an efficient method of reducing this drop, which also helps the model generalize well to previously unseen noisy data. The proposed model is about 185 KB after quantization and runs at an inference speed of 20 frames per second.
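The abstract does not spell out how interpolation is used as augmentation. One plausible reading, assumed here, is that each training image is resized with several interpolation methods so the model sees slightly different versions of the same sample. The sketch below illustrates that reading in plain Python on a tiny grayscale image; the helper names `resize_nearest`, `resize_bilinear`, and `augment` are hypothetical and are not taken from the paper.

```python
# Illustrative sketch only: produce resized variants of one image with two
# interpolation methods. Assumption: this is one possible interpretation of
# "interpolation as augmentation", not the paper's documented procedure.

def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of a 2D list of pixel values."""
    in_h, in_w = len(img), len(img[0])
    return [[img[min(in_h - 1, y * in_h // out_h)][min(in_w - 1, x * in_w // out_w)]
             for x in range(out_w)] for y in range(out_h)]

def resize_bilinear(img, out_h, out_w):
    """Bilinear resize with corner-aligned sampling."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for y in range(out_h):
        # Map the output row to a fractional source row.
        fy = y * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(fy)
        y1 = min(y0 + 1, in_h - 1)
        wy = fy - y0
        row = []
        for x in range(out_w):
            fx = x * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(fx)
            x1 = min(x0 + 1, in_w - 1)
            wx = fx - x0
            # Blend the four neighbouring source pixels.
            top = img[y0][x0] * (1 - wx) + img[y0][x1] * wx
            bottom = img[y1][x0] * (1 - wx) + img[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out

def augment(img, out_h, out_w):
    """Return one resized variant per interpolation method."""
    return [resize_nearest(img, out_h, out_w), resize_bilinear(img, out_h, out_w)]

image = [[0, 100], [100, 200]]   # hypothetical 2x2 grayscale sample
variants = augment(image, 3, 3)
```

The two variants have the same shape but different pixel values, which is the kind of small, label-preserving variation an augmentation step is meant to introduce.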
