Puduru Viswanadha Reddy

Lyapunov stochastic stability and control of robust dynamic coalitional games with transferable utilities

Apr 23, 2012

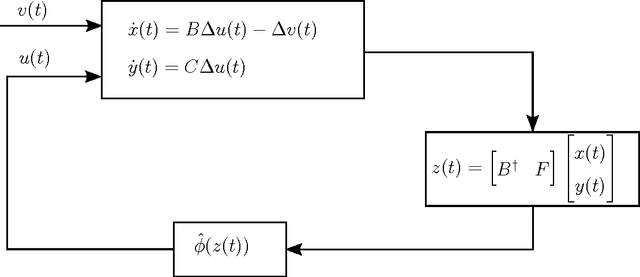

Abstract: This paper considers a dynamic game with transferable utilities (TU), where the characteristic function is a continuous-time, bounded, mean-ergodic process. A central planner interacts continuously over time with the players by choosing the instantaneous allocations subject to budget constraints. Before the game starts, the central planner knows the nature of the process (bounded and mean ergodic), the bounded set from which the coalitions' values are sampled, and the long-run average coalitions' values. However, he has no knowledge of the underlying probability distribution generating the coalitions' values. Our goal is to find allocation rules that redistribute the budget among the players using a measure of the extra reward that each coalition has received up to the current time. The objective is twofold: i) guaranteeing convergence of the average allocations to the core (or to a specific point in the core) of the average game, and ii) driving the coalitions' excesses to an a priori given cone. The resulting allocation rules are robust, as they guarantee these convergence properties despite the uncertain and time-varying nature of the coalitions' values. We highlight three main contributions. First, we design an allocation rule based on full observation of the extra reward so that the average allocation approaches a specific point in the core of the average game, while the coalitions' excesses converge to an a priori given direction. Second, we design a new allocation rule based on partial observation of the extra reward so that the average allocation converges to the core of the average game, while the coalitions' excesses converge to an a priori given cone. Third, we establish connections to approachability theory and attainability theory.
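The abstract relies on two standard TU-game notions: the core of the (time-)average game and the coalitions' excesses. The Python sketch below is illustrative only and is not the authors' allocation rule. It approximates the average game by averaging sampled characteristic functions, computes excesses, and checks core membership. It assumes the common convention e(S, x) = v(S) − Σ_{i∈S} x_i (the paper may use the opposite sign), and all function names and the symmetric 3-player game values are hypothetical.

```python
from itertools import combinations


def coalitions(n):
    """All nonempty coalitions of players 0..n-1 (including the grand coalition)."""
    return [frozenset(c) for k in range(1, n + 1) for c in combinations(range(n), k)]


def make_game(n, pair_value, grand_value):
    """Hypothetical symmetric game: singletons earn 0, intermediate coalitions
    earn pair_value, the grand coalition earns grand_value."""
    v = {}
    for S in coalitions(n):
        if len(S) == 1:
            v[S] = 0.0
        elif len(S) == n:
            v[S] = grand_value
        else:
            v[S] = pair_value
    return v


def average_game(samples):
    """Empirical time-average of sampled characteristic functions (same coalitions)."""
    return {S: sum(v[S] for v in samples) / len(samples) for S in samples[0]}


def excess(v, x, S):
    """Excess of coalition S at allocation x: v(S) minus what S receives under x."""
    return v[S] - sum(x[i] for i in S)


def in_core(v, x, n, tol=1e-9):
    """x is in the core if it is efficient and no coalition has positive excess."""
    grand = frozenset(range(n))
    if abs(sum(x) - v[grand]) > tol:
        return False
    return all(excess(v, x, S) <= tol for S in coalitions(n))
```

For example, averaging a sample where two-player coalitions are worth 0.4 with one where they are worth 0.8 gives an average game in which the equal split (1/3, 1/3, 1/3) lies in the core, even though it is not in the core of the second sample alone. This illustrates why the convergence target is the core of the average game rather than that of each instantaneous game.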
